Available with Spatial Analyst license.

Available with Image Analyst license.

Assessing the accuracy of the classification and interpreting the results are discussed below.

Assess the accuracy of the classification

The Accuracy Assessment tool uses a reference dataset to determine the accuracy of a classified result. The values of the reference dataset must match the classification schema. Reference data can be in the following formats:

- A raster dataset that is a classified image.

- A polygon feature class or a shapefile. Its attribute table must use the same format as the training samples. To ensure this, create the reference dataset with the Training Samples Manager page, which reads and writes datasets in that format.

- A point feature class or a shapefile. The format must match the output of the Create Accuracy Assessment Points tool. If you are using an existing file and want to convert it to the appropriate format, use the Create Accuracy Assessment Points tool.

To perform an accuracy assessment, specify two parameters: Number of Random Points and Sampling Strategy.

Number of Random Points

The Number of Random Points parameter specifies the total number of random points to generate. Depending on the sampling strategy and the number of classes, the actual number of points can exceed this value but will not fall below it. The default is 500.

Sampling Strategy

Specify a sampling method to use.

- Stratified Random—Create points that are randomly distributed within each class, with each class receiving a number of points proportional to its relative area in the image. Because the sample mirrors the class proportions, the final accuracy assessment result is representative of the image. This is the default.

- Equalized Stratified Random—Create points that are randomly distributed within each class, with each class receiving the same number of points regardless of how much area it covers in the image. The final accuracy assessment result is not representative of the image; instead, it measures accuracy by giving the same weight to each class. This is an alternative to stratified random sampling for accuracy assessment.

- Random—Create points that are randomly distributed throughout the image, with no stratification. The final accuracy assessment result is representative of the image; however, when a small number of sample points is used, classes that cover small areas may be missed or under-represented.
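The three strategies differ mainly in how many points each class receives. The following Python sketch illustrates the idea on a small classified grid held in memory as a list of lists. The function name `sample_points`, its parameters, and the rule of rounding each class quota up (so the total can exceed, but not fall below, the requested number) are assumptions for illustration, not the tool's actual implementation.

```python
import math
import random
from collections import defaultdict


def sample_points(classified, n_points=500, strategy="stratified", seed=0):
    """Hypothetical sketch: pick (row, col) sample locations from a classified grid.

    classified: list of lists of class values (a stand-in for a classified raster).
    strategy: "stratified", "equalized", or "random".
    """
    rng = random.Random(seed)

    # Group pixel locations by classified value.
    by_class = defaultdict(list)
    for r, row in enumerate(classified):
        for c, value in enumerate(row):
            by_class[value].append((r, c))
    n_pixels = sum(len(locs) for locs in by_class.values())

    if strategy == "random":
        # No stratification: draw locations uniformly from the whole image.
        all_locs = [loc for locs in by_class.values() for loc in locs]
        return rng.sample(all_locs, min(n_points, n_pixels))

    if strategy == "stratified":
        # Points per class proportional to the class area, rounded up.
        quota = {k: math.ceil(n_points * len(v) / n_pixels) for k, v in by_class.items()}
    elif strategy == "equalized":
        # The same number of points for every class, rounded up.
        per_class = math.ceil(n_points / len(by_class))
        quota = {k: per_class for k in by_class}
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")

    points = []
    for value, locs in by_class.items():
        # A class cannot supply more points than it has pixels.
        points.extend(rng.sample(locs, min(quota[value], len(locs))))
    return points


# A 100 x 100 image in which class 1 covers 75% of the pixels and class 2 covers 25%.
grid = [[1 if c < 75 else 2 for c in range(100)] for _ in range(100)]
for strategy in ("stratified", "equalized", "random"):
    pts = sample_points(grid, n_points=100, strategy=strategy)
    n_class_2 = sum(1 for r, c in pts if grid[r][c] == 2)
    print(strategy, len(pts), n_class_2)
```

With 100 requested points, stratified random gives class 2 exactly 25 points and equalized stratified random gives it 50, while the count under random sampling varies around 25 from one seed to the next.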

Understand the results

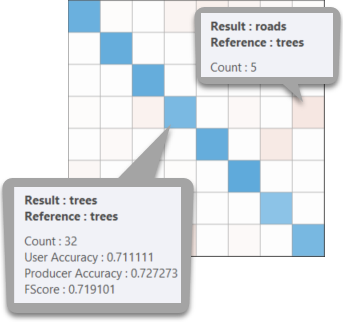

Once you run the tool, a graphical representation of the confusion matrix is shown. Hover over a cell to see the Count, User Accuracy, Producer Accuracy, and FScore values. The kappa score is also displayed at the bottom of the pane. The output table is added to the Contents pane.

Analyze the diagonal

Accuracy is represented on a scale from 0 to 1, where 1 represents 100 percent accuracy. The Producer Accuracy and User Accuracy values for each class are indicated along the diagonal. Diagonal cells range from light to dark blue, with darker blue indicating higher accuracy. Hover over a diagonal cell to see its accuracy values and F score.
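The F score combines user's and producer's accuracy into a single number. The sketch below assumes it is the standard F1 score, the harmonic mean of the two accuracies; that formula is an assumption and is not stated on this page. The example values come from class c_1 in the confusion matrix table later in this section.

```python
def f_score(user_accuracy, producer_accuracy):
    """F1 score: harmonic mean of user's accuracy and producer's accuracy (assumed formula)."""
    if user_accuracy + producer_accuracy == 0:
        return 0.0
    return 2 * user_accuracy * producer_accuracy / (user_accuracy + producer_accuracy)


# Class c_1: 49 matching points, 57 points classified as c_1, 54 reference points of c_1.
ua = 49 / 57
pa = 49 / 54
print(round(f_score(ua, pa), 4))  # 0.8829
```

Because the harmonic mean is pulled toward the smaller of the two values, a class scores well only when both its user's and producer's accuracy are high.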

The colored cells off the diagonal indicate the number of points that were confused between pairs of classes. Hover over an off-diagonal cell to see the confusion matrix result for that pair of classes.
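To find the most confused class pairs outside the pane, you can rank the off-diagonal cells of the output table. This Python sketch assumes the layout of the example table in the next subsection, where rows hold classified values and columns hold reference values; the function name `confused_pairs` is hypothetical.

```python
def confused_pairs(matrix, labels):
    """Return off-diagonal cells as (classified, reference, count), largest count first.

    matrix[i][j] is the number of points classified as labels[i] whose
    reference class is labels[j].
    """
    pairs = [
        (labels[i], labels[j], matrix[i][j])
        for i in range(len(labels))
        for j in range(len(labels))
        if i != j and matrix[i][j] > 0
    ]
    # sorted() is stable, so ties keep their row-by-row order.
    return sorted(pairs, key=lambda pair: pair[2], reverse=True)


matrix = [[49, 4, 4], [2, 40, 2], [3, 3, 59]]
labels = ["c_1", "c_2", "c_3"]
for classified, reference, count in confused_pairs(matrix, labels):
    print(f"{count} reference {reference} points were classified as {classified}")
```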

View the output confusion matrix

To examine the details of the error report, load the report into the Contents pane and open it. It is a .dbf file located in the project or in the output folder you specified. The confusion matrix table lists the user's accuracy (U_Accuracy column) and producer's accuracy (P_Accuracy row) for each class, as well as an overall kappa statistic index of agreement. Rows contain the classified values, and columns contain the reference values. These accuracy rates range from 0 to 1, where 1 represents 100 percent accuracy. The following is an example of a confusion matrix:

|  | c_1 | c_2 | c_3 | Total | U_Accuracy | Kappa |
|---|---|---|---|---|---|---|
| c_1 | 49 | 4 | 4 | 57 | 0.8596 | 0 |
| c_2 | 2 | 40 | 2 | 44 | 0.9091 | 0 |
| c_3 | 3 | 3 | 59 | 65 | 0.9077 | 0 |
| Total | 54 | 47 | 65 | 166 | 0 | 0 |
| P_Accuracy | 0.9074 | 0.8511 | 0.9077 | 0 | 0.8916 | 0 |
| Kappa | 0 | 0 | 0 | 0 | 0 | 0.8357 |
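The totals and accuracy fields in this table follow directly from the counts. The Python sketch below recomputes the Total row and column, U_Accuracy, P_Accuracy, and the overall accuracy (the value where the P_Accuracy row meets the U_Accuracy column) from the example counts. It illustrates the arithmetic only and is not the tool's code; the `summarize` name is hypothetical.

```python
def summarize(matrix):
    """Compute the totals and accuracy fields of a confusion matrix table.

    matrix[i][j] is the number of points classified as class i whose reference class is j.
    """
    n = len(matrix)
    row_totals = [sum(row) for row in matrix]                             # Total column
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]  # Total row
    diagonal = [matrix[k][k] for k in range(n)]
    total = sum(row_totals)
    return {
        "Total column": row_totals,
        "Total row": col_totals,
        # Correct points divided by points classified as the class (row-wise).
        "U_Accuracy": [d / t if t else 0.0 for d, t in zip(diagonal, row_totals)],
        # Correct points divided by reference points of the class (column-wise).
        "P_Accuracy": [d / t if t else 0.0 for d, t in zip(diagonal, col_totals)],
        # All correct points divided by all points.
        "overall": sum(diagonal) / total,
    }


matrix = [[49, 4, 4], [2, 40, 2], [3, 3, 59]]
summary = summarize(matrix)
print([round(u, 4) for u in summary["U_Accuracy"]])  # [0.8596, 0.9091, 0.9077]
print([round(p, 4) for p in summary["P_Accuracy"]])  # [0.9074, 0.8511, 0.9077]
print(round(summary["overall"], 4))                  # 0.8916
```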

The user's accuracy column reflects false positives, or errors of commission, in which pixels are incorrectly classified as a known class when they should have been classified as something else. An example is when the classified image identifies a pixel as asphalt, but the reference data identifies it as forest: the asphalt class contains extra pixels that it should not have according to the reference data. The commission error rate (1 minus the user's accuracy) is also referred to as a Type 1 error. The data to compute user's accuracy is read from the rows of the table: the number of classified points that match the reference data is divided by the total number of points classified as that class. The Total column shows the number of points that were identified as a given class in the classified map.

The producer's accuracy row reflects false negatives, or errors of omission, in which pixels that belong to a known class according to the reference data are classified as something else. The producer's accuracy indicates how accurately the classification results meet the expectation of the creator. An example is when the reference data identifies a pixel as asphalt, but the classified image identifies it as forest: the asphalt class does not contain enough pixels according to the reference data. The omission error rate (1 minus the producer's accuracy) is also referred to as a Type 2 error. The data to compute producer's accuracy is read from the columns of the table: the number of classified points that match the reference data is divided by the total number of reference points for that class. The Total row shows the number of points that should have been identified as a given class according to the reference data.
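Commission and omission errors are the complements of user's and producer's accuracy. The sketch below computes both for each class from the example table; the name `error_rates` is hypothetical, and rows are again taken as classified values and columns as reference values.

```python
def error_rates(matrix):
    """Return a list of (commission, omission) error rates, one pair per class.

    matrix[i][j] is the number of points classified as class i whose reference class is j.
    """
    n = len(matrix)
    rates = []
    for k in range(n):
        row_total = sum(matrix[k])                       # points classified as class k
        col_total = sum(matrix[i][k] for i in range(n))  # reference points of class k
        correct = matrix[k][k]
        commission = (row_total - correct) / row_total   # 1 - user's accuracy
        omission = (col_total - correct) / col_total     # 1 - producer's accuracy
        rates.append((commission, omission))
    return rates


matrix = [[49, 4, 4], [2, 40, 2], [3, 3, 59]]
for label, (commission, omission) in zip(["c_1", "c_2", "c_3"], error_rates(matrix)):
    print(label, round(commission, 4), round(omission, 4))
```

For c_1, 8 of the 57 points classified as c_1 belong to other reference classes (commission), and 5 of the 54 reference c_1 points were classified as something else (omission).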

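The kappa statistic of agreement provides an overall assessment of the accuracy of the classification. It measures how much the classified values agree with the reference values beyond the agreement expected by chance; a value of 1 indicates perfect agreement.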
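The sketch below computes Cohen's kappa, (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of agreement (the diagonal sum divided by the total) and p_e is the agreement expected by chance from the row and column totals. Applied to the example counts, it gives 0.8357, the same value as the Kappa cell in the table above. The function name `cohens_kappa` is hypothetical.

```python
def cohens_kappa(matrix):
    """Cohen's kappa: agreement between classified and reference values beyond chance."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    # Observed agreement: share of points on the diagonal.
    observed = sum(matrix[k][k] for k in range(n)) / total
    # Chance agreement: sum over classes of (row total * column total) / total^2.
    expected = sum(
        sum(matrix[k]) * sum(matrix[i][k] for i in range(n)) for k in range(n)
    ) / total ** 2
    return (observed - expected) / (1 - expected)


matrix = [[49, 4, 4], [2, 40, 2], [3, 3, 59]]
print(round(cohens_kappa(matrix), 4))  # 0.8357
```

Kappa is lower than the overall accuracy (0.8916 in the example) because it discounts the agreement that would occur if points were assigned to classes at random in proportion to the class totals.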

Intersection over Union (IoU) is the area of overlap between the predicted segmentation and the ground truth divided by the area of union between the predicted segmentation and the ground truth. The mean IoU value is computed for each class.
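For a pixel-based segmentation, IoU for a class can be computed by counting the pixels that both the prediction and the ground truth assign to that class (the overlap) and the pixels that either one assigns to it (the union). The sketch below does this for small label grids. The names `per_class_iou` and `mean_iou` are hypothetical, and averaging the per-class values into a mean IoU is an assumption about how a summary value is formed.

```python
import math


def per_class_iou(predicted, reference, classes):
    """IoU for each class: pixels labeled k in both grids / pixels labeled k in either grid."""
    ious = {}
    for k in classes:
        overlap = union = 0
        for pred_row, ref_row in zip(predicted, reference):
            for p, r in zip(pred_row, ref_row):
                overlap += (p == k) and (r == k)
                union += (p == k) or (r == k)
        # A class absent from both grids has no defined IoU.
        ious[k] = overlap / union if union else math.nan
    return ious


def mean_iou(ious):
    """Average of the defined per-class IoU values."""
    valid = [v for v in ious.values() if not math.isnan(v)]
    return sum(valid) / len(valid)


predicted = [[1, 1, 1], [1, 2, 2]]
reference = [[1, 1, 2], [1, 2, 2]]
ious = per_class_iou(predicted, reference, classes=[1, 2])
print(ious)  # {1: 0.75, 2: 0.6666666666666666}
print(mean_iou(ious))
```

Here the single mislabeled pixel at the top right enlarges the union for both classes, which is why neither class reaches an IoU of 1.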