Available with Image Analyst license.

A deep learning model is a computer model that is trained using training samples and deep learning neural networks to perform tasks such as object detection, pixel classification, change detection, and object classification.

You can group deep learning models into three categories in ArcGIS:

- ArcGIS pretrained models

- Models trained using ArcGIS

- Custom models

ArcGIS pretrained models

ArcGIS pretrained models automate the task of digitizing and extracting geographical features from imagery and point cloud datasets.

Manually extracting features from raw data, such as digitizing footprints or generating land-cover maps, is time consuming. Deep learning automates the process and minimizes the manual interaction necessary to complete these tasks. However, training a deep learning model can be complicated, as it requires large quantities of data, computing resources, and knowledge of deep learning.

With ArcGIS pretrained models, you do not need to invest time and effort into training a deep learning model. The ArcGIS models have been trained on data from a variety of geographies. As new imagery becomes available to you, you can extract features and produce layers of GIS datasets for mapping, visualization, and analysis. The pretrained models are available on ArcGIS Living Atlas of the World if you have an ArcGIS account.

Models trained using ArcGIS

For various reasons, you may need to train your own models. An example of such a scenario is when the available ArcGIS pretrained model is trained for a geographic region that is different from your area of interest. In such situations, you can use the Train Deep Learning Model tool. Training your own model requires additional steps, but it typically gives the best result for a specific area of interest and use case. The tool trains a deep learning model using the output from the Export Training Data For Deep Learning tool. Both tools support most popular deep learning metadata formats and model architectures.

For more information about the various metadata formats, refer to the Export Training Data For Deep Learning tool documentation. For more information about the various model types, refer to the Train Deep Learning Model tool documentation.

Custom models

Deep learning model inferencing in ArcGIS is implemented on top of the Python raster function framework. Many deep learning models trained outside of ArcGIS can be used in ArcGIS for inferencing; however, this requires the inference function to be customized and the correct packages to be installed that support the model. The raster-deep-learning repository provides guidance on deep learning Python raster functions in ArcGIS, as well as how to create custom Python raster functions to integrate additional deep learning models in ArcGIS. These models are considered custom models.

Deep learning model contents

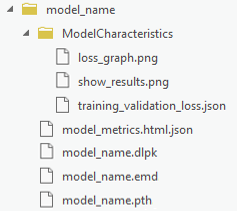

A typical model trained in ArcGIS contains the folder and files shown in the following image and listed below:

- loss_graph.png—Shows the training and validation loss for the batches processed. This is used in the model_metrics.html file.

- show_results.png—Shows sample results for the model. This is used in the model_metrics.html file.

- training_validation_loss.json—Shows the training and validation loss per epoch.

- model_metrics.html—Contains information about the trained model such as the learning rate, training and validation loss, and sample results.

- model_name.pth—Contains model weights.

Depending on the model architecture, the model folder may contain additional supporting files such as ModelConfiguration.py.
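The file list above can be checked with a few lines of Python before a model folder is shared or used for inferencing. This is a minimal sketch and not part of ArcGIS: the function name `missing_model_files` is hypothetical, and it assumes the weights file is named `<model_name>.pth`, as the `model_name.pth` entry suggests.

```python
from pathlib import Path

# Files listed above for a typical model trained in ArcGIS.
EXPECTED_FILES = (
    "loss_graph.png",
    "show_results.png",
    "training_validation_loss.json",
    "model_metrics.html",
)


def missing_model_files(model_dir, model_name):
    """Return the expected file names that are absent from model_dir.

    Assumption: the weights file is called <model_name>.pth.
    """
    folder = Path(model_dir)
    expected = list(EXPECTED_FILES) + [f"{model_name}.pth"]
    # Keep the order of the list above so the report is easy to read.
    return [name for name in expected if not (folder / name).is_file()]
```

An empty list means every file named above was found. Architecture-specific extras such as ModelConfiguration.py are deliberately not checked, because whether they exist depends on the model.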

Esri model definition file

The Esri model definition file (.emd) is a JSON format file that describes the trained deep learning model. It contains model definition parameters that are required to run the inference tools, and it should be modified by the data scientist who trained the model. There are required and optional parameters in the file, as described in the table below.

Once the .emd file is completed and verified, it can be used in inferencing multiple times, as long as the input imagery is from the same sensor as the original model input, and the classes or objects being detected are the same. For example, an .emd file that was defined with a model to detect oil well pads using Sentinel-2 satellite imagery can be used to detect oil well pads across multiple areas of interest and multiple dates using Sentinel-2 imagery.
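The reuse condition described above (same sensor, same classes) can be expressed as a small check. The following is only an illustrative sketch: `can_reuse_emd` is a hypothetical helper, not an ArcGIS function, and it assumes the sensor name is stored either as a top-level `SensorName` entry or inside an `InputRastersProps` object.

```python
def can_reuse_emd(emd, sensor_name, class_names):
    """Return True if the imagery sensor and target classes match the .emd.

    emd is the parsed .emd content (a dict). SensorName is optional, so an
    .emd without it only matches when sensor_name is None.
    """
    # Assumption: SensorName is top-level or nested under InputRastersProps.
    props = emd.get("InputRastersProps", {})
    emd_sensor = emd.get("SensorName", props.get("SensorName"))
    emd_classes = {entry["Name"] for entry in emd.get("Classes", [])}
    return emd_sensor == sensor_name and emd_classes == set(class_names)


# Hypothetical .emd content for the oil well pad example.
oil_pad_emd = {
    "InputRastersProps": {"SensorName": "Sentinel-2"},
    "Classes": [{"Value": 1, "Name": "Oil well pad", "Color": [255, 0, 0]}],
}
print(can_reuse_emd(oil_pad_emd, "Sentinel-2", ["Oil well pad"]))
```

The comparison is an exact string match, so a real workflow would probably need to normalize sensor and class names first.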

Some parameters are used by all the inferencing tools and are listed in the table below. Some parameters are only used with specific tools, such as the CropSizeFixed and BlackenAroundFeature parameters, which are only used by the Classify Objects Using Deep Learning tool.

| Model definition file parameter | Description |
|---|---|
| Framework | The name of a deep learning framework used to train a model. Examples of supported deep learning frameworks include the following:<br><br>If the model is trained using a deep learning framework that is not listed in supported frameworks, a custom inference function (a Python module) is required with the trained model, and you must set InferenceFunction to the Python module path. |
| ModelConfiguration | The name of the model configuration. The model configuration defines the model inputs and outputs, the inferencing logic, and the assumptions made about the model inputs and outputs. Existing open source deep learning workflows define standard input and output configuration and inferencing logic. ArcGIS supports the following set of predefined configurations:<br><br>If you used one of the predefined configurations, type the name of the configuration in the .emd file. If you trained the deep learning model using a custom configuration, you must describe the inputs and outputs in full in the .emd file or in the custom Python file. |
| ModelType | The type of model. The options are as follows: |
| ModelFile | The path to a trained deep learning model file. The file format depends on the model framework. For example, in TensorFlow, the model file is a .pb file. |
| Description | Information about the model. Model information can include anything to describe the model you trained. Examples include the model number and name, time of model creation, performance accuracy, and so on. |
| InferenceFunction (Optional) | The path of the inference function. An inference function understands the trained model data file and provides the inferencing logic. The following inference functions are supported in the ArcGIS Pro deep learning geoprocessing tools: |
| SensorName (Optional) | The name of the sensor used to collect the imagery from which training samples were generated. |
| RasterCount (Optional) | The number of rasters used to generate the training samples. |
| BandList (Optional) | The list of bands used in the source imagery. |
| ImageHeight (Optional) | The number of rows in the image being classified or processed. |
| ImageWidth (Optional) | The number of columns in the image being classified or processed. |
| ExtractBands (Optional) | The band indexes or band names to extract from the input imagery. |
| Classes (Optional) | Information about the output class categories or objects. |
| DataRange (Optional) | The range of data values if scaling or normalization was done in preprocessing. |
| ModelPadding (Optional) | The amount of padding to add to the input imagery for inferencing. |
| BatchSize (Optional) | The number of training samples to be used in each iteration of the model. |
| PerProcessGPUMemoryFraction (Optional) | The fraction of GPU memory to allocate for each iteration in the model. The default is 0.95, or 95 percent. |
| MetaDataMode (Optional) | The format of the metadata labels used for the image chips. |
| ImageSpaceUsed (Optional) | The type of reference system used to train the model. The options are as follows: |
| WellKnownBandNames (Optional) | The names of each input band, in order of band index. Bands can then be referenced by these names in other tools. |
| AllTilesStats | The statistics of each band in the training data. |
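Because an .emd file is plain JSON, the parameters in the table can be checked before the file is passed to an inference tool. The sketch below is a hedged illustration rather than an Esri utility: `missing_emd_keys` is a hypothetical name, and the set of required keys is an assumption limited to Framework, ModelConfiguration, ModelType, and ModelFile. Description and AllTilesStats are also not marked optional in the table, but whether they are strictly required is not stated, so the sketch does not insist on them.

```python
import json

# Assumption: these four unmarked parameters are treated as required.
REQUIRED_KEYS = ("Framework", "ModelConfiguration", "ModelType", "ModelFile")


def missing_emd_keys(emd_text):
    """Parse .emd JSON text and return the required keys it lacks.

    json.loads raises json.JSONDecodeError for malformed JSON, for
    example when a trailing comma precedes a closing brace.
    """
    emd = json.loads(emd_text)
    return [key for key in REQUIRED_KEYS if key not in emd]


# Hypothetical, deliberately incomplete .emd content.
sample = '{"Framework": "TensorFlow", "ModelFile": "tree_detection.pb"}'
print(missing_emd_keys(sample))
```

To check a file on disk, read it first, for example with `Path(emd_path).read_text()`, and pass the text to the function.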

The following is an example of a model definition file that uses a standard model configuration:

    {
        "Framework": "TensorFlow",
        "ModelConfiguration": "ObjectDetectionAPI",
        "ModelFile": "C:\\ModelFolder\\ObjectDetection\\tree_detection.pb",
        "ModelType": "ObjectDetection",
        "ImageHeight": 850,
        "ImageWidth": 850,
        "ExtractBands": [0, 1, 2],
        "Classes": [
            {
                "Value": 0,
                "Name": "Tree",
                "Color": [0, 255, 0]
            }
        ]
    }

The following is an example of a model definition file with additional optional parameters in the configuration:

    {
        "Framework": "PyTorch",
        "ModelConfiguration": "FasterRCNN",
        "ModelFile": "C:\\ModelFolder\\ObjectDetection\\river_detection.pb",
        "ModelType": "ObjectDetection",
        "Description": "This is a river detection model for imagery",
        "ImageHeight": 448,
        "ImageWidth": 448,
        "ExtractBands": [0, 1, 2, 3],
        "DataRange": [0.1, 1.0],
        "ModelPadding": 64,
        "BatchSize": 8,
        "PerProcessGPUMemoryFraction": 0.8,
        "MetaDataMode": "PASCAL_VOC_rectangles",
        "ImageSpaceUsed": "MAP_SPACE",
        "Classes": [
            {
                "Value": 1,
                "Name": "River",
                "Color": [0, 255, 0]
            }
        ],
        "InputRastersProps": {
            "RasterCount": 1,
            "SensorName": "Landsat 8",
            "BandNames": [
                "Red",
                "Green",
                "Blue",
                "NearInfrared"
            ]
        },
        "AllTilesStats": [
            {
                "BandName": "Red",
                "Min": 1,
                "Max": 60419,
                "Mean": 7669.720049855654,
                "StdDev": 1512.7546387966217
            },
            {
                "BandName": "Green",
                "Min": 1,
                "Max": 50452,
                "Mean": 8771.2498195125681,
                "StdDev": 1429.1063589515179
            },
            {
                "BandName": "Blue",
                "Min": 1,
                "Max": 47305,
                "Mean": 9306.0475897744163,
                "StdDev": 1429.380049936676
            },
            {
                "BandName": "NearInfrared",
                "Min": 1,
                "Max": 60185,
                "Mean": 17881.499184561973,
                "StdDev": 5550.4055277121679
            }
        ]
    }

Deep learning model package

A deep learning model package (.dlpk) contains the files and data required to run deep learning inferencing tools for object detection or image classification. The package can be uploaded to your portal as a DLPK item and used as the input to deep learning raster analysis tools.

Deep learning model packages must contain an Esri model definition file (.emd) and a trained model file. The trained model file extension depends on the framework you used to train the model. For example, if you trained your model using TensorFlow, the model file will be a .pb file, while a model trained using Keras will generate an .h5 file. Depending on the model framework and options you used to train your model, you may need to include a Python raster function (.py) or additional files. You can include multiple trained model files in a single deep learning model package.
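The packaging requirement above can be sketched as a check on the list of files intended for a package. This is an illustrative helper only: `check_package_files` is a hypothetical name, and the set of model file extensions is an assumption limited to those mentioned in this document (.pb, .h5, and .pth). Other frameworks may use different extensions.

```python
from pathlib import PurePath

# Assumption: only the model file extensions named in this document.
MODEL_EXTENSIONS = {".pb", ".h5", ".pth"}


def check_package_files(file_names):
    """Return a list of problems found in a proposed .dlpk file list."""
    suffixes = [PurePath(name).suffix.lower() for name in file_names]
    problems = []
    if ".emd" not in suffixes:
        problems.append("missing .emd file")
    # Multiple trained model files are allowed, so one match is enough.
    if not any(suffix in MODEL_EXTENSIONS for suffix in suffixes):
        problems.append("no trained model file found")
    return problems


# Hypothetical file list for a TensorFlow-based package.
print(check_package_files(["tree_detection.emd", "tree_detection.pb"]))
```

An empty list means both requirements are met. The check looks only at file names and does not verify that the .emd file actually references the model file that is present.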

You can open most packages in any version of ArcGIS Pro. By default, the contents of a package are stored in the <User Documents>\ArcGIS\Packages folder. You can change this location in the Share and download options. If the ArcGIS Pro version used to open a package does not support the functionality in the package, the functionality will not be available.

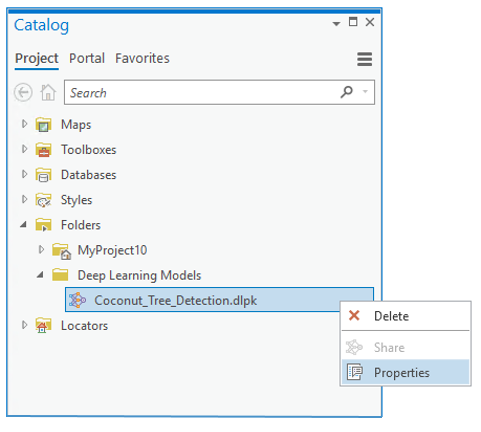

To view or edit the properties of a .dlpk package, or to add or remove files from a .dlpk package, right-click the .dlpk package in the Catalog pane and click Properties.

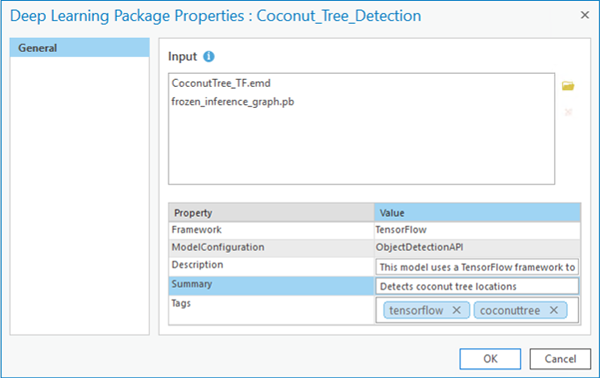

Properties include the following information:

- Input—The .emd file, trained model file, and any additional files that may be required to run the inferencing tools.

- Framework—The deep learning framework used to train the model.

- ModelConfiguration—The type of model training performed (object detection, pixel classification, or feature classification).

- Description—A description of the model. This is optional and editable.

- Summary—A brief summary of the model. This is optional and editable.

- Tags—Any tags used to identify the package. This is useful for .dlpk package items stored on your portal.

Any property that is edited in the Properties window is updated when you click OK. If the .dlpk package item is being accessed from your portal in the Catalog pane, the portal item is updated.

For information about how to create a .dlpk package, see Share a deep learning model package.