Available with Image Analyst license.

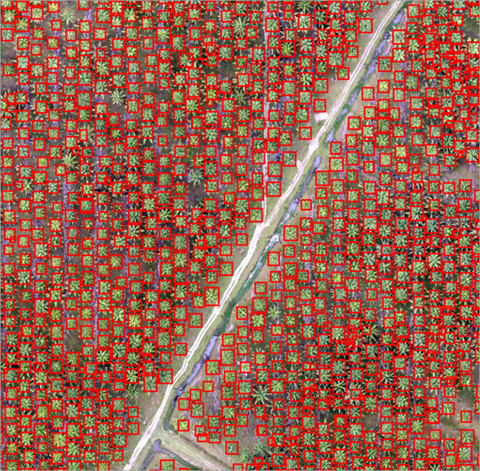

To detect objects on input imagery, use the Detect Objects Using Deep Learning tool, which generates bounding boxes around the objects or features in an image to identify their location. The following image is an example that detects palm trees using the deep learning tools in ArcGIS:

A typical object detection workflow using deep learning consists of three main steps:

- Create and export training samples. Create training samples using the Label Objects for Deep Learning pane, and use the Export Training Data For Deep Learning tool to convert the samples to deep learning training data.

- Train the deep learning model. Use the Train Deep Learning Model tool to train a model using the training samples you created in the previous step.

- Perform inferencing. Use the Detect Objects Using Deep Learning tool with the model you trained in the previous step.

Once the object detection workflow is complete, perform quality control analysis and review the results. To review the results, use the Attributes pane. To validate the accuracy of the results, use the Compute Accuracy For Object Detection tool. If you are not satisfied with the results, you can improve the model by adding more training samples and performing the workflow again.

For more examples, supported metadata formats, and model type architectures, see Deep learning model architectures.

Create and export training samples

Create a training schema and training samples, and export the training data.

If you have existing training samples in a raster dataset or a feature class, you can use the Export Training Data For Deep Learning tool and proceed to the Train a deep learning model section below.

- Create a training schema.

- Add the image to be used to generate the training samples to a map.

- In the Contents pane, select the image that you added.

- Click the Imagery tab.

- Click Deep Learning Tools, and click Label Objects for Deep Learning.

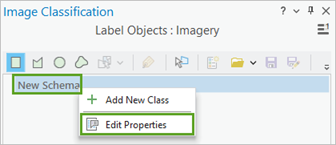

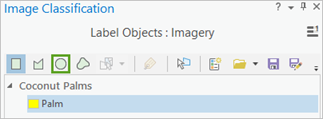

The Image Classification pane appears with a blank schema.

- In the Image Classification pane, right-click New Schema and click Edit Properties.

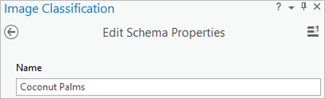

- Provide a name for the schema.

- Click Save.

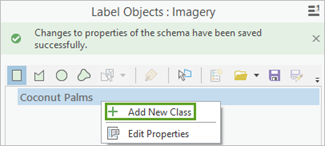

The schema is renamed in the Image Classification pane, and you can add classes to it.

- Add a new class to the schema.

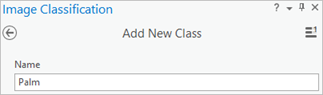

- Right-click the schema you created, and click Add New Class.

- Provide a name for the class.

- Provide a value for the class.

The value cannot be 0.

- Optionally, choose a color for the class.

- Click OK.

The class is added to the schema in the Image Classification pane.

- Optionally, repeat steps 2a through 2e to add more classes.

- Create training samples.

- Choose a drawing tool, such as the circle, and use it to draw a boundary around an object.

This palm tree training sample was created using the Circle tool. If you are not sure about an object, do not include it. Boundaries can overlap.

- Use the drawing tools to select more features.

Since the final model will take into account the size of the objects you identify, you can select objects of various sizes.

These training samples were created in various areas and have different sizes to show a variety of samples.

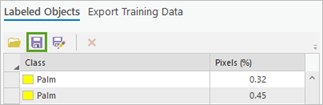

- When you finish creating training samples, click Save in the Image Classification pane.

- In the Save current training sample window, browse to the geodatabase.

- Provide a name for the feature class, and click Save.

Before you can train the model, you must export the training samples as image chips. An image chip is a small image that contains one or more objects to be detected.

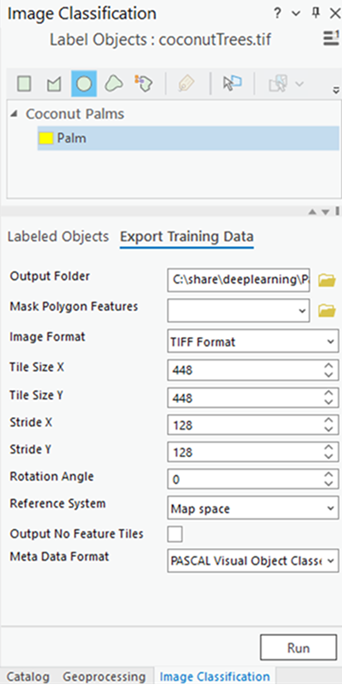

- In the Image Classification pane, click the Export Training Data tab and do the following:

- Provide the Output Folder value.

This is the path and name of the folder where the output image chips and metadata will be stored.

- Optionally, provide the Mask Polygon value.

This is a polygon feature class that delineates the area where image chips can be created. Only image chips that are completely within the polygon will be created.

- Optionally, choose the Image Format value for the chips.

Supported formats are TIFF, PNG, JPEG, and MRF.

- Optionally, specify Tile Size X and Tile Size Y values.

These are the x dimension and y dimension of the image chips. The default values typically produce good results. Generally the same size is specified for both dimensions.

- Optionally, specify Stride X and Stride Y values.

This is the distance to move in the x direction and y direction when creating the next image chip. When stride is equal to tile size, there is no overlap. When stride is equal to half the tile size, there is 50 percent overlap.

- Optionally, specify the Rotation Angle value.

The image chip is rotated at the specified angle to create additional image chips. An additional image chip is created for every angle until the image chip has fully rotated. For example, if you specify a rotation angle of 45 degrees, eight image chips are created: the original chip plus seven additional chips rotated at 45, 90, 135, 180, 225, 270, and 315 degrees.

- Optionally, choose the Reference System value.

This is the reference system that will be used to interpret the input image. The options are Map Space or Pixel Space. Map space uses a map-based coordinate system, which is the default when the input raster has a spatial reference defined. Pixel space uses an image space coordinate system, which has no rotation or distortion. The Pixel Space option is recommended when the input imagery is oriented or oblique. The specified reference system must match the reference system used to train the deep learning model.

- Optionally, check the Output No Feature Tiles check box.

When this option is checked, all image chips are exported, including those that do not capture training samples. When this option is left unchecked, only image chips that capture training samples are exported. The default is unchecked.

- Optionally, choose the Meta Data Format value.

The metadata formats supported for object detection are Labeled Tiles, Pascal Visual Object Classes, and RCNN Masks.

- Click Run to export the training data.
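
The relationship between tile size, stride, and rotation angle described in the steps above can be checked with a little arithmetic. The following is a minimal sketch in plain Python, not a call into the export tool: the function names are hypothetical, and the assumption that partial chips at the image edge are dropped is made for illustration only and may differ from how the tool behaves.

```python
def overlap_fraction(tile_size: int, stride: int) -> float:
    """Fraction of a chip shared with the next chip along one axis."""
    if tile_size <= 0 or stride <= 0:
        raise ValueError("tile_size and stride must be positive")
    # A stride equal to or larger than the tile size means no overlap.
    return max(0.0, 1.0 - stride / tile_size)


def chips_along_axis(image_size: int, tile_size: int, stride: int) -> int:
    """Count full chips along one axis.

    Assumption (illustration only): chips that would extend past the
    image edge are not created.
    """
    if tile_size <= 0 or stride <= 0:
        raise ValueError("tile_size and stride must be positive")
    if image_size < tile_size:
        return 0
    return (image_size - tile_size) // stride + 1


def rotation_angles(rotation_angle: int) -> list[int]:
    """Angles (in degrees) of the additional rotated chips.

    The unrotated chip at 0 degrees is not included in the list.
    """
    if rotation_angle <= 0:
        return []
    return list(range(rotation_angle, 360, rotation_angle))
```

With a 256-pixel tile, `overlap_fraction(256, 256)` is 0.0 and `overlap_fraction(256, 128)` is 0.5, matching the stride description above. `rotation_angles(45)` returns the seven angles from 45 to 315 degrees, which together with the unrotated chip gives eight chips.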


Train a deep learning model

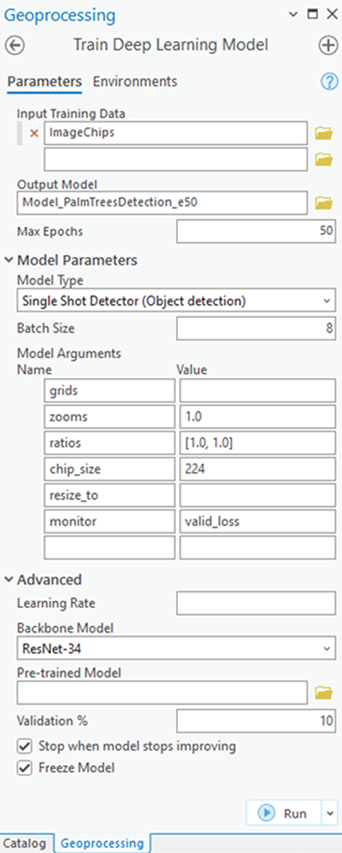

The Train Deep Learning Model tool uses the labeled image chips to determine the combinations of pixels in each image that represent the object. You will use these training samples to train a deep learning model. In the tool, only the Input Training Data and Output Model parameters are required.

Since the input training data is based on the Meta Data Format value, an appropriate Model Type value is assigned by default. For example, if you specified the Pascal Visual Object Classes metadata format in the export process, the Model Type value will be Single Shot Detector. The Model Type drop-down list also updates to show the model types that support the Pascal Visual Object Classes metadata format. The Batch Size, Model Arguments, and Backbone Model parameters are populated based on the Model Type value.

- Open the Train Deep Learning Model tool.

- For the Input Training Data parameter, browse to and select the training data folder where the image chips are stored.

- For the Output Model parameter, provide the file path and name of the folder where the output model will be saved after training.

- Optionally, specify a value for the Max Epochs parameter.

An epoch is a full cycle through the training dataset. During each epoch, the training dataset you stored in the image chips folder is passed forward and backward through the neural network one time. Generally, 20 to 50 epochs are used for initial review. The default value is 20. If the model can be further improved, you can retrain it using the same tool.

- Optionally, change the Model Type parameter value in the drop-down list.

The model type determines the deep learning algorithm and neural network that will be used to train the model, such as the Single Shot Detector (SSD) option. For more information about models, see Deep Learning Models in ArcGIS. For documentation examples, supported metadata, and model architecture details, see Deep learning model architectures.

- Optionally, change the Model Arguments parameter value.

The Model Arguments parameter is populated with information from the model definition. These arguments vary depending on the model architecture that was specified. A list of the model arguments supported by the tool is available in the Model Arguments parameter.

- Optionally, set the Batch Size parameter value.

This parameter determines the number of training samples that will be processed at a time. The appropriate batch size depends on factors such as the number of image chips, the GPU memory (if a GPU is used), and the learning rate (if a custom value is used). Typically, the default batch size produces good results.

- Optionally, provide the Learning Rate parameter value.

If no value is provided, the optimal learning rate is extracted from the learning curve during the training process.

- Optionally, specify the Backbone Model parameter value.

The default value is based on the model architecture. You can change the default backbone model using the drop-down list.

- Optionally, provide the Pre-trained Model parameter value.

A pretrained model with similar classes can be fine-tuned to fit the new model. The pretrained model must have been trained with the same model type and backbone model that will be used to train the new model.

- Optionally, change the Validation % parameter value.

This is the percentage of training samples that will be used to validate the model. This value depends on various factors such as the number of training samples and the model architecture. Generally, with a small amount of training data, 10 percent to 20 percent is appropriate for validation. If there is a large amount of training data, such as several thousand samples, a lower percentage such as 2 percent to 5 percent of the data is appropriate for validation. The default value is 10.

- Optionally, check the Stop when model stops improving parameter.

When checked, the model training stops when the model is no longer improving regardless of the Max Epochs value specified. The default is checked.

- Optionally, check the Freeze Model parameter.

This parameter specifies whether the backbone layers in the pretrained model will be frozen so that the weights and biases remain as originally designed. If you check this parameter, the backbone layers are frozen, and their predefined weights and biases are not altered. If you uncheck this parameter, the backbone layers are not frozen, and the weights and biases of the Backbone Model parameter value can be altered to fit the training samples. Unfreezing takes more time to process but typically produces better results. The default is checked.

- Click Run to start the training.

The following image shows the Train Deep Learning Model pane with Single Shot Detector (Object detection) as the Model Type parameter value.

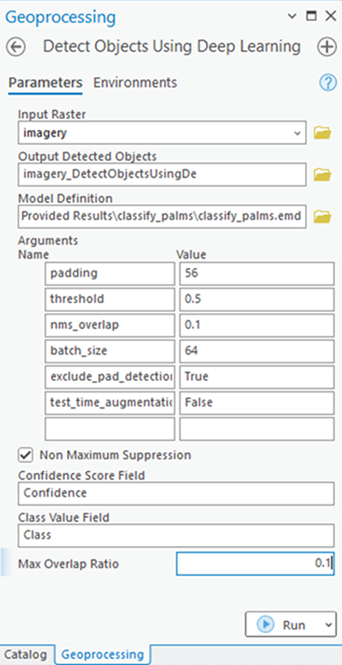
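
To get a feel for how the Validation %, Batch Size, and Max Epochs values interact, the sketch below estimates how many chips are held out for validation and how many batches are processed during training. It is plain Python with hypothetical function names. The rounding of the split and the handling of a final partial batch are assumptions made for illustration, not a description of the tool's internals.

```python
import math


def split_chips(total_chips: int, validation_percent: float) -> tuple[int, int]:
    """Return (training_chips, validation_chips) for a percentage split.

    Assumption (illustration only): the validation count is rounded down.
    """
    if total_chips < 0:
        raise ValueError("total_chips must not be negative")
    if not 0 <= validation_percent < 100:
        raise ValueError("validation_percent must be in the range [0, 100)")
    validation_chips = int(total_chips * validation_percent / 100)
    return total_chips - validation_chips, validation_chips


def batches_per_epoch(training_chips: int, batch_size: int) -> int:
    """Batches needed to pass every training chip through the network once.

    Assumption (illustration only): a final partial batch is still processed.
    """
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return math.ceil(training_chips / batch_size)


def total_batches(training_chips: int, batch_size: int, max_epochs: int) -> int:
    """Upper bound on batches processed if training runs for all epochs."""
    return batches_per_epoch(training_chips, batch_size) * max_epochs
```

For example, 1,000 exported chips with the default validation value of 10 give `split_chips(1000, 10)` equal to `(900, 100)`. With a hypothetical batch size of 16, each epoch processes 57 batches, so 20 epochs process at most 1,140 batches. Training may end sooner when the Stop when model stops improving parameter is checked.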

Perform inferencing

To perform inferencing, you use the resulting deep learning model to analyze new data. Inferencing is the process in which information learned during the deep learning training process is used to detect similar features in the datasets. You will use the Detect Objects Using Deep Learning tool since you are performing an object detection workflow.

- Open the Detect Objects Using Deep Learning tool.

- For Input Raster, browse to and select the input raster data within which you want to find objects.

The input can be a single raster dataset, multiple rasters in a mosaic dataset or an image service, a folder of images, or a feature class with image attachments.

- For Output Detected Objects, type the name of the output feature class.

This output feature class will contain geometries that enclose the objects detected in the input image.

- Specify the Model Definition value (*.emd or *.dlpk).

This is the model file that contains the training output. It is the output of the Train a deep learning model section above.

- The Arguments parameter is populated from the Model Definition parameter value. These arguments will vary, depending on which model architecture is being used. In this example, the Single Shot Detector model is used, so the following arguments are populated. You can accept the default values or edit them.

- padding—The number of pixels at the border of image tiles from which predictions are blended for adjacent tiles. Increase the value to smooth the output, which reduces artifacts. The maximum value of the padding can be half the tile size value.

- threshold—The detections that have a confidence score higher than this amount are included in the result. The allowed values range from 0 to 1.0.

- batch_size—The number of image tiles processed in each step of the model inference. This is dependent on the memory size of the graphics card.

- nms_overlap—The maximum overlap ratio for two overlapping features, which is defined as the ratio of the intersection area over the union area. The default is 0.1.

- exclude_pad_detections—When this is true, the tool filters potentially truncated detections near the edges that are in the padded region of image chips.

- test_time_augmentation—Test time augmentation is performed while predicting. If true, the predictions of flipped and rotated variants of the input image are merged into the final output.

- Optionally, check the Non Maximum Suppression check box.

When this is checked, duplicate objects that are detected are removed from the output, and you must provide information for the following three parameters:

- Confidence Score Field—The field name in the feature class that will contain the confidence scores as output by the object detection method.

- Class Value Field—The name of the class value field in the input feature class.

- Max Overlap Ratio—The maximum overlap ratio for two overlapping features, which is defined as the ratio of intersection area over union area.

- Click Run to start the inferencing.
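
The threshold argument and the overlap ratio used for non maximum suppression can be illustrated outside the tool. The following is a minimal sketch in plain Python, assuming axis-aligned boxes and a simple greedy, class-agnostic suppression. The `Detection` type and function names are hypothetical, and the tool's own implementation may differ.

```python
from typing import NamedTuple


class Detection(NamedTuple):
    """Hypothetical axis-aligned detection with a confidence score."""
    xmin: float
    ymin: float
    xmax: float
    ymax: float
    score: float


def iou(a: Detection, b: Detection) -> float:
    """Overlap ratio: intersection area divided by union area."""
    inter_w = max(0.0, min(a.xmax, b.xmax) - max(a.xmin, b.xmin))
    inter_h = max(0.0, min(a.ymax, b.ymax) - max(a.ymin, b.ymin))
    inter = inter_w * inter_h
    area_a = (a.xmax - a.xmin) * (a.ymax - a.ymin)
    area_b = (b.xmax - b.xmin) * (b.ymax - b.ymin)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_and_suppress(
    detections: list[Detection], threshold: float, max_overlap: float
) -> list[Detection]:
    """Keep confident detections, then drop overlapping duplicates.

    Detections scoring above `threshold` are visited from highest to
    lowest score. A detection is kept only if its overlap ratio with
    every detection already kept does not exceed `max_overlap`.
    """
    candidates = sorted(
        (d for d in detections if d.score > threshold),
        key=lambda d: d.score,
        reverse=True,
    )
    kept: list[Detection] = []
    for candidate in candidates:
        if all(iou(candidate, k) <= max_overlap for k in kept):
            kept.append(candidate)
    return kept
```

A lower overlap ratio removes more near-duplicate detections, while a higher value tolerates boxes that overlap more, which can matter when objects such as tree crowns genuinely touch or overlap.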

In the result image below, the detected palm trees are identified with a bounding polygon box.

For a detailed object detection example, see Use deep learning to assess palm tree health.