Available with Image Analyst license.

The Train Deep Learning Model wizard is an assisted workflow that helps you train a deep learning model using the training data that you collected. Once you have training data, open the Train Deep Learning Model wizard from the Deep Learning Tools drop-down menu on the Imagery tab.


There are three pages for this wizard: Get Started, Train, and Result.

To use the Train Deep Learning Model wizard, complete the following steps:

- Click the Imagery tab.

- Click the Deep Learning Tools drop-down menu and choose Train Deep Learning Model. The Train Deep Learning Model wizard pane appears.

Get Started

On the Get Started page of the wizard, you choose how the deep learning model will be trained.

- Specify how you want to train the model.

- Set the parameters automatically—The model type, parameters, and hyperparameters are set automatically to build the best model.

- Specify my own parameters—You set the model type, parameters, and hyperparameters to build the model.

- Click the Next button to move to the Train page.

Train

On the Train page, you set the parameters for training. The available parameters vary depending on the option you chose on the Get Started page.

- Specify the required parameters.

Input Training Data

The folders containing the image chips, labels, and statistics required to train the model. This is the output from the Export Training Data For Deep Learning tool.

Output Model

(Automatic training)

The output trained model that will be saved as a deep learning package (.dlpk file).

Output Folder

(Manual training)

The output folder location where the trained model will be stored.

- Optionally, set other parameters.

Automatic training parameters

Pretrained Model

A pretrained model that will be used to fine-tune the new model. The input is an Esri model definition file (.emd) or a deep learning package file (.dlpk).

A pretrained model with similar classes can be fine-tuned to fit the new model. The pretrained model must have been trained with the same model type and backbone model that will be used to train the new model.

Total Time Limit (Hours)

The total time limit, in hours, for AutoDL model training. The default is 2 hours.

Auto DL Mode

Specifies the AutoDL mode that will be used and how intensive the AutoDL search will be.

- Basic—Train all the selected networks without hyperparameter tuning.

- Advanced—Perform hyperparameter tuning on the top two performing models.
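The difference between the two modes can be sketched as follows. This is a hypothetical illustration, not the ArcGIS AutoDL implementation: `run_autodl` and `score` are made-up names, `score` stands in for training one network and returning a validation score (higher is better), and the hyperparameter search space is an assumption.

```python
from typing import Callable, Dict, Sequence


def run_autodl(networks: Sequence[str],
               score: Callable[[str, dict], float],
               mode: str = "basic",
               search_space: Sequence[dict] = ()) -> Dict[str, float]:
    """Return the best validation score found for each network.

    `score(network, hyperparams)` is a hypothetical stand-in that trains and
    evaluates one model; an empty dict means default hyperparameters.
    """
    if mode not in ("basic", "advanced"):
        raise ValueError("mode must be 'basic' or 'advanced'")
    # Both modes first train every selected network with default settings.
    results = {net: score(net, {}) for net in networks}
    if mode == "basic":
        return results  # Basic: no hyperparameter tuning.

    # Advanced: tune only the two best-performing networks.
    top_two = sorted(results, key=results.get, reverse=True)[:2]
    for net in top_two:
        for params in search_space:
            results[net] = max(results[net], score(net, params))
    return results
```

In Advanced mode the extra training runs are spent only on the two strongest candidates, which is why it is more intensive than Basic yet still bounded.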

Neural Networks

Specifies the architectures that will be used to train the model.

By default, all the networks will be used.

Save Evaluated Models

Specifies whether all evaluated models will be saved.

- Checked—All evaluated models will be saved.

- Unchecked—Only the best performing model will be saved. This is the default.

Manual training parameters

Max Epochs

The maximum number of epochs for which the model will be trained. A value of 1 means the dataset will be passed forward and backward through the neural network one time. The default value is 20.
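As a rough illustration of what Max Epochs controls, the arithmetic below counts weight updates for a run that is not stopped early. It assumes one update per batch and that a final partial batch is still processed; the function name is hypothetical.

```python
import math


def optimizer_steps(num_train_samples: int, batch_size: int, max_epochs: int) -> int:
    """Upper bound on weight updates for a run that is not stopped early.

    Assumes one update per batch and that a final partial batch is kept.
    """
    # One epoch passes every training sample forward and backward once.
    steps_per_epoch = math.ceil(num_train_samples / batch_size)
    return steps_per_epoch * max_epochs


print(optimizer_steps(1000, 64, 20))  # prints 320 (16 batches per epoch x 20 epochs)
```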

Pre-trained Model

A pretrained model that will be used to fine-tune the new model. The input is an Esri model definition file (.emd) or a deep learning package file (.dlpk).

A pretrained model with similar classes can be fine-tuned to fit the new model. The pretrained model must have been trained with the same model type and backbone model that will be used to train the new model.

Model Type

Specifies the model type that will be used to train the deep learning model.

For more information about the various model types, see Deep learning model architectures.

Model Arguments

The information from the Model Type parameter will be used to populate this parameter. These arguments vary depending on the model architecture. ArcGIS pretrained models and custom deep learning models may have additional arguments that the tool supports.

For more information about which arguments are available for each model type, see Deep learning arguments.

Data Augmentation

Specifies the type of data augmentation that will be used.

Data augmentation is a technique for artificially enlarging the training set by creating modified copies of the existing data.

- Default—The default data augmentation parameters and values will be used. The default data augmentation methods included are crop, dihedral_affine, brightness, contrast, and zoom. These default values usually work well for satellite imagery.

- None—No data augmentation will take place.

- Custom—Specify user-defined data augmentation values in the Augmentation Parameters parameter.

- File—Specify fastai transforms for data augmentation of training and validation datasets. These are specified in a .json file named transforms.json, which is in the same folder as the training data. For more information about the different transformations, see fastai vision transforms.

Augmentation Parameters

Specifies the value for each transform when the Data Augmentation parameter is set to Custom.

- rotate—The image will be randomly rotated (in degrees) with a probability (p). If degrees is a range (a,b), a value will be uniformly assigned from a to b. The default value is 30.0; 0.5.

- brightness—The brightness of the image will be randomly adjusted depending on the value of change, with a probability (p). A change of 0 will transform the image to darkest, and a change of 1 will transform the image to lightest. A change of 0.5 will not adjust the brightness. If change is a range (a,b), the augmentation will uniformly assign a value from a to b. The default value is (0.4,0.6); 1.0.

- contrast—The contrast of the image will be randomly adjusted depending on the value of scale, with a probability (p). A scale of 0 will transform the image to grayscale, and a scale greater than 1 will transform the image to super contrast. A scale of 1 does not adjust the contrast. If scale is a range (a,b), the augmentation will uniformly assign a value from a to b. The default value is (0.75, 1.5); 1.0.

- zoom—The image will be randomly zoomed in depending on the value of scale. The zoom value is in the form scale(a,b); p, in which p is the probability. The default value is (1.0, 1.2); 1.0. Only a scale greater than 1.0 will zoom in on the image. If scale is a range (a,b), a value will be uniformly assigned from a to b.

- crop—The image will be randomly cropped. The crop value is in the form size;p;row_pct;col_pct, in which p is the probability. The position is given by (col_pct, row_pct), with col_pct and row_pct normalized between 0 and 1. If col_pct or row_pct is a range (a,b), a value will be uniformly assigned from a to b. The default value is chip_size;1.0; (0, 1); (0, 1), in which chip_size is the chip size (224 by default).
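The values above share a `;`-separated pattern: a magnitude that is either a single number or a range `(a,b)`, followed by a probability p (crop adds row_pct and col_pct fields). A minimal sketch of how such a string could be parsed and sampled, assuming each field is a number, a range, or the `chip_size` placeholder. The function names are hypothetical; this is not how ArcGIS parses the parameter internally.

```python
import random
from typing import List, Optional, Tuple, Union

Value = Union[float, Tuple[float, float], str]


def parse_augmentation_value(text: str) -> List[Value]:
    """Split a value such as '(0.4,0.6); 1.0' into its ';'-separated fields."""
    fields: List[Value] = []
    for part in text.split(";"):
        part = part.strip()
        if part.startswith("(") and part.endswith(")"):
            a, b = (float(x) for x in part[1:-1].split(","))
            fields.append((a, b))  # a range (a, b)
        else:
            try:
                fields.append(float(part))
            except ValueError:
                fields.append(part)  # e.g. the 'chip_size' placeholder
    return fields


def draw(value: Value, rng: random.Random) -> float:
    """A range (a, b) is sampled uniformly from a to b; a number is used as-is."""
    if isinstance(value, tuple):
        return rng.uniform(*value)
    return float(value)


def maybe_apply(magnitude: Value, p: float, rng: random.Random) -> Optional[float]:
    """With probability p return a sampled magnitude, otherwise None (skip)."""
    if rng.random() < p:
        return draw(magnitude, rng)
    return None


rng = random.Random(0)
magnitude, p = parse_augmentation_value("(0.4,0.6); 1.0")  # brightness default
print(maybe_apply(magnitude, p, rng))  # some value between 0.4 and 0.6
```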

Batch Size

The number of training samples that will be processed at one time.

Increasing the batch size can improve tool performance; however, as the batch size increases, more memory is used.

When not enough GPU memory is available for the batch size set, the tool tries to estimate and use an optimum batch size. If an out of memory error occurs, use a smaller batch size.
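The tool's own batch size estimation is internal. As a simpler, swapped-in illustration of the "use a smaller batch size" advice, the sketch below halves the batch size and retries after an out-of-memory failure. `train` is a hypothetical callable, and Python's `MemoryError` stands in for the GPU out-of-memory error a real framework would raise.

```python
from typing import Callable


def fit_with_batch_fallback(train: Callable[[int], None], batch_size: int,
                            min_batch_size: int = 1) -> int:
    """Try train(batch_size); on an out-of-memory error, halve it and retry.

    Returns the batch size that succeeded.
    """
    while batch_size >= min_batch_size:
        try:
            train(batch_size)
            return batch_size
        except MemoryError:
            batch_size //= 2  # smaller batches need less memory
    raise MemoryError("no batch size fits in available memory")


def fake_train(batch_size: int) -> None:
    # Hypothetical: pretend anything above 12 samples per batch runs out of memory.
    if batch_size > 12:
        raise MemoryError


print(fit_with_batch_fallback(fake_train, 64))  # prints 8
```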

Validation %

The percentage of training samples that will be used for validating the model. The default value is 10.
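A minimal sketch of a Validation % split, assuming the samples are shuffled with a fixed seed and the validation count is rounded down. The tool's exact sampling and rounding are not documented here, and the function name is hypothetical.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_train_validation(samples: Sequence[T], validation_pct: float = 10,
                           seed: int = 42) -> Tuple[List[T], List[T]]:
    """Hold out validation_pct percent of the samples (rounded down)."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # avoid a spatially ordered split
    n_val = int(len(shuffled) * validation_pct / 100)
    return shuffled[n_val:], shuffled[:n_val]


train, valid = split_train_validation(list(range(250)))
print(len(train), len(valid))  # prints 225 25
```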

Chip Size

The size of the image used to train the model. Images will be cropped to the specified chip size. If the image is smaller than the parameter value, the image size will be used. The default is 224 pixels.

Resize To

Resizes the image chips. Once a chip is resized, pixel blocks of chip size will be cropped and used for training. This parameter applies to object detection (PASCAL VOC), object classification (labeled tiles), and super-resolution data only.

The resize value is often half the chip size value. If this resize value is less than the chip size value, the resize value is used to create the pixel blocks for training.
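The Chip Size and Resize To rules above can be combined into one small function. This is a sketch under stated assumptions: chips are square, sizes are edge lengths in pixels, and `resize_to` only matters for the data types listed under Resize To. The function name is hypothetical.

```python
from typing import Optional


def training_block_size(image_size: int, chip_size: int = 224,
                        resize_to: Optional[int] = None) -> int:
    """Edge length, in pixels, of the square blocks used for training."""
    # Resize To (if set) rescales the exported chip before cropping.
    available = resize_to if resize_to is not None else image_size
    # Blocks of Chip Size are cropped, but a smaller image (or a resize value
    # below Chip Size) is used at its own size instead.
    return min(chip_size, available)


print(training_block_size(256))                 # prints 224: cropped to Chip Size
print(training_block_size(128))                 # prints 128: image smaller than Chip Size
print(training_block_size(448, resize_to=112))  # prints 112: resize value below Chip Size
```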

Learning Rate

The rate at which existing information will be overwritten with newly acquired information throughout the training process. If no value is specified, the optimal learning rate will be extracted from the learning curve during the training process.
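One common way to extract a learning rate from a learning curve is the learning-rate range test popularized by fastai's `lr_find`: the learning rate is increased step by step while the loss is recorded, and a rate where the loss falls fastest is chosen. The sketch below implements that steepest-descent rule in simplified form; whether ArcGIS uses this exact rule is an assumption, and the loss values are made up for illustration.

```python
from typing import Sequence


def suggest_learning_rate(lrs: Sequence[float], losses: Sequence[float]) -> float:
    """Pick the learning rate at the start of the steepest loss decrease."""
    if len(lrs) != len(losses) or len(lrs) < 2:
        raise ValueError("need at least two matching (learning rate, loss) points")
    # With geometrically spaced learning rates, the change in loss between
    # neighbours approximates the slope of loss against log(lr).
    slopes = [losses[i + 1] - losses[i] for i in range(len(losses) - 1)]
    steepest = min(range(len(slopes)), key=lambda i: slopes[i])
    return lrs[steepest]


lrs = [1e-5, 1e-4, 1e-3, 1e-2, 1e-1]
losses = [2.0, 1.9, 1.2, 1.1, 3.0]  # made-up curve for illustration
print(suggest_learning_rate(lrs, losses))  # prints 0.0001
```

Rates past the minimum of the curve make the loss diverge, which is why the rule looks for the steepest drop rather than the lowest loss.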

Backbone Model

Specifies the preconfigured neural network that will be used as the architecture for training the new model. This method is known as transfer learning.

Additionally, supported convolutional neural networks from PyTorch Image Models (timm) can be specified using timm as a prefix, for example, timm:resnet31, timm:inception_v4, timm:efficientnet_b3, and so on.
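The prefix convention can be illustrated by splitting a Backbone Model value into its source and model name. This is a hypothetical helper, not part of ArcGIS; the `builtin` label and the `resnet50` example string are assumptions used only to show the non-prefixed case. The part after `timm:` is a timm model name of the kind `timm.list_models()` returns.

```python
from typing import Tuple


def parse_backbone(value: str) -> Tuple[str, str]:
    """Split a Backbone Model value into (source, model_name).

    'timm:<name>' selects a PyTorch Image Models network; anything else is
    treated as a preconfigured backbone (labelled 'builtin' here).
    """
    prefix, sep, name = value.strip().partition(":")
    if sep and prefix.lower() == "timm":
        return "timm", name.strip()
    return "builtin", value.strip()


for v in ["timm:inception_v4", "timm:efficientnet_b3", "resnet50"]:
    print(v, "->", parse_backbone(v))
```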

Monitor Metric

Specifies the metric that will be monitored during checkpointing and early stopping.

Stop when model stops improving

Specifies whether early stopping will be implemented.

- Checked—Early stopping will be implemented, and the model training will stop when the model is no longer improving, regardless of the Max Epochs parameter value specified. This is the default.

- Unchecked—Early stopping will not be implemented, and the model training will continue until the Max Epochs parameter value is reached.
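A minimal sketch of how a monitored metric can drive checkpointing and early stopping. The `patience` (epochs without improvement before stopping) and `min_delta` values are illustrative assumptions; the wizard does not expose them, and the class and function names are hypothetical.

```python
from typing import List, Optional, Tuple


class EarlyStopping:
    """Signal a stop when the monitored metric stops improving."""

    def __init__(self, mode: str = "min", patience: int = 3, min_delta: float = 0.0):
        if mode not in ("min", "max"):
            raise ValueError("mode must be 'min' (e.g. loss) or 'max' (e.g. accuracy)")
        self.mode, self.patience, self.min_delta = mode, patience, min_delta
        self.best: Optional[float] = None
        self.best_epoch = -1
        self.bad_epochs = 0

    def _improved(self, value: float) -> bool:
        if self.best is None:
            return True
        if self.mode == "min":
            return value < self.best - self.min_delta
        return value > self.best + self.min_delta

    def step(self, epoch: int, value: float) -> bool:
        """Record one epoch's metric; return True when training should stop."""
        if self._improved(value):
            # A checkpoint of the best model so far would be saved here.
            self.best, self.best_epoch, self.bad_epochs = value, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


def run(valid_losses: List[float], max_epochs: int,
        stop_early: bool = True) -> Tuple[int, int]:
    """Return (epochs actually trained, epoch of the best checkpoint)."""
    stopper = EarlyStopping(mode="min", patience=3)
    for epoch in range(max_epochs):
        should_stop = stopper.step(epoch, valid_losses[epoch])
        if stop_early and should_stop:
            return epoch + 1, stopper.best_epoch
    return max_epochs, stopper.best_epoch
```

With early stopping unchecked, training still runs to Max Epochs, but the best checkpoint is the same; checking it saves the epochs spent after the metric stopped improving.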

Freeze Model

Specifies whether the backbone layers in the pretrained model will be frozen, so that the weights and biases remain as originally designed.

- Checked—The backbone layers will be frozen, and the predefined weights and biases of the Backbone Model parameter value will not be altered. This is the default.

- Unchecked—The backbone layers will not be frozen, and the weights and biases of the Backbone Model parameter can be altered to fit the training samples. This takes more time to process but typically produces better results.

Weight Initialization Scheme

Specifies the scheme in which the weights will be initialized for the layer.

To train a model with multispectral data, the model must accommodate the various types of bands available. This is done by reinitializing the first layer in the model.

- Random—Random weights will be initialized for non-RGB bands, and pretrained weights will be preserved for RGB bands. This is the default.

- Red band—Weights corresponding to the red band from the pretrained model's layer will be cloned for non-RGB bands, and pretrained weights will be preserved for RGB bands.

- All random—Random weights will be initialized for RGB bands as well as non-RGB bands. This option applies only to multispectral imagery.

This parameter is only applicable when multispectral imagery is used in the model.
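The three schemes can be sketched by building first-layer weights one band at a time. This is an illustration under stated assumptions: each band's weights are a flat list of floats, bands are identified by hypothetical names such as `nir`, and random weights are drawn from a normal distribution with standard deviation 0.01. None of these details are taken from ArcGIS.

```python
import random
from typing import Dict, List, Sequence

Kernel = List[float]  # flattened first-layer weights fed by one input band
SCHEMES = ("random", "red_band", "all_random")


def init_first_layer(bands: Sequence[str], pretrained: Dict[str, Kernel],
                     scheme: str = "random", seed: int = 0) -> List[Kernel]:
    """Return one kernel per input band for a multispectral first layer.

    `pretrained` maps 'red', 'green' and 'blue' to the pretrained RGB kernels.
    """
    if scheme not in SCHEMES:
        raise ValueError(f"scheme must be one of {SCHEMES}")
    rng = random.Random(seed)
    size = len(pretrained["red"])

    kernels = []
    for band in bands:
        is_rgb = band in pretrained
        if scheme == "all_random" or (scheme == "random" and not is_rgb):
            kernels.append([rng.gauss(0.0, 0.01) for _ in range(size)])
        elif is_rgb:
            kernels.append(list(pretrained[band]))   # keep pretrained RGB weights
        else:  # red_band scheme, non-RGB band
            kernels.append(list(pretrained["red"]))  # clone the red-band weights
    return kernels


pre = {"red": [1.0, 2.0], "green": [3.0, 4.0], "blue": [5.0, 6.0]}
print(init_first_layer(["red", "green", "blue", "nir"], pre, "red_band"))
# prints [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [1.0, 2.0]]
```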

- Click the Next button to move to the Result page.

Result

The Result page shows the key details of the trained model so you can review them. It also allows you to compare the trained model to other models. Understanding deep learning models is essential before using them in inferencing. Reviewing a model gives you an indication of how it was trained and how it might perform. In many cases, you may have multiple models to compare.

| Item | Description |

|---|---|

Model | Use the Browse button |

Model Type | The name of the model architecture. |

Backbone | The name of the preconfigured neural network that was used as the architecture for the training model. |

Learning Rate | The learning rate used in the training of the neural networks. If you did not specify the value, it will be calculated by the training tool. |

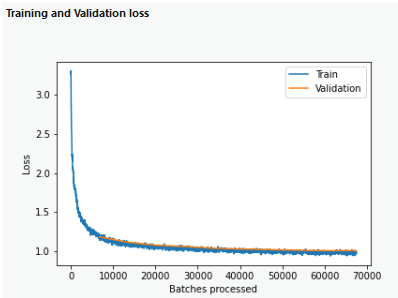

Training and Validation loss | This section displays a graph that shows training loss and validation loss over the course of training the model.

|

Analysis of the model | A metric or number, depending on the model architecture. For example, pixel classification models will display the following metrics for each class: precision, recall, and the f1 score. Object detection models will display the average precision score. |

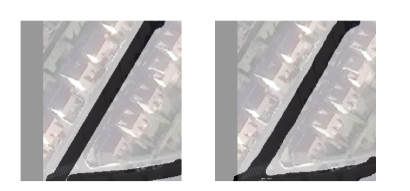

Sample Results | Shows examples of ground reference and predictions pairs.

|

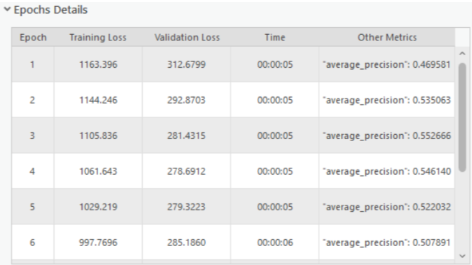

Epochs Details | A table containing information for each epoch, such as training loss, validation loss, time, and other metrics.

|

to find the model that you want to review. All the models associated with it will be added to the

to find the model that you want to review. All the models associated with it will be added to the
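The per-class metrics reported for pixel classification models follow the standard definitions: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean. A minimal sketch, assuming each pixel's ground reference and predicted class are given as flat lists of class indices; which pixels ArcGIS evaluates (for example, only the validation chips) is not covered here, and the function name is hypothetical.

```python
from typing import Dict, Sequence


def per_class_metrics(truth: Sequence[int], pred: Sequence[int],
                      num_classes: int) -> Dict[int, Dict[str, float]]:
    """Precision, recall and F1 for each class index."""
    metrics = {}
    for c in range(num_classes):
        tp = sum(1 for t, p in zip(truth, pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(truth, pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(truth, pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        metrics[c] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics


print(per_class_metrics([0, 0, 1, 1, 1, 2], [0, 1, 1, 1, 0, 2], 3)[2])
# prints {'precision': 1.0, 'recall': 1.0, 'f1': 1.0}
```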