| Label | Explanation | Data Type |
| --- | --- | --- |
| Prediction Type | Specifies the operation mode that will be used. The tool can be run to train a model to only assess performance, predict features, or create a prediction surface. | String |
| Input Training Features | The feature class containing the Variable to Predict parameter value and, optionally, the explanatory training variables from fields. | Feature Layer |
| Variable to Predict (Optional) | The variable from the Input Training Features parameter value containing the values to be used to train the model. This field contains known (training) values of the variable that will be used to predict at unknown locations. | Field |
| Treat Variable as Categorical (Optional) | Specifies whether the Variable to Predict value is a categorical variable. | Boolean |
| Explanatory Training Variables (Optional) | A list of fields representing the explanatory variables that help predict the value or category of the Variable to Predict value. Check the Categorical check box for any variables that represent classes or categories (such as land cover or presence or absence). | Value Table |
| Explanatory Training Distance Features (Optional) | The feature layer containing the explanatory training distance features. Explanatory variables will be automatically created by calculating a distance from the provided features to the Input Training Features values. Distances will be calculated from each of the features in the Input Training Features value to the nearest Explanatory Training Distance Features values. If the input Explanatory Training Distance Features values are polygons or lines, the distance attributes will be calculated as the distance between the closest segments of the pair of features. | Feature Layer |
| Explanatory Training Rasters (Optional) | The explanatory training variables extracted from rasters. Explanatory training variables will be automatically created by extracting raster cell values. For each feature in the Input Training Features parameter, the value of the raster cell is extracted at that exact location. Bilinear raster resampling is used when extracting the raster value for continuous rasters. Nearest neighbor assignment is used when extracting a raster value from categorical rasters. Check the Categorical check box for any rasters that represent classes or categories such as land cover or presence or absence. | Value Table |
| Input Prediction Features (Optional) | A feature class representing the locations where predictions will be made. This feature class must also contain any explanatory variables provided as fields that correspond to those used from the training data. | Feature Layer |
| Output Predicted Features (Optional) | The output feature class containing the prediction results. | Feature Class |
| Output Prediction Surface (Optional) | The output raster containing the prediction results. The default cell size will be the maximum cell size of the raster inputs. To set a different cell size, use the Cell Size environment setting. | Raster Dataset |
| Match Explanatory Variables (Optional) | A list of the Explanatory Variables values specified from the Input Training Features parameter on the right and corresponding fields from the Input Prediction Features parameter on the left. | Value Table |
| Match Distance Features (Optional) | A list of the Explanatory Distance Features values specified for the Input Training Features parameter on the right and corresponding feature sets from the Input Prediction Features parameter on the left. Explanatory Distance Features values that are more appropriate for the Input Prediction Features parameter can be provided if those used for training are in a different study area or time period. | Value Table |
| Match Explanatory Rasters (Optional) | A list of the Explanatory Rasters values specified for the Input Training Features parameter on the right and corresponding rasters from the Input Prediction Features parameter or the Prediction Surface parameter to be created on the left. The Explanatory Rasters values that are more appropriate for the Input Prediction Features parameter can be provided if those used for training are in a different study area or time period. | Value Table |
| Output Trained Features (Optional) | The explanatory variables used for training (including sampled raster values and distance calculations), as well as the observed Variable to Predict field and accompanying predictions that will be used to further assess performance of the trained model. | Feature Class |
Output Variable Importance Table

(Optional) | The table that will contain information describing the importance of each explanatory variable used in the model. The explanatory variables include fields, distance features, and rasters used to create the model. If the Model type parameter value is Gradient Boosted, importance is measured by gain, weight, and cover, and the table will include these fields. The output will include a bar chart, if the Number of Runs for Validation parameter value is one, or a box plot, if the value is greater than one, of the importance of the explanatory variables. | Table |

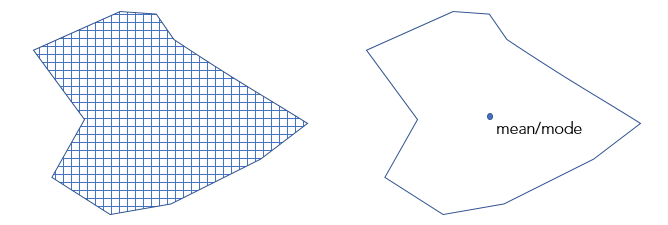

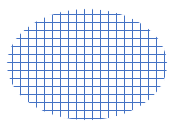

Convert Polygons to Raster Resolution for Training

(Optional) | Specifies how polygons will be treated when training the model if the Input Training Features values are polygons with a categorical Variable to Predict value and only Explanatory Training Rasters values have been provided.

| Boolean |

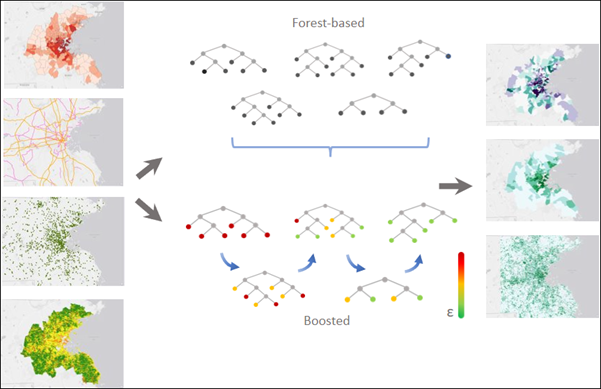

| Number of Trees (Optional) | The number of trees that will be created in the Forest-based and Gradient Boosted models. The default is 100. If the Model Type parameter value is Forest-based, more trees will generally result in more accurate model predictions; however, the model will take longer to calculate. If the Model Type parameter value is Gradient Boosted, more trees may result in more accurate model predictions; however, they may also lead to overfitting the training data. To avoid overfitting the data, provide values for the Maximum Tree Depth, L2 Regularization (Lambda), Minimum Loss Reduction for Splits (Gamma), and Learning Rate (Eta) parameters. | Long |
| Minimum Leaf Size (Optional) | The minimum number of observations required to keep a leaf (that is, the terminal node on a tree without further splits). The default minimum for regression is 5 and the default for classification is 1. For very large data, increasing these numbers will decrease the run time of the tool. | Long |
| Maximum Tree Depth (Optional) | The maximum number of splits that will be made down a tree. With a large maximum depth, more splits will be created, which may increase the chances of overfitting the model. If the Model Type parameter value is Forest-based, the default is data driven and depends on the number of trees created and the number of variables included. If the Model Type parameter value is Gradient Boosted, the default is 6. | Long |
| Data Available per Tree (%) (Optional) | The percentage of the Input Training Features values that will be used for each decision tree. The default is 100 percent of the data. Each decision tree in the forest is created using a random sample (approximately two-thirds) of the data specified. Using a lower percentage of the input data for each decision tree decreases the run time of the tool for very large datasets. | Long |
| Number of Randomly Sampled Variables (Optional) | The number of explanatory variables that will be used to create each decision tree. Each decision tree in the forest-based and gradient boosted models is created using a random subset of the specified explanatory variables. Increasing the number of variables used in each decision tree will increase the chances of overfitting the model, particularly if there are one or more dominant variables. The default is to use the square root of the total number of explanatory variables (fields, distances, and rasters combined) if the Variable to Predict value is categorical or to divide the total number of explanatory variables (fields, distances, and rasters combined) by 3 if the Variable to Predict value is numeric. | Long |
| Training Data Excluded for Validation (%) (Optional) | The percentage (between 0 percent and 50 percent) of the Input Training Features values that will be reserved as the test dataset for validation. The model will be trained without this random subset of data, and the model predicted values for those features will be compared to the observed values. The default is 10 percent. | Double |
| Output Classification Performance Table (Confusion Matrix) (Optional) | A confusion matrix that summarizes the performance of the model created on the validation data. The matrix compares the model predicted categories for the validation data to the actual categories. This table can be used to calculate additional diagnostics that are not included in the output messages. This parameter is available when the Variable to Predict value is categorical and the Treat Variable as Categorical parameter is checked. | Table |
| Output Validation Table (Optional) | A table that contains the R-squared value for each model if the Variable to Predict value is not categorical, or the accuracy of each model if the value is categorical. This table includes a bar chart of the distribution of the accuracies or R-squared values. This distribution can be used to assess the stability of the model. This parameter is available when the Number of Runs for Validation value is greater than 2. | Table |
| Compensate for Sparse Categories (Optional) | Specifies whether each category in the training dataset, regardless of its frequency, will be represented in each tree. This parameter is available when the Model Type parameter value is Forest-based. | Boolean |
| Number of Runs for Validation (Optional) | The number of iterations of the tool. The distribution of R-squared values (continuous) or accuracies (categorical) of all the models can be displayed using the Output Validation Table parameter. If the Prediction Type parameter value is Predict to raster or Predict to features, the model that produced the median R-squared value or accuracy will be used to make predictions. Using the median value helps ensure stability of the predictions. | Long |
| Calculate Uncertainty (Optional) | Specifies whether prediction uncertainty will be calculated when training, predicting to features, or predicting to raster. This parameter is available when the Model Type parameter value is Forest-based. | Boolean |
| Output Trained Model File (Optional) | An output model file that will save the trained model, which can be used later for prediction. | File |
| Model Type (Optional) | Specifies the method that will be used to create the model. | String |
| L2 Regularization (Lambda) (Optional) | A regularization term that reduces the model's sensitivity to individual features. Increasing this value will make the model more conservative and prevent overfitting the training data. If the value is 0, the model becomes the traditional Gradient Boosting model. The default is 1. This parameter is available when the Model Type parameter value is Gradient Boosted. | Double |
| Minimum Loss Reduction for Splits (Gamma) (Optional) | A threshold for the minimum loss reduction needed to split trees. Potential splits are evaluated for their loss reduction. If the candidate split has a higher loss reduction than this threshold value, the partition will occur. Higher threshold values avoid overfitting and result in more conservative models with fewer partitions. The default is 0. This parameter is available when the Model Type parameter value is Gradient Boosted. | Double |
| Learning Rate (Eta) (Optional) | A value that reduces the contribution of each tree to the final prediction. The value should be greater than 0 and less than or equal to 1. A lower learning rate prevents overfitting the model; however, it may require longer computation times. The default is 0.3. This parameter is available when the Model Type parameter value is Gradient Boosted. | Double |
| Maximum Number of Bins for Searching Splits (Optional) | The number of bins that the training data will be divided into to search for the best splitting point. The value cannot be 1. The default is 0, which corresponds to the use of a greedy algorithm. A greedy algorithm will create a candidate split at every data point. Providing too few bins for searching is not recommended because it will lead to poor model prediction performance. This parameter is available when the Model Type parameter value is Gradient Boosted. | Long |
| Optimize Parameters (Optional) | Specifies whether an optimization method will be used to find the set of hyperparameters that achieve optimal model performance. | Boolean |
| Optimization Method (Optional) | Specifies the optimization method that will be used to select and test search points to find the optimal set of hyperparameters. Search points are combinations of hyperparameters within the search space specified by the Model Parameter Setting parameter. This option is available when the Optimize Parameters parameter is checked. | String |
| Optimize Target (Objective) (Optional) | Specifies the objective function or value that will be minimized or maximized to find the optimal set of hyperparameters. | String |
| Number of Runs for Parameter Sets (Optional) | The number of search points within the search space specified by the Model Parameter Setting parameter that will be tested. This parameter is available when the Optimization Method value is Random Search (Quick) or Random Search (Robust). | Long |
| Model Parameter Setting (Optional) | A list of hyperparameters and their search spaces. Customize the search space of each hyperparameter by providing a lower bound, upper bound, and interval. The lower bound and upper bound specify the range of possible values for the hyperparameter. The following is the range of valid values for each hyperparameter: | Value Table |
| Output Parameter Tuning Table (Optional) | A table that contains the parameter settings and objective values for each optimization trial. The output includes a chart of all the trials and their objective values. This option is available when Optimize Parameters is checked. | Table |
| Include All Prediction Probabilities (Optional) | For categorical variables to predict, specifies whether the probability of every category of the categorical variable or only the probability of the record's category will be predicted. For example, if a categorical variable has categories A, B, and C, and the first record has category B, use this parameter to specify whether the probability for categories A, B, and C will be predicted or only the probability of category B will be predicted for the record. | Boolean |
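
The Model Parameter Setting and Number of Runs for Parameter Sets parameters describe a search space built from a lower bound, upper bound, and interval per hyperparameter, from which search points are drawn. The following is a minimal Python sketch of that idea, not the tool's implementation. The function names, the dictionary keys `number_of_trees` and `maximum_tree_depth`, and the sample bounds are hypothetical, and the sketch assumes that the upper bound is inclusive and that a random search draws distinct points uniformly.

```python
import itertools
import random


def expand_range(lower, upper, interval):
    """List candidate values from lower to upper (inclusive) in steps of interval."""
    if interval <= 0:
        raise ValueError("interval must be positive")
    if upper < lower:
        raise ValueError("upper bound must not be below lower bound")
    # Small tolerance so float ranges such as 0.1..0.5 step 0.2 reach the upper bound.
    steps = int((upper - lower) / interval + 1e-9)
    return [round(lower + i * interval, 10) for i in range(steps + 1)]


def search_points(search_space):
    """Build every combination of candidate values as a list of dicts."""
    names = list(search_space)
    value_lists = [expand_range(*search_space[name]) for name in names]
    return [dict(zip(names, combo)) for combo in itertools.product(*value_lists)]


def random_search(search_space, number_of_runs, seed=0):
    """Pick number_of_runs distinct search points at random (or all, if fewer exist)."""
    points = search_points(search_space)
    rng = random.Random(seed)
    return rng.sample(points, min(number_of_runs, len(points)))


# Hypothetical search space: name -> (lower bound, upper bound, interval).
space = {
    "number_of_trees": (100, 300, 100),
    "maximum_tree_depth": (4, 8, 4),
}
all_points = search_points(space)
trial_points = random_search(space, number_of_runs=4)
print(len(all_points), "search points in total;", len(trial_points), "sampled")
```

Enumerating the full list first keeps the sketch easy to check by hand. For a large search space, sampling each hyperparameter independently would avoid building every combination.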

Derived Output

| Label | Explanation | Data Type |
| --- | --- | --- |
| Output Uncertainty Raster Layers | When the Calculate Uncertainty parameter is checked, the tool will calculate a 90 percent prediction interval around each predicted value of the Variable to Predict parameter. | Raster Layer |
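
To make the 90 percent prediction interval concrete, the following Python sketch takes the 5th and 95th percentiles of a set of candidate predictions for one location. This is an illustration only: the table above does not state how the tool derives the interval, so the percentile approach, the function name `prediction_interval_90`, and the sample values are all assumptions.

```python
import statistics


def prediction_interval_90(values):
    """Return the (5th, 95th) percentiles of values as a 90 percent interval."""
    if len(values) < 2:
        raise ValueError("at least two values are needed")
    # n=20 yields 19 cut points at 5, 10, ..., 95 percent.
    cuts = statistics.quantiles(values, n=20, method="inclusive")
    return cuts[0], cuts[-1]


# Hypothetical candidate predictions for a single location.
candidate_predictions = list(range(101))
lower, upper = prediction_interval_90(candidate_predictions)
print(f"90 percent interval: {lower} to {upper}")
```

A wide interval at a location suggests that the prediction there is less certain than at a location with a narrow interval.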