To improve the performance and scalability of feature overlay tools such as Union and Intersect, operational logic called adaptive subdivision processing is used. This logic is triggered when the data cannot be processed within the available physical memory. To stay within the bounds of physical memory, which greatly improves performance, processing is done incrementally on subdivisions of the original extent. Features that straddle the edges of these subdivisions (also called tiles) are split at the tile edge and reassembled into a single feature during the last stage of processing. The vertices introduced at these tile edges remain in the output features. Tile boundaries may also be left in the output feature class when a feature is so large that the subdivision process cannot restore it to its original state using the available memory.
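The split-and-reassemble behavior described above can be illustrated with a minimal sketch. This is not the tools' internal implementation: the function names `split_at_vertical_edge` and `reassemble` are hypothetical, and the sketch assumes a simple polyline that crosses a single vertical tile edge once, from left to right. It shows why a vertex introduced at a tile edge is still present after the pieces are joined again.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def split_at_vertical_edge(line: List[Point], edge_x: float) -> Tuple[List[Point], List[Point]]:
    """Split a polyline at the tile edge x = edge_x (hypothetical helper).

    Assumes the line crosses the edge exactly once, going left to right.
    """
    for i in range(len(line) - 1):
        (x0, y0), (x1, y1) = line[i], line[i + 1]
        if x0 < edge_x < x1:
            # Linear interpolation gives the new vertex on the tile edge.
            t = (edge_x - x0) / (x1 - x0)
            cut = (edge_x, y0 + t * (y1 - y0))
            return line[: i + 1] + [cut], [cut] + line[i + 1:]
    raise ValueError("line does not cross the edge")


def reassemble(left: List[Point], right: List[Point]) -> List[Point]:
    """Join two pieces that share the cut vertex; the cut vertex is kept."""
    return left + right[1:]


original = [(0, 0), (10, 10)]
left, right = split_at_vertical_edge(original, edge_x=4)
merged = reassemble(left, right)
print(merged)  # three vertices: the one at x = 4 was introduced by the split
```

The reassembled line has the same shape as the original but carries one extra vertex where it crossed the tile edge.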

Why subdivide the data?

The overlay analysis tools perform best when processing can be done within your machine's available memory (free memory not being used by the system or other applications). This may not be possible when working with datasets that contain a large number of features, very complex features with complex feature interaction, features containing hundreds of thousands or millions of vertices, or a combination of these. Without tiling, available memory would be quickly exhausted and the operating system would start to page (use secondary storage as if it were main memory). Paging degrades performance, and at some point the system would run out of resources and the operation would fail. Tiling helps avoid this as much as possible. In extreme cases, memory management for the process is handed over to the operating system, which can then use all the resources at its disposal in an attempt to complete the operation.

What do the tiles look like?

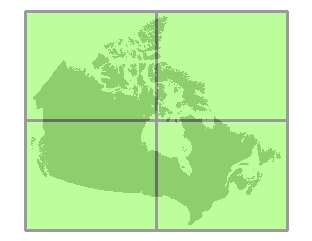

Every process starts with a single tile that spans the entire extent of the data. If the data in this tile is too large to be processed in physical memory, the tile is subdivided into four equal tiles. Processing then begins on a subtile, which is subdivided further if its data is still too large. This continues until the data within each tile can be processed within physical memory. See the example below:

The footprint of all the input features

The process begins with a tile that spans the entire extent of all datasets. For reference, this is called tile level 1.

If the data is too large to process in memory, the level 1 tile is subdivided into four equal tiles. These four subtiles are called level 2 tiles.

Based on the size of data in each tile, some tiles are further subdivided, while others are not.
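The tiling sequence above can be sketched as a recursive quadtree-style subdivision. The following is a minimal illustration under stated assumptions, not the tools' actual logic: it uses a point count per tile (`max_points`) as a stand-in for "fits in physical memory", and the names `subdivide`, `max_points`, and `max_level` are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Extent = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def subdivide(extent: Extent, points: List[Point], max_points: int,
              level: int = 1, max_level: int = 12) -> List[Tuple[int, Extent, int]]:
    """Return (level, extent, point_count) for every tile that gets processed."""
    xmin, ymin, xmax, ymax = extent
    # Half-open test so a point on a shared edge belongs to exactly one tile.
    inside = [(x, y) for x, y in points if xmin <= x < xmax and ymin <= y < ymax]
    # Assumption: a tile is "small enough" when it holds at most max_points points.
    if len(inside) <= max_points or level >= max_level:
        return [(level, extent, len(inside))]
    xmid, ymid = (xmin + xmax) / 2, (ymin + ymax) / 2
    quadrants = [
        (xmin, ymin, xmid, ymid), (xmid, ymin, xmax, ymid),
        (xmin, ymid, xmid, ymax), (xmid, ymid, xmax, ymax),
    ]
    tiles = []
    for quadrant in quadrants:
        tiles.extend(subdivide(quadrant, inside, max_points, level + 1, max_level))
    return tiles


# Sample data: three points cluster in the lower-left quadrant.
sample = [(1, 1), (1.5, 1.5), (3, 3), (5, 1), (6, 6), (1, 7)]
for level, tile_extent, count in subdivide((0, 0, 8, 8), sample, max_points=2):
    print(level, tile_extent, count)
```

With this sample data, only the crowded lower-left level 2 tile is split again into level 3 tiles; the other three level 2 tiles are processed as they are, which mirrors the uneven subdivision described in the example.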

Which tools use subdivisions?

The following tools from the Analysis Tools toolbox use subdivision logic when dealing with large datasets:

- Buffer (when using dissolve option)

- Clip

- Erase

- Identity

- Intersect

- Split

- Symmetrical Difference

- Union

- Update

These tools from the Data Management toolbox also use subdivision logic when dealing with large datasets:

- Dissolve

- Feature To Line

- Feature To Polygon

- Polygon To Line

Process fails with an out of memory error

The subdivision approach may not help in processing extremely large features (features with many millions of vertices). Splitting and reassembling extremely large features multiple times across tile boundaries is very costly in terms of memory and may cause out of memory errors if the feature is too large. Whether this happens depends on how much memory is available (free memory not being used by the system or other applications) on the machine running the process. A large feature may cause an out of memory error on one machine configuration and not on another, and the error can occur on the same machine one time and not another, depending on the resources being used by other applications. Examples of very large features with many vertices are road casings for an entire city or a polygon representing a complex river estuary.

The out of memory error can also occur if a second application or geoprocessing tool is run while a tool is processing. The second process may use a portion of the memory that the subdivision process expected to be available, causing the subdivision process to demand more memory than is actually available. It is recommended that no other operations be performed on a machine while processing large datasets.

One recommended technique is to use the Dice tool to divide large features into smaller features before processing.
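The idea behind dicing can be illustrated with a small sketch that breaks one long polyline into parts that each stay under a vertex limit. This is only a conceptual illustration and does not reproduce the Dice tool's algorithm; the function name `dice_polyline` and its `vertex_limit` argument are assumptions made for this example.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def dice_polyline(vertices: List[Point], vertex_limit: int) -> List[List[Point]]:
    """Split a polyline into parts with at most vertex_limit vertices each.

    Consecutive parts share one vertex so the parts still connect end to end.
    A line with fewer than two vertices yields no parts.
    """
    if vertex_limit < 2:
        raise ValueError("vertex_limit must be at least 2")
    parts = []
    start = 0
    while start < len(vertices) - 1:
        parts.append(vertices[start:start + vertex_limit])
        # Step back one vertex so the next part begins where this one ended.
        start += vertex_limit - 1
    return parts


line = [(float(i), float(i * i)) for i in range(10)]
for part in dice_polyline(line, vertex_limit=4):
    print(len(part), part[0], part[-1])
```

Each resulting part is small enough to be handled on its own, which is the property that makes pre-dicing useful before running an overlay tool on very large features.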

What data format is recommended when working with large data?

Personal geodatabases and shapefiles are limited in size to 2 gigabytes (GB). If a process's output exceeds this size, failures can occur. Enterprise and file geodatabases do not have this limitation, so they are recommended as the output workspace when processing very large datasets. For enterprise geodatabases, consult the database administrator for details on data loading policies. Unplanned or unapproved large data loading operations may be restricted.