Available with Geostatistical Analyst license.

Before you decide on an interpolation model for decision-making, you should investigate how well the model can predict values at new locations. However, if you don't know the true values at the locations between the measured points, how can you know whether your model is predicting those values accurately and reliably? Answering this question seems to require knowing the values at locations that you haven't sampled. Fortunately, there is a common and widely used method for assessing interpolation accuracy and reliability: cross validation.

Cross validation

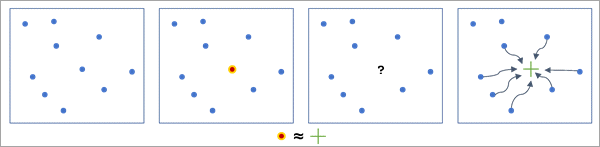

Cross validation is a leave-one-out resampling method that first uses all input points to estimate the parameters of an interpolation model (such as the semivariogram of kriging or the power value of inverse distance weighting). It then removes a single input point and uses the remaining points to predict the value at the location of the hidden point, and the predicted value is compared to the measured value. The hidden point is then added back to the dataset, and a different point is hidden and predicted. This process repeats through all input points.

The diagram at the beginning of this section shows the cross validation process for a single point. After the interpolation model is estimated from all blue points, the value of the red point is hidden, and the remaining points are used to predict the value of the hidden point. The prediction is then compared to the measured value. This process repeats for all 10 points.
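The leave-one-out loop described above can be sketched in a few lines. This is a minimal illustration, not the Geostatistical Analyst implementation: it assumes inverse distance weighting with a fixed power of 2 standing in for a parameter that would already have been estimated from all points, and the function names `idw_predict` and `leave_one_out` are hypothetical.

```python
import math


def idw_predict(target, points, power=2.0):
    """Inverse distance weighted prediction at `target` from (x, y, value) tuples."""
    num = 0.0
    den = 0.0
    for x, y, v in points:
        d = math.hypot(x - target[0], y - target[1])
        if d == 0.0:
            return v  # exact interpolator: a coincident point returns its own value
        w = 1.0 / d ** power
        num += w * v
        den += w
    return num / den


def leave_one_out(points, power=2.0):
    """Hide each point in turn, predict it from the others, return (measured, predicted, error)."""
    results = []
    for i, (x, y, measured) in enumerate(points):
        # Slicing builds a new list, so the hidden point is "added back" automatically.
        remaining = points[:i] + points[i + 1:]
        predicted = idw_predict((x, y), remaining, power)
        results.append((measured, predicted, predicted - measured))
    return results
```

For example, `leave_one_out([(0.0, 0.0, 1.0), (1.0, 0.0, 2.0), (0.0, 1.0, 3.0)])` predicts the first point as 2.5 from its two equidistant neighbors, giving an error of 1.5. Note that the power parameter is never re-estimated inside the loop, which mirrors the double use of the data discussed later in this topic.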

Cross validation is effective at assessing interpolation models because it simulates predicting values at new, unmeasured locations. However, the values at these locations are only hidden, not unmeasured, so the predicted values can be validated against their known values. If the model can accurately predict the values of the hidden points, it should also be able to accurately predict the values at new unmeasured locations. If, however, the cross validation errors are very large, you should also expect large errors when predicting to new locations.

Cross validation is performed automatically while building an interpolation model, and the results are shown on the last page of the Geostatistical Wizard. Cross validation can also be performed on an existing geostatistical layer using the Cross Validation tool. If a geostatistical layer is in a map, you can view the cross validation statistics either by right-clicking the layer and choosing Cross Validation or by clicking the Cross Validation button on the Data tab of the ribbon for the geostatistical layer.

However, cross validation has the disadvantage that it initially uses all input points to estimate the interpolation model parameters before sequentially hiding each point. Because all points contributed to the estimation of the interpolation parameters, they were not entirely hidden in the procedure. Individual points generally do not greatly influence the estimated values of the interpolation parameters; however, for small datasets and datasets containing outliers, even a single point can significantly change the estimates of interpolation parameters. To entirely hide the values of points and avoid any double-use of the data, you can use validation.

Validation

Validation is similar to cross validation, except that it first removes an entire subset of the input points, called the test dataset. It then uses the remaining points, called the training dataset, to estimate the parameters of the interpolation model. The interpolation model then predicts to all locations of the test dataset, and the validation errors are calculated for each test point. Because the test dataset was not used in any way to estimate the interpolation parameters or make predictions, validation is the most rigorous way to estimate how accurately and reliably the interpolation model will predict to new locations with unknown values. However, validation has the large disadvantage that you cannot use all your data to build the interpolation model, so the parameter estimates may not be as accurate and precise as they would be if you had used all the data. Because of the requirement to reduce the size of your dataset, cross validation is usually preferred to validation unless the data is oversampled.

You can create the test and training datasets using the Subset Features tool. After building an interpolation model (geostatistical layer) on the training dataset, you can perform validation using the GA Layer To Points tool. Provide the geostatistical layer created from the training dataset, predict to the test dataset, and validate on the field used to interpolate. The validation errors and other validation statistics are saved in the output feature class.
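The split-then-validate workflow can be sketched as follows. This is only an analogy to the Subset Features and GA Layer To Points tools, not their implementation: it assumes a simple random split with a fixed seed and uses inverse distance weighting (power 2) as a stand-in interpolation model. The names `split_test_training` and `validate` are hypothetical.

```python
import math
import random


def idw_predict(target, points, power=2.0):
    """Inverse distance weighted prediction at `target` from (x, y, value) tuples."""
    num = den = 0.0
    for x, y, v in points:
        d = math.hypot(x - target[0], y - target[1])
        if d == 0.0:
            return v
        w = d ** -power
        num += w * v
        den += w
    return num / den


def split_test_training(points, test_fraction=0.2, seed=0):
    """Randomly hold out a test subset; the remaining points form the training subset."""
    rng = random.Random(seed)
    shuffled = list(points)
    rng.shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[:n_test], shuffled[n_test:]


def validate(test, training, power=2.0):
    """Predict every test location using only the training points."""
    results = []
    for x, y, measured in test:
        predicted = idw_predict((x, y), training, power)
        results.append((measured, predicted, predicted - measured))
    return results
```

Unlike leave-one-out cross validation, the test points here never influence the model: in a fuller sketch, any parameter (such as the IDW power) would be estimated from `training` alone.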

The rest of this topic will discuss only cross validation, but all concepts are analogous for validation.

Cross validation statistics

When performing cross validation, various statistics are calculated for each point. These statistics can be viewed on the Table tab of the cross validation dialog box or saved in a feature class using the Cross Validation tool. The following fields are created for each point:

- Measured—The measured value of the hidden point.

- Predicted—The predicted value from cross validation at the location of the hidden point.

- Error—The difference between the predicted and measured values (predicted minus measured). A positive error means that the prediction was larger than the measured value, and a negative error means the prediction was less than the measured value.

- Standard Error—The standard error of the predicted value. If the errors are normally distributed, approximately two-thirds of measured values will fall within one standard error of the predicted value, and approximately 95 percent will fall within two standard errors.

- Standardized Error—The error divided by the standard error. To use the quantile or probability output types, standardized error values should follow a standard normal distribution (mean equal to zero and standard deviation equal to one).
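The per-point fields above follow directly from their definitions. The sketch below uses a hypothetical `CVRecord` class to show the sign convention (predicted minus measured) and how the standardized error is derived.

```python
from dataclasses import dataclass


@dataclass
class CVRecord:
    """One hidden point's cross validation result (hypothetical container)."""
    measured: float
    predicted: float
    std_error: float

    @property
    def error(self) -> float:
        # Positive when the prediction overshoots the measured value.
        return self.predicted - self.measured

    @property
    def standardized_error(self) -> float:
        # Error expressed in units of the point's own standard error.
        return self.error / self.std_error
```

For example, `CVRecord(measured=10.0, predicted=12.0, std_error=4.0)` has an error of 2.0 and a standardized error of 0.5.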

Additionally, for models in the empirical Bayesian kriging family, the following cross validation statistics are available:

- Continuous Ranked Probability Score—A positive number measuring the accuracy and precision of the predicted value, where a smaller value is better. The value is difficult to interpret on its own, but the closer the error is to zero and the smaller the standard error, the smaller the continuous ranked probability score. At its core, the statistic measures a distance (not a typical geographic distance) between the measured value and the predictive distribution, which accounts for both prediction and standard error accuracy. Narrow predictive distributions centered around the measured value (error close to zero and small standard error) will have continuous ranked probability scores close to zero. The value is measured in the units of the data values, so it should not be compared across datasets with different units or ranges of values.

- Validation Quantile—The quantile of the measured value with respect to the predictive distribution. If the model is correctly configured, the validation quantiles will be uniformly distributed between 0 and 1 and show no patterns. The validation quantiles of misconfigured models often cluster in the middle (most values near 0.5) or at the extremes (most values near 0 or 1).

- Inside 90 Percent Interval—An indicator (1 or 0) of whether the measured value is within a 90 percent prediction interval (analogous to a confidence interval). If the model is correctly configured, approximately 90 percent of points will fall inside the interval and have the value 1.

- Inside 95 Percent Interval—An indicator (1 or 0) of whether the measured value is within a 95 percent prediction interval. If the model is correctly configured, approximately 95 percent of points will fall inside the interval and have the value 1.
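To make the empirical Bayesian kriging statistics above more concrete, the sketch below computes them for a single point under a loudly stated assumption: that the predictive distribution is normal with mean equal to the predicted value and standard deviation equal to the standard error. Empirical Bayesian kriging predictive distributions are not necessarily normal, so this is an approximation for illustration, not the software's calculation. The closed-form normal CRPS used here is a standard result; the function names are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def gaussian_crps(measured, predicted, std_error):
    """Closed-form CRPS of the normal distribution N(predicted, std_error**2) at `measured`."""
    z = (measured - predicted) / std_error
    return std_error * (
        z * (2 * STD_NORMAL.cdf(z) - 1) + 2 * STD_NORMAL.pdf(z) - 1 / math.sqrt(math.pi)
    )


def validation_quantile(measured, predicted, std_error):
    """Quantile of the measured value within the (assumed normal) predictive distribution."""
    return NormalDist(predicted, std_error).cdf(measured)


def inside_interval(measured, predicted, std_error, level):
    """1 if the measured value lies inside the central `level` prediction interval, else 0."""
    half_width = STD_NORMAL.inv_cdf(0.5 + level / 2) * std_error
    return int(abs(measured - predicted) <= half_width)
```

Because the CRPS scales with `std_error`, a narrower distribution centered on the measured value scores closer to zero, and the score carries the units of the data.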

Note:

Interpolation methods that do not support the prediction standard error output type can only calculate the measured, predicted, and error values.

Cross validation summary statistics

The individual cross validation statistics for each hidden point provide detailed information about the performance of the model, but for large numbers of input points, the information needs to be summarized to quickly interpret what it means for your interpolation result. Cross validation summary statistics can be seen on the Summary tab of the cross validation dialog box and are printed as messages by the Cross Validation tool. The following summary statistics are available:

Note:

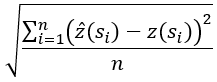

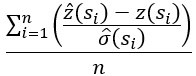

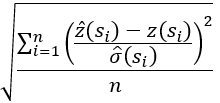

In all formulas, $n$ is the number of points, $s_i$ is the location of the hidden point, $z(s_i)$ is the measured value at that location, $\hat{z}(s_i)$ is the predicted value, and $\hat{\sigma}(s_i)$ is the standard error of the predicted value.

- Mean Error—The average of the cross validation errors. The value should be as close to zero as possible. The mean error measures model bias, where a positive mean error indicates a tendency to predict values that are too large, and a negative mean error indicates a tendency to underpredict the measured values. The statistic is in the units of the data values.

- Root Mean Square Error—The square root of the average squared prediction errors. This value should be as small as possible. The statistic measures prediction accuracy, and the value approximates the average deviation of the predicted values from the measured values. The value is in the units of the data values. For example, for temperature interpolation in degrees Celsius, a root mean square error value of 1.5 means that the predictions differed from the measured values by approximately 1.5 degrees, on average.

- Mean Standardized Error—The average of the standardized errors (error divided by standard error). The value should be as close to zero as possible. The statistic measures model bias on a standardized scale so that it is comparable across datasets with different values and units.

- Average Standard Error—The quadratic average (root mean square) of the standard errors. The average is performed on the cross validation variances (standard errors squared) because variances are additive, but standard errors are not. This statistic measures model precision, a tendency to produce narrow predictive distributions closely centered around the predicted value. The value should be as small as possible but also approximately equal to the root mean square error.

- Root Mean Square Standardized Error—The root mean square of the standardized errors. This statistic measures the accuracy of the standard errors by comparing the variability of the cross validation errors to the estimated standard errors. The value should be as close to one as possible. Values less than one indicate that the estimated standard errors are too large, and values greater than one indicate they are too small. The value can be interpreted as an inverse ratio; for example, a value of three means that the standard errors are one-third of the values they should be, on average. Similarly, a value of 0.5 means that the standard errors are double the values they should be.
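The five summary statistics above can be computed from the per-point fields as follows. This is a minimal sketch that follows the definitions in this section (errors are predicted minus measured, and the average standard error is the square root of the mean variance); the function name `summary_statistics` is hypothetical.

```python
import math
from statistics import fmean


def summary_statistics(measured, predicted, std_errors):
    """Cross validation summary statistics from parallel lists of per-point values."""
    errors = [p - m for m, p in zip(measured, predicted)]
    standardized = [e / s for e, s in zip(errors, std_errors)]
    return {
        "mean_error": fmean(errors),
        "rmse": math.sqrt(fmean(e * e for e in errors)),
        "mean_standardized_error": fmean(standardized),
        # Average the variances, then take the square root.
        "average_standard_error": math.sqrt(fmean(s * s for s in std_errors)),
        "rmsse": math.sqrt(fmean(z * z for z in standardized)),
    }
```

With well-calibrated standard errors, `average_standard_error` should be close to `rmse`, and `rmsse` should be close to one.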

Additionally, for models in the empirical Bayesian kriging family, the following cross validation summary statistics are also available:

- Average CRPS—The average of the continuous ranked probability score (CRPS) values. The value should be as small as possible. For a model to have a low average CRPS, the predictions and standard errors must both be estimated accurately and precisely.

- Inside 90 Percent Interval—The percent of measured values that fall within a 90 percent prediction interval. The value should be close to 90. This statistic measures whether the standard errors are consistent with the predicted values. Values above 90 indicate that the standard errors are too large, relative to the predicted values. Values below 90 indicate the standard errors are too small.

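- Inside 95 Percent Interval—The percent of measured values that fall within a 95 percent prediction interval. The value should be close to 95. This statistic measures whether the standard errors are consistent with the predicted values. As with the 90 percent interval, values above 95 indicate that the standard errors are too large, and values below 95 indicate that they are too small.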
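The interval coverage statistics can be checked with simulated data. The sketch assumes normal predictive distributions (so a 90 percent interval is about ±1.645 standard errors) and uses hypothetical simulated errors whose spread matches the reported standard errors. Inflating or shrinking the reported standard errors shows why values far above or below the nominal level signal standard errors that are too large or too small.

```python
import random
from statistics import NormalDist


def percent_inside(errors, std_errors, level):
    """Percent of points whose error lies inside the central `level` interval."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    inside = sum(abs(e) <= z * s for e, s in zip(errors, std_errors))
    return 100.0 * inside / len(errors)


# Hypothetical simulation: errors drawn with exactly the reported spread.
rng = random.Random(42)
std_errors = [rng.uniform(0.5, 2.0) for _ in range(20000)]
errors = [rng.gauss(0.0, s) for s in std_errors]

calibrated = percent_inside(errors, std_errors, 0.90)                    # near 90
too_large = percent_inside(errors, [2 * s for s in std_errors], 0.90)    # well above 90
too_small = percent_inside(errors, [0.5 * s for s in std_errors], 0.90)  # well below 90
```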

Note:

Interpolation methods that do not support the prediction standard error output type can only calculate the mean error and root mean square error statistics.

Interpolation model comparison

Cross validation can be used to assess the quality of a single geostatistical model, but another common application is to compare two or more candidate models to determine which one you will use in your analysis. If the number of candidate models is small, you can explore them using multiple cross validation dialog boxes. Aligning the dialog boxes side by side allows you to see all results at the same time and dive deeply into the details of each model.

However, for a large number of candidate models or when model creation is automated, the Compare Geostatistical Layers tool can be used to automatically compare and rank the models using customizable criteria. You can rank the models based on a single criterion (such as lowest root mean square error or mean error closest to zero), weighted average ranks of multiple criteria, or hierarchical sorting of multiple criteria (where ties in each criterion are broken by subsequent criteria in the hierarchy). Exclusion criteria can also be used to remove from the comparison any interpolation results that do not meet minimum quality standards. The Exploratory Interpolation tool also performs these same cross validation comparisons, but it generates the geostatistical layers automatically from a dataset and field. This tool can be used to quickly determine which interpolation methods perform best for your data without having to perform each of them individually.
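The three ranking strategies can be illustrated with a small sketch. It is not the Compare Geostatistical Layers algorithm: the `ModelResult` fields, the tie handling (tied models share the best rank), and the exclusion threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass


@dataclass
class ModelResult:
    name: str
    rmse: float
    mean_error: float


def rank(values):
    """1-based ranks where smaller is better; tied values share the lowest rank."""
    return [1 + sum(other < v for other in values) for v in values]


def weighted_rank_order(models, weights):
    """Order models by the weighted average of their per-criterion ranks."""
    criteria = {
        "rmse": [m.rmse for m in models],
        "mean_error": [abs(m.mean_error) for m in models],  # closest to zero is best
    }
    ranks = {k: rank(v) for k, v in criteria.items()}
    total = sum(weights.values())
    scores = [sum(weights[k] * ranks[k][i] for k in weights) / total for i in range(len(models))]
    # sorted() is stable, so models with equal scores keep their input order.
    return [m.name for _, m in sorted(zip(scores, models), key=lambda t: t[0])]


def hierarchical_order(models):
    """Sort by RMSE first; ties are broken by the absolute mean error."""
    return [m.name for m in sorted(models, key=lambda m: (m.rmse, abs(m.mean_error)))]


def exclude(models, max_abs_mean_error):
    """Drop models whose bias exceeds a minimum quality standard."""
    return [m for m in models if abs(m.mean_error) <= max_abs_mean_error]
```

For three hypothetical models A (RMSE 1.2, mean error 0.05), B (1.0, 0.3), and C (1.0, 0.1), hierarchical sorting gives C, B, A, while equally weighted ranks give C first and A tied with B.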

Cross validation charts

The cross validation pop-up dialog box provides various charts to visualize and explore the cross validation statistics interactively. The chart section of the dialog box contains five main tabs that each show a different chart.

The Predicted tab displays the predicted values versus measured values in a scatterplot with a blue regression line fit to the data. Since the predicted values should be equal to the measured values, a reference line is provided to see how closely the regression line falls to this ideal. However, in practice, the regression line usually has a shallower slope than the reference line because interpolation models (especially kriging) tend to smooth the data values, underpredicting large values and overpredicting small values.

Note:

The Regression function value below the plot is calculated using a robust regression procedure. This procedure first fits a standard linear regression line to the scatterplot. Next, any points that are more than two standard deviations above or below the regression line are removed, and a new regression equation is calculated. This procedure ensures that a small number of outliers will not bias the estimates of the slope and intercept. All points are shown in the scatterplot, even if they are not used to estimate the regression function.
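The two-pass robust regression described in the note can be sketched as follows. The note does not specify which standard deviation is used, so this sketch assumes the population standard deviation of the first-pass residuals; the function names are hypothetical.

```python
import statistics


def fit_line(xs, ys):
    """Ordinary least squares fit of ys on xs; returns (slope, intercept)."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def robust_fit(measured, predicted, k=2.0):
    """Fit, drop points more than k standard deviations from the line, then refit."""
    slope, intercept = fit_line(measured, predicted)
    residuals = [p - (slope * m + intercept) for m, p in zip(measured, predicted)]
    sd = statistics.pstdev(residuals)  # assumption: population std dev of residuals
    kept = [(m, p) for m, p, r in zip(measured, predicted, residuals) if abs(r) <= k * sd]
    ms, ps = zip(*kept)
    return fit_line(ms, ps)


# Hypothetical smoothed predictions (slope 0.5) with one outlier at index 10.
measured = [float(i) for i in range(20)]
predicted = [0.5 * m + 1.0 for m in measured]
predicted[10] += 20.0
ols = fit_line(measured, predicted)
robust = robust_fit(measured, predicted)
```

The single outlier tilts the ordinary fit, while the second pass recovers the underlying slope of 0.5. That slope, shallower than the 1:1 reference line, is the kind of smoothing signature the Predicted chart typically shows.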

The Error and Standardized Error tabs are similar to the Predicted tab, but they plot the cross validation errors and standardized errors versus the measured values. In these plots, the regression line should be flat, and the points should show no patterns. However, in practice, the slopes are usually negative due to smoothing.

The Normal QQ Plot tab displays a scatterplot of the standardized errors versus the equivalent quantile of a standard normal distribution. If the cross validation errors are normally distributed and the standard errors are estimated accurately, the points in the plot should all fall close to the reference line. Reviewing this plot is most important when using the quantile or probability output types because they require normally distributed errors.
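The points of a normal QQ plot can be computed by pairing the sorted standardized errors with standard normal quantiles. The plotting positions (i - 0.5) / n used below are one common convention and an assumption here; the software may use a different one. The function name is hypothetical.

```python
from statistics import NormalDist


def normal_qq_pairs(standardized_errors):
    """Return (theoretical quantile, observed standardized error) pairs for a QQ plot."""
    ordered = sorted(standardized_errors)
    n = len(ordered)
    std_normal = NormalDist()
    return [(std_normal.inv_cdf((i - 0.5) / n), z) for i, z in enumerate(ordered, start=1)]
```

If the standardized errors follow a standard normal distribution, each pair lies close to the line y = x.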

The Distribution tab shows the distributions of the cross validation statistics (estimated using kernel density). Use the Field drop-down menu to change the displayed statistic. A particularly useful option (shown in the image below) is to overlay the distributions of the measured and predicted values on the same chart to see how closely they align. These two distributions should look as similar as possible; however, the predicted distribution will usually be taller and narrower than the measured distribution due to smoothing.
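A Gaussian kernel density estimate, similar in spirit to the curves on the Distribution tab, can be sketched as below. The Silverman rule-of-thumb bandwidth is an assumption; the software's kernel and bandwidth choices are not documented here. The measured and predicted values are hypothetical, with the predictions shrunk toward the mean to mimic smoothing.

```python
import math
import statistics


def silverman_bandwidth(values):
    """Rule-of-thumb bandwidth: 1.06 * sample std dev * n^(-1/5)."""
    return 1.06 * statistics.stdev(values) * len(values) ** -0.2


def gaussian_kde(values, grid, bandwidth=None):
    """Evaluate a Gaussian kernel density estimate of `values` at each grid point."""
    h = bandwidth if bandwidth is not None else silverman_bandwidth(values)
    norm = 1.0 / (len(values) * h * math.sqrt(2.0 * math.pi))
    return [norm * sum(math.exp(-0.5 * ((g - v) / h) ** 2) for v in values) for g in grid]


measured = [0.0, 1.0, 2.0, 3.0, 4.0]
predicted = [1.0, 1.5, 2.0, 2.5, 3.0]  # hypothetical smoothed predictions
grid = [-10.0 + 0.01 * i for i in range(2401)]
density_measured = gaussian_kde(measured, grid)
density_predicted = gaussian_kde(predicted, grid)
```

The predicted curve comes out taller and narrower than the measured curve, which is the smoothing pattern described above.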

Cross validation statistics interpretation in context

A common misconception about cross validation and other model validation procedures is that they are intended to determine if the model is correct for the data. In truth, models are never correct for data collected from the real world, but they don't need to be correct to provide actionable information for decision-making. This concept is summarized by the well-known quote from George Box (1978): "All models are wrong, but some are useful." Think of cross validation statistics as a means to quantify the usefulness of a model, not as a checklist to determine whether a model is correct. With the many available statistics (individual values, summary statistics, and charts), it's possible to look too closely and find problems and deviations from ideal values and patterns. No model is perfect, because no model perfectly represents the data.

When reviewing cross validation results, it is important to remember the goals and expectations of your analysis. For example, suppose you are interpolating temperature in degrees Celsius to make public health recommendations during a heat wave. In this scenario, how should you interpret a mean error value of 0.1? Taken literally, it means that the model has positive bias and tends to overpredict temperature values. However, the average bias is only one-tenth of a degree, which is likely not large enough to be relevant to public health policy. On the other hand, a root mean square error value of 10 degrees means that the predicted values differed from the measured temperatures by approximately 10 degrees, on average. This model would likely be too inaccurate to be useful because differences of 10 degrees would elicit very different public health recommendations.

Another important consideration is whether you intend to create confidence intervals or margins of error for the predicted values, for example, reporting a predicted temperature of 28 degrees, plus or minus two degrees. If you do not intend to create margins of error, the statistics related to standard error are less important, because their primary purpose is to determine the accuracy of the margins of error. Although inaccurate standard errors can cause problems for the predicted values in some cases, it is common for interpolation models to predict accurately but estimate margins of error inaccurately.
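As a rough illustration of how a margin of error depends on the standard error, the sketch below assumes normally distributed errors, so a 95 percent margin is about 1.96 standard errors. The predicted value and standard error are hypothetical, and the function name is an assumption.

```python
from statistics import NormalDist


def margin_of_error(std_error, level=0.95):
    """Half-width of a central prediction interval, assuming normally distributed errors."""
    return NormalDist().inv_cdf(0.5 + level / 2) * std_error


predicted, std_error = 28.0, 1.0  # hypothetical values
moe = margin_of_error(std_error)
print(f"{predicted:.0f} ± {moe:.1f} degrees")  # prints 28 ± 2.0 degrees
```

If the root mean square standardized error were, for example, 2, the true spread would be roughly twice the reported standard error, and this margin would be about half as wide as it should be.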

Interpolation models have the most difficulty modeling extreme values, the largest and smallest values of a dataset. Interpolation models make predictions using weighted averages of measured values in the neighborhood of the prediction location. By averaging over data values, predictions are pulled toward the average value of the neighboring points, a phenomenon called smoothing. To varying degrees, smoothing is present in almost all interpolation models, and it can be seen in the slopes of the various cross validation charts. You should seek to minimize smoothing, but in practice, you should be most skeptical of the predictions in areas near the largest and smallest data values.
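The pull toward the neighborhood average can be seen in a one-dimensional leave-one-out sketch. It uses inverse distance weighting (power 2) purely as an example of a weighted-average interpolator, with hypothetical values. A weighted average can never exceed the largest neighboring value, so the hidden maximum is always underpredicted and the hidden minimum is always overpredicted.

```python
def idw_1d(x0, xs, vs, power=2.0):
    """Inverse distance weighted average of vs at x0 (x0 must not coincide with any x)."""
    weights = [1.0 / abs(x - x0) ** power for x in xs]
    return sum(w * v for w, v in zip(weights, vs)) / sum(weights)


xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
vs = [3.0, 5.0, 4.0, 12.0, 6.0, 2.0]  # hypothetical values; 12 and 2 are the extremes

predictions = []
for i in range(len(xs)):
    others_x = xs[:i] + xs[i + 1:]
    others_v = vs[:i] + vs[i + 1:]
    predictions.append(idw_1d(xs[i], others_x, others_v))
```

The predictions span a narrower range than the measured values, which is the smoothing that appears as sloped lines in the cross validation charts.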

Finally, your expectations for the cross validation results should depend on the quality and volume of the data. If there are few points or large distances between the points, you should expect the cross validation statistics to reflect the limited information available from the points. Even with a properly configured model, cross validation errors will still be large if there is not enough information available from the dataset to make accurate predictions. Analogously, with large amounts of informative and representative data, even poorly configured models with inaccurate parameters can still produce accurate and reliable predictions.