An oriented imagery table defines metadata for a collection of images. It is used as an input to the Add Images To Oriented Imagery Dataset geoprocessing tool.

The oriented imagery table can be used for adding images in the following instances:

- The images are in a supported image format other than JPEG or JPG.

- The image metadata is stored separately from the image files.

- The image metadata needs preprocessing prior to oriented imagery dataset inclusion.

- You want to use the image orientations defined by the Matrix field or by the Omega, Phi, and Kappa fields.

Note:

If any of the optional fields in the oriented imagery table has the same value for all images, and the field is an oriented imagery dataset property, a value for the field can be defined in the oriented imagery dataset properties. For example, if all images use the same elevation source, then the elevation source can be defined as an oriented imagery dataset property, assigned a value, and removed from the oriented imagery table. All the images will use the same value for the elevation source.
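As a rough illustration of the note above, the following Python sketch scans a table and reports which candidate fields hold the same value in every row. The sample data, the `constant_fields` helper, and the choice of candidate fields are hypothetical. Whether a given field is also an oriented imagery dataset property has to be checked separately.

```python
import csv
import io


def constant_fields(rows, candidate_fields):
    """Return {field: value} for candidate fields that hold one identical,
    non-empty value in every row."""
    constants = {}
    for field in candidate_fields:
        values = {row.get(field, "") for row in rows}
        if len(values) == 1:
            (value,) = values
            if value != "":
                constants[field] = value
    return constants


# Hypothetical sample table; a real table would be read from a file.
SAMPLE = """X,Y,ImagePath,CameraHeight,FarDistance
-117.19,34.05,img_001.tif,2.5,50
-117.18,34.06,img_002.tif,2.5,40
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE)))
shared = constant_fields(rows, ["CameraHeight", "FarDistance"])
print(shared)
```

In this sample, only CameraHeight is reported, so it is the only column that could be moved out of the table, provided it is also a dataset property.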

Oriented imagery table fields

The fields supported in the oriented imagery table are listed below. When a field is defined in the oriented imagery table, it takes precedence over the duplicate definition in the oriented imagery dataset properties. Additional fields in the oriented imagery table can be included in the output oriented imagery dataset if Include all fields from input table is selected in the Add Images To Oriented Imagery Dataset tool.
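The precedence rule can be pictured with a small Python sketch. This is only an illustration, not the tool's implementation: the `effective_value` helper is hypothetical, and treating a blank cell as "not defined" is an assumption that the text above does not confirm.

```python
def effective_value(row, dataset_properties, field):
    """Pick the value used for one image: the table value when present,
    otherwise the dataset-level property (None if neither is defined)."""
    value = row.get(field)
    # Assumption: a missing or blank cell counts as "not defined".
    if value not in (None, ""):
        return value
    return dataset_properties.get(field)


# Hypothetical dataset-level property and two table rows.
dataset_properties = {"CameraHeight": 2.0}
row_with_value = {"ImagePath": "img_001.tif", "CameraHeight": 3.5}
row_without_value = {"ImagePath": "img_002.tif", "CameraHeight": ""}

print(effective_value(row_with_value, dataset_properties, "CameraHeight"))
print(effective_value(row_without_value, dataset_properties, "CameraHeight"))
```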

Supported fields in the oriented imagery table

| Field name | Field type | Data type | Description |

|---|---|---|---|

X | Required | Numeric | The x-coordinate of the camera location in the ground coordinate system. The units are the same as the coordinate system units defined in the SRS field. |

Y | Required | Numeric | The y-coordinate of the camera location in the ground coordinate system. The units are the same as the coordinate system units defined in the SRS field. |

Z | Optional | Numeric | The z-coordinate of the camera location in the ground coordinate system. The z-coordinate units must be consistent with the x- and y-coordinate units and can be defined by the vertical coordinate system. The z-coordinate is typically expressed as orthometric heights (elevation above sea level). This is appropriate when the input digital elevation model (DEM ) is also using orthometric heights. |

SRS | Optional | String | The coordinate system of the camera location as a well-known ID (WKID) or a definition string (well-known text [WKT]). If a value for SRS is not explicitly defined, the spatial reference of the input oriented imagery dataset is assigned. |

ImagePath | Required | String | The path to the image file. This can be a local path or a web-accessible URL. Images can be in JPEG, JPG, TIF, or MRF format. |

Name | Optional | String | An alias name that identifies the image. |

AcquisitionDate | Optional | Date | The date when the image was collected. The time of the image collection can also be included. |

CameraHeading | Optional * | Numeric | The camera orientation of the first rotation around the z-axis of the camera. The value is in degrees. The direction of measurement for heading is in positive clockwise direction, where north is defined as zero degrees. When the image orientation is unknown, -999 is used. |

CameraPitch | Optional * | Numeric | The camera orientation of the second rotation around the x-axis of the camera. The value is in degrees. The direction of measurement for heading is in positive counterclockwise direction. The CameraPitch is zero degrees when the camera is facing straight down to the ground. The valid range of CameraPitch values is from 0 through 180 degrees. CameraPitch is 180 degrees for a camera facing straight up, and 90 degrees for a camera facing horizontally. |

CameraRoll | Optional * | Numeric | The camera orientation of the final rotation around the z-axis of the camera in the positive clockwise direction. The unit is degrees. Valid values range from -90 to 90. |

CameraHeight | Optional | Numeric | The height of the camera above the ground (elevation source). It is used to determine the visible extent of the image in which large values result in a greater view extent. The unit is meters. Values assigned must be greater than zero. |

HorizontalFieldOfView | Optional | Numeric | The camera’s field-of-view in a horizontal direction. The unit is degrees. The valid values range from 0 through 360. |

VerticalFieldOfView | Optional | Numeric | The camera’s field-of-view in the vertical direction. The unit is degrees. The valid values range from 0 through 180. |

NearDistance | Optional | Numeric | The nearest usable distance of the imagery from the camera position. This value determines the near plane of the visible frustum in a 3D scene. The unit is meters. |

FarDistance | Optional | Numeric | The farthest usable distance of the imagery from the camera position. This value determines the far plane of the visible frustum. The intersection of the frustum far plane with the ground defines the footprint, which is used to determine whether an image is returned when you click the map. The unit is meters. Values must be greater than zero. |

CameraOrientation | Optional | String | Stores detailed camera orientation parameters as a pipe-separated string. This field provides support for more accurate image-to-ground and ground-to-image transformations. |

OrientedImageryType | Optional | String | The imagery type is specified from the following:

|

ImageRotation | Optional | Numeric | The orientation of the camera in degrees relative to the scene when the image was captured. The valid values range from -360 through 360. The value is used to rotate the view of the image in the oriented imagery viewer to ensure the top of the image is up. |

Matrix | Optional * | String | The row-wise sorted rotation matrix that defines the transformation from image space to map space, specified as nine floating-point values, delimited by semicolons. Period or full-stop must be the decimal separator for all the values. |

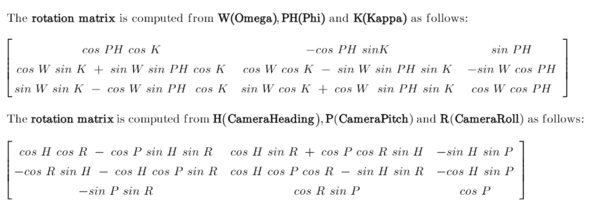

Omega | Optional * | Numeric | The rotational angle of the camera's x-axis. The unit is decimal degrees. |

Phi | Optional * | Numeric | The rotational angle of the camera's y-axis. The unit is decimal degrees. |

Kappa | Optional * | Numeric | The rotational angle of the camera's z-axis. The unit is decimal degrees. |

FocalLength | Optional * | Numeric | The focal length of the camera lens. The unit can be microns, millimeters, or pixels. |

PrincipalX | Optional | Numeric | The x-coordinate of the principal point of the autocollimation. The unit must be the same as the unit used for FocalLength. By default, the value is zero. |

PrincipalY | Optional | Numeric | The y-coordinate of the principal point of the autocollimation. The unit must be the same as the unit used for FocalLength. By default, the value is zero. |

Radial | Optional | String |

The radial distortion is specified as a set of three semicolon-delimited coefficients, such as 0;0;0 for K1;K2;K3. The coupling unit is the same as the unit specified for FocalLength. A common approach in computer vision applications is to provide coefficients without mentioning the coupling unit. In such cases, use the equations below to convert the coefficients. f is the FocalLength value and K1_cv, K2_cv, and K3_cv are the computer vision parameters: |

Tangential | Optional | String |

The tangential distortion is specified as a set of two semicolon-delimited coefficients, such as 0;0 for P1;P2. The coupling unit is the same as the unit used for FocalLength. A common approach in computer vision applications is to provide coefficients without mentioning the coupling unit. In such cases, use the following equations to convert the coefficients. f is the FocalLength value and P1_cv and P2_cv are the computer vision parameters: |

A0,A1,A2 B0,B1,B2 | Optional * | Numeric |

The coefficient of the affine transformation that establishes a relationship between the sensor space and image space. The direction is from ground to image. A0, A1, and A2 represent the translation in x direction. B0, B1, and B2 represent the translation in y direction. If the values are not provided, use the following equations to compute the values : |
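For the Radial and Tangential fields, the following Python sketch shows one plausible way to rescale unitless computer vision coefficients into FocalLength units. It assumes the usual Brown-Conrady form, in which the computer vision coefficients apply to normalized image coordinates (coordinates divided by f). Under that assumption, K1 = K1_cv / f^2, K2 = K2_cv / f^4, K3 = K3_cv / f^6, P1 = P1_cv / f, and P2 = P2_cv / f. The helper names are hypothetical, and the exact equations expected by the tool should be confirmed against the product documentation.

```python
def cv_to_focal_units(f, radial_cv=(0.0, 0.0, 0.0), tangential_cv=(0.0, 0.0)):
    """Rescale unitless computer vision distortion coefficients to the unit
    of the focal length f (assumed Brown-Conrady model, see lead-in)."""
    if f <= 0:
        raise ValueError("focal length must be greater than zero")
    k1_cv, k2_cv, k3_cv = radial_cv
    p1_cv, p2_cv = tangential_cv
    # Each power of the radius carries one factor of 1/f.
    radial = (k1_cv / f**2, k2_cv / f**4, k3_cv / f**6)
    tangential = (p1_cv / f, p2_cv / f)
    return radial, tangential


def to_field(values):
    """Join coefficients with semicolons; Python always writes a period
    as the decimal separator here."""
    return ";".join(f"{v:.12g}" for v in values)


# Hypothetical coefficients and focal length, for illustration only.
radial, tangential = cv_to_focal_units(
    2.0, radial_cv=(0.4, 1.6, 6.4), tangential_cv=(0.2, 0.2)
)
print(to_field(radial))
print(to_field(tangential))
```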

Note:

Optional *: The camera's exterior orientation in an oriented imagery dataset is determined by the CameraHeading, CameraPitch, and CameraRoll values.

If CameraHeading, CameraPitch, and CameraRoll are not explicitly defined but a value for Matrix is defined, the missing field values are computed from Matrix. If the values for Omega, Phi, and Kappa are defined, the values for Matrix, CameraHeading, CameraPitch, and CameraRoll are computed from them. The values must be specified in the sequence Omega, Phi, Kappa.
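To make the relationship between the angles and the Matrix string concrete, here is a minimal Python sketch that composes a rotation matrix from Omega, Phi, and Kappa and writes it row-wise as nine semicolon-delimited values. It assumes right-handed rotations composed as R = Rx(omega) · Ry(phi) · Rz(kappa), which is one common photogrammetric convention. The convention the tool actually uses is not stated here and should be verified. The function names are hypothetical.

```python
import math


def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def opk_to_matrix_field(omega_deg, phi_deg, kappa_deg):
    """Return the rotation matrix as a row-wise, semicolon-delimited string.
    Assumed order: Rx(omega) * Ry(phi) * Rz(kappa)."""
    w, p, k = (math.radians(v) for v in (omega_deg, phi_deg, kappa_deg))
    r = matmul(matmul(rot_x(w), rot_y(p)), rot_z(k))
    # Fixed-point formatting always uses a period as the decimal separator.
    return ";".join(f"{v:.10f}" for row in r for v in row)


print(opk_to_matrix_field(0.0, 0.0, 90.0))
```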

Note:

Optional *: The defined FocalLength value is used only if the affine values (A0,A1,A2 and B0,B1,B2) are also defined. Otherwise, FocalLength is computed in pixels from the image size and the camera fields of view.
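As an illustration of deriving a focal length in pixels from image size and field of view, the sketch below uses the standard pinhole relation f = (width / 2) / tan(HFOV / 2). This is an assumption about the kind of computation involved, not a confirmed description of what the tool does, and it only applies to fields of view below 180 degrees. The function name is hypothetical.

```python
import math


def focal_length_pixels(image_width_px, horizontal_fov_deg):
    """Pinhole-model focal length in pixels from the image width and the
    horizontal field of view (assumed relation, see lead-in)."""
    if image_width_px <= 0:
        raise ValueError("image width must be greater than zero")
    if not 0.0 < horizontal_fov_deg < 180.0:
        raise ValueError("the pinhole relation needs 0 < FOV < 180 degrees")
    half_fov = math.radians(horizontal_fov_deg) / 2.0
    return (image_width_px / 2.0) / math.tan(half_fov)


# Hypothetical 4000-pixel-wide image with a 90-degree horizontal field of view.
print(focal_length_pixels(4000, 90.0))
```

With a 90-degree field of view, the focal length comes out at about half the image width. Narrower fields of view give proportionally longer focal lengths.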