Available with Standard or Advanced license.

Available for an ArcGIS organization with the ArcGIS Reality license.

In ArcGIS Reality for ArcGIS Pro, you can use photogrammetry to correct drone imagery by removing geometric distortions caused by the sensor, the platform, and terrain displacement. After removing these distortions, you can generate Reality mapping products.

First, you will set up a Reality mapping workspace to manage the drone imagery collection. Next, you will perform a block adjustment, followed by a refined adjustment using ground control points. Finally, you'll generate a digital surface model (DSM), true ortho, and 2D DSM mesh.

ArcGIS Reality for ArcGIS Pro requires information about the camera, including focal length and sensor size, as well as the location at which each image was captured. This information is commonly stored as metadata in the image files, typically in the EXIF header. It is also helpful to know the GPS accuracy. In the case of drone imagery, this should be provided by the drone manufacturer. The GPS accuracy for the sample dataset in this tutorial is better than 5 meters.
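GPS positions in the EXIF header are usually stored as degrees, minutes, and seconds plus a hemisphere reference (the GPSLatitude/GPSLatitudeRef and GPSLongitude/GPSLongitudeRef tags). The following minimal Python sketch converts such values to signed decimal degrees. It assumes the rational values have already been read from the file and are written as "numerator/denominator" strings. The coordinates are made up for illustration and are not from the tutorial dataset.

```python
from fractions import Fraction


def dms_to_decimal(dms, ref):
    """Convert EXIF-style (degrees, minutes, seconds) rationals plus a
    hemisphere reference ('N', 'S', 'E', or 'W') to signed decimal degrees."""
    degrees, minutes, seconds = (float(Fraction(v)) for v in dms)
    value = degrees + minutes / 60 + seconds / 3600
    # South latitudes and west longitudes are negative in decimal degrees.
    return -value if ref in ("S", "W") else value


# Hypothetical EXIF values, written as "numerator/denominator" strings.
lat = dms_to_decimal(("34/1", "3/1", "3600/100"), "N")
lon = dms_to_decimal(("117/1", "12/1", "0/1"), "W")
print(round(lat, 6), round(lon, 6))  # 34.06 -117.2
```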

License:

The following are required to complete this tutorial:

- ArcGIS Reality Studio

- ArcGIS Pro 3.1 or later

- ArcGIS Reality for ArcGIS Pro Setup

Create a Reality mapping workspace

A Reality mapping workspace is an ArcGIS Pro subproject that is dedicated to Reality mapping workflows. It is a container in an ArcGIS Pro project folder that stores the resources and derived files that belong to a single image collection in a Reality mapping task.

A collection of 195 drone images is provided for this tutorial. The GCP folder contains the Tut_Ground_Control_UTM11N_EGM96.csv file, which contains ground control points (GCPs), and a file geodatabase (GCP_desc.gdb) with a Ground_Control_NAD832011_NAVD88 point feature class identifying the GCP locations at the site.

To create a Reality mapping workspace, complete the following steps:

- Download the tutorial dataset, unzip it, and save the contents to C:\SampleData\RM_Drone_tutorial.

- In ArcGIS Pro, create a project using the Map template and sign in to your ArcGIS Online account if necessary.

- Increase the processing speed of the block adjustment as follows:

- On the Analysis tab, click Environments.

- Locate the Parallel Processing Factor parameter in the Environments window and change the value to 90%.

- On the Imagery tab, in the Reality Mapping group, click the New Workspace drop-down menu and choose New Workspace.

- In the Workspace Configuration window, type a name for the workspace.

- Ensure that Workspace Type is set to Reality Mapping.

- Click the Sensor Data Type drop-down menu, and choose Drone.

- Ensure that Scenario Type is set to Drone.

- Click the Basemap drop-down menu, and choose Topographic.

- Accept all other default values and click Next.

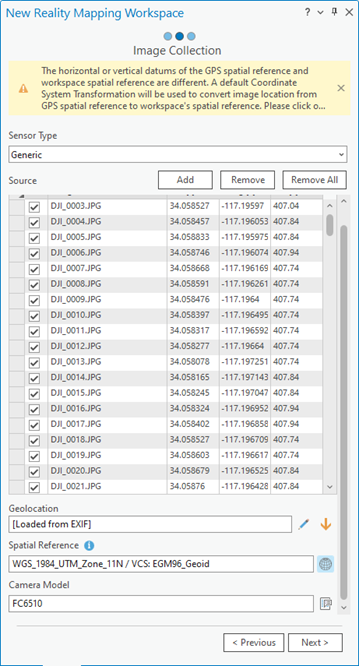

The Image Collection window appears.

- In the Image Collection window, click the Sensor Type drop-down menu, and choose Generic.

This option is used because the imagery was collected with an RGB camera.

- Click Add, browse to the tutorial data location, and select the Images folder.

- Ensure that the Spatial Reference and Camera Model values are correct.

The default projection for the workspace is based on the latitude, longitude, and altitude of the images. This projection determines the spatial reference for the ArcGIS Reality for ArcGIS Pro products. For this dataset, you’ll use WGS84_UTM_Zone_11N for the horizontal coordinate system and EGM96_Geoid for the vertical coordinate system.

- Click the Spatial Reference button.

The Spatial Reference dialog box appears.

- Click in the box under Current Z.

- In the search box, type EGM96 Geoid, press Enter, and choose EGM96_Geoid from the results.

- Click OK to accept the changes and close the Spatial Reference dialog box.

- Click Next.

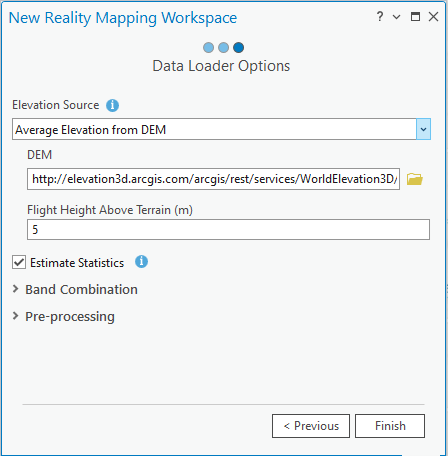

- Accept all the default settings in the Data Loader Options window and click Finish.

- If you have access to the internet, the Elevation Source value is derived from the World Elevation Service. This provides an initial estimate of the flight height for each image.

- If you do not have access to the internet or a DEM, choose Constant Elevation from the Elevation Source drop-down menu and enter an elevation value of 414 meters.

- The Flight Height Above Terrain (m) parameter sets the image exclusion height: images with a flight height above terrain less than this value are not included in the workspace.

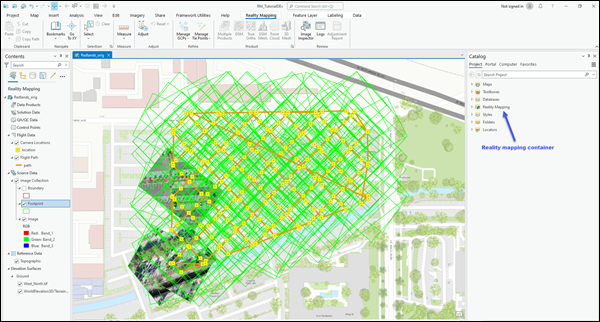

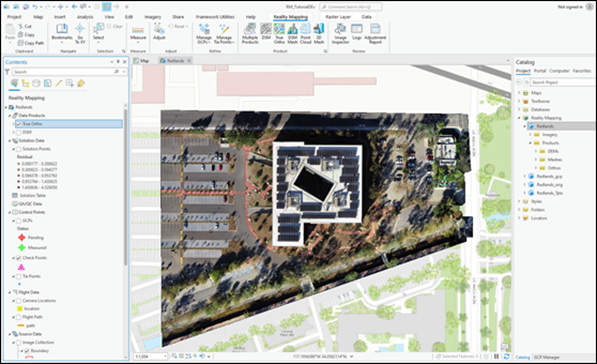
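The Flight Height Above Terrain exclusion rule can be sketched as a simple filter. This is an illustration, not ArcGIS Reality's implementation. It makes three assumptions: the terrain elevation is constant (414 meters, as in the Constant Elevation option above), the image altitudes share the same vertical reference as that elevation, and the exclusion height is 20 meters. The 20-meter value and the image names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DroneImage:
    name: str
    altitude_m: float  # assumed to share the terrain elevation's vertical reference


def keep_images(images, terrain_elevation_m, min_height_above_terrain_m):
    """Return images whose flight height above terrain is at least the
    exclusion height; lower images would be left out of the workspace."""
    return [
        img for img in images
        if img.altitude_m - terrain_elevation_m >= min_height_above_terrain_m
    ]


terrain = 414.0  # constant elevation, in meters
images = [
    DroneImage("DJI_0001", terrain + 2.0),    # e.g., captured during takeoff
    DroneImage("DJI_0002", terrain + 95.0),
    DroneImage("DJI_0003", terrain + 100.0),
]
kept = keep_images(images, terrain, min_height_above_terrain_m=20.0)
print([img.name for img in kept])  # ['DJI_0002', 'DJI_0003']
```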

Once the workspace has been created, the images, drone path, and image footprints are displayed. A Reality mapping container is added to the Contents pane, where the source imagery data and derived Reality mapping products will be stored.

The initial display of imagery in the workspace confirms that all images and necessary metadata were provided to initiate the workspace. The images have not been aligned or adjusted, so the mosaic will not look correct.
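The default UTM projection for this workspace (WGS84_UTM_Zone_11N) follows from the image longitudes. The sketch below applies the standard 6-degree UTM zone formula to a single location and derives the matching WGS 84 / UTM EPSG code. This tutorial does not describe how ArcGIS Reality combines the locations of all images into one choice, so treat the sketch as an illustration under that assumption. It also ignores the Norway and Svalbard zone exceptions, and the location used is hypothetical.

```python
import math


def utm_zone(lon_deg, lat_deg):
    """Return (zone, hemisphere, EPSG code) for WGS 84 / UTM using the
    standard 6-degree zones (Norway/Svalbard exceptions ignored)."""
    zone = int(math.floor((lon_deg + 180.0) / 6.0)) + 1
    zone = min(zone, 60)  # longitude 180 falls in zone 60
    hemisphere = "N" if lat_deg >= 0 else "S"
    epsg = (32600 if hemisphere == "N" else 32700) + zone
    return zone, hemisphere, epsg


# Hypothetical image location (decimal degrees).
print(utm_zone(-117.2, 34.06))  # (11, 'N', 32611)
```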

Note:

Most modern drones store GPS information in the EXIF header, and this information is used to automatically populate the image geolocation table. However, some older systems or custom-built drones may store GPS data in an external file. In this case, use the Import button next to the Geolocation parameter to import the external GPS file.

Perform a block adjustment

After the Reality mapping workspace has been created, the next step is to perform a block adjustment using the tools in the Adjust and Refine groups. The block adjustment first calculates tie points, which are common points in areas of image overlap. The tie points are then used to calculate the orientation of each image, known as exterior orientation in photogrammetry. The block adjustment process may take a few hours depending on your computer setup and resources.

To perform a block adjustment, complete the following steps:

- On the Reality Mapping tab, in the Adjust group, click Adjust

.

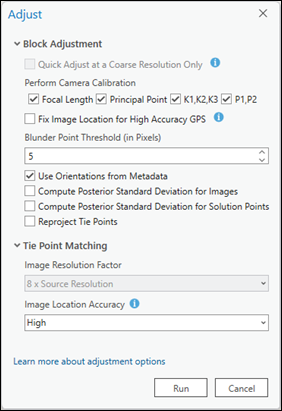

. - In the Adjust window, under Block Adjustment ,uncheck Quick Adjust at Coarse Resolution Only.

When this option is checked, an approximate adjustment is performed at a coarse, user-defined resolution. When this option is not checked, tie points are first computed at a coarse resolution, followed by a refined adjustment at image source resolution.

- Check the Perform Camera Calibration options.

This indicates that the input focal length is approximate and that the lens distortion parameters will be calculated during adjustment. For drone imagery, these options are checked by default, since most drone cameras have not been calibrated. These options should not be checked for high-quality cameras with known calibration.

The camera self-calibration requires that an image collection has in-strip overlap of more than 60 percent and cross-strip overlap of more than 30 percent.

- Uncheck Fix Image Location for High Accuracy GPS.

This option is used only for imagery acquired with differential GPS, such as Real-Time Kinematic (RTK) or Post-Processed Kinematic (PPK).

- For Blunder Point Threshold, set the value to 5 using the value selector arrows.

Tie points with a residual error greater than this value will not be used in computing the adjustment. The unit of measure is pixels.

- Check the Use Orientations from Metadata check box.

This reduces the adjustment duration by using the exterior orientation information embedded in the image EXIF header as initial values.

- Leave the box next to Reproject Tie Points unchecked.

- Under Tie Point Matching, choose 8 x Source Resolution from the Image Resolution Factor drop-down menu.

This parameter defines the resolution at which tie points are calculated. Larger values allow the adjustment to run more quickly. The default value is suitable for most imagery that includes a diverse set of features.

- For Image Location Accuracy, choose High from the drop-down menu.

GPS location accuracy indicates the accuracy level of the GPS data collected concurrently with the imagery and listed in the corresponding EXIF data file. This is used in the tie point calculation algorithm to determine the number of images in the neighborhood to use. The High value is used for GPS accuracy from 0 to 10 meters.

- Click Run.

After the adjustment has been performed, the Logs file displays statistical information such as the mean reprojection error (in pixels), which signifies the accuracy of adjustment, the number of images processed, and the number of tie points generated.
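To make the log statistics more concrete, the sketch below computes reprojection errors. Each error is the pixel distance between where a tie point was measured and where the adjusted orientation predicts it. The sketch then drops observations above a 5-pixel blunder threshold and averages the rest. It is a single-pass simplification with made-up coordinates. An actual adjustment reevaluates residuals iteratively, and the exact statistic reported in the log may be defined differently.

```python
import math


def reprojection_errors(observed, projected):
    """Pixel distance between each measured tie-point location and the
    location predicted by the adjusted image orientation."""
    return [math.dist(o, p) for o, p in zip(observed, projected)]


def filter_blunders(errors, threshold_px):
    """Keep residuals at or below the blunder threshold (in pixels)."""
    return [e for e in errors if e <= threshold_px]


# Hypothetical image coordinates (column, row) in pixels.
observed = [(100.0, 200.0), (340.0, 80.0), (512.0, 512.0), (20.0, 40.0)]
projected = [(100.3, 200.4), (340.0, 81.0), (520.0, 518.0), (19.4, 40.8)]

errors = reprojection_errors(observed, projected)
kept = filter_blunders(errors, threshold_px=5.0)
print(f"{len(errors) - len(kept)} blunder(s) removed")  # 1 blunder(s) removed
print(f"mean reprojection error: {sum(kept) / len(kept):.2f} px")  # mean reprojection error: 0.83 px
```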

The relative accuracy of the images is also improved, and derived products can be generated using the tools in the Product group. To improve the absolute accuracy of generated products, GCPs must be added to the block.
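Before processing your own collections, you can estimate whether a flight plan meets the camera self-calibration overlap requirement of more than 60 percent in-strip and more than 30 percent cross-strip. This sketch assumes straight, parallel flight lines over flat terrain. Under that assumption, overlap along each axis is one minus the ratio of spacing to footprint length. The footprint and spacing values are hypothetical.

```python
def overlap(spacing_m, footprint_m):
    """Fractional overlap of consecutive footprints along one axis."""
    return max(0.0, 1.0 - spacing_m / footprint_m)


def supports_self_calibration(photo_spacing_m, line_spacing_m,
                              footprint_along_m, footprint_across_m):
    """Check the >60% in-strip and >30% cross-strip overlap requirement."""
    in_strip = overlap(photo_spacing_m, footprint_along_m)
    cross_strip = overlap(line_spacing_m, footprint_across_m)
    return in_strip > 0.60 and cross_strip > 0.30, in_strip, cross_strip


# Hypothetical flight: 80 m x 100 m footprint, 20 m between photos, 40 m between lines.
ok, fwd, side = supports_self_calibration(20.0, 40.0, 80.0, 100.0)
print(ok, f"{fwd:.0%}", f"{side:.0%}")  # True 75% 60%
```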

Add GCPs

GCPs are points with known x,y,z ground coordinates, often obtained from a ground survey, that provide the photogrammetric process with reference points on the ground. A block adjustment can be applied without GCPs and still ensure relative accuracy, but adding GCPs increases the absolute accuracy of the adjusted imagery.

Import GCPs

To import GCPs, complete the following steps:

- On the Reality Mapping tab, in the Refine group, click Manage GCPs.

The GCP Manager window appears.

- In the GCP Manager window, click the Import GCPs button

.

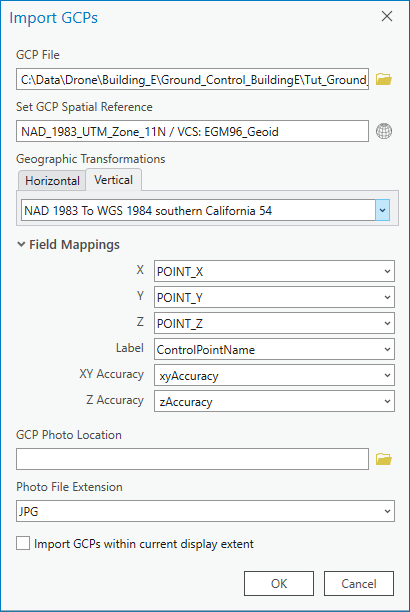

. - In the Import GCPs window, under GCP File, browse to and select the Tut_Ground_Control_UTM11N_EGM96.csv file, and click OK.

- Under Set GCP Spatial Reference, click the Spatial Reference button and do the following in the Spatial Reference window:

- For Current XY, expand Projected, UTM, and NAD83, and choose NAD 1983 UTM Zone 11N.

- To define the vertical coordinate system, click in the box below Current Z, expand Vertical Coordinate System, Gravity-related, and World, and choose EGM96 Geoid.

- Click OK to accept the changes and close the Spatial Reference window.

- Under Geographic Transformations, click the Horizontal tab and choose WGS 1984 (ITRF00) to NAD83 from the drop-down list.

- Click the Vertical tab and choose None from the drop-down menu.

- Under Field Mappings, map each GCP field to the corresponding column in the CSV file.

- Click OK to import the GCPs.

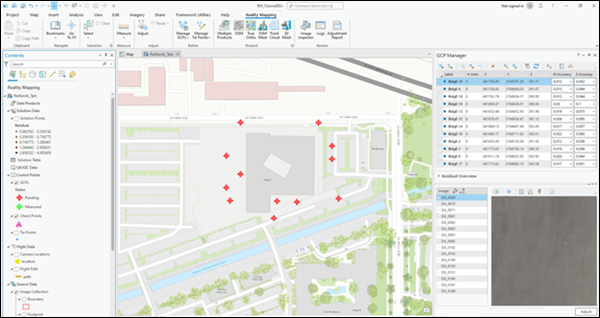

The imported GCPs are listed in the GCP Manager window, and their relative locations are displayed in the 2D map view with a red position indicator.
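Field mapping pairs each GCP attribute with a column in the CSV file. The sketch below shows the idea in plain Python. The column names, point IDs, and coordinates are hypothetical and are not taken from Tut_Ground_Control_UTM11N_EGM96.csv. Check the actual file header before mapping fields in the Import GCPs window.

```python
import csv
import io

# Hypothetical CSV content; the real file's columns may differ.
SAMPLE = """Name,Easting,Northing,Elevation
GCP-01,466210.12,3769032.55,415.30
GCP-02,466350.80,3769110.02,418.75
"""

# Hypothetical field mapping: GCP attribute -> CSV column name.
FIELD_MAP = {"id": "Name", "x": "Easting", "y": "Northing", "z": "Elevation"}


def read_gcps(text, field_map):
    """Parse GCP rows into dicts with an id and numeric x, y, z values."""
    gcps = []
    for row in csv.DictReader(io.StringIO(text)):
        gcps.append({
            "id": row[field_map["id"]],
            "x": float(row[field_map["x"]]),
            "y": float(row[field_map["y"]]),
            "z": float(row[field_map["z"]]),
        })
    return gcps


gcps = read_gcps(SAMPLE, FIELD_MAP)
print(len(gcps), gcps[1]["id"], gcps[1]["z"])  # 2 GCP-02 418.75
```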

Add tie points for selected GCPs

To add tie points, complete the following steps:

- Add the control point file from the file geodatabase in the tutorial data location to the 2D map view.

- In the Catalog pane, browse to SampleData > RM_Drone_Tutorial > GCP > GCP_desc.gdb, right-click the Ground_Control_NAD832011_NAVD88 feature class, and choose Add To Current Map.

- In the Contents pane, right-click Ground_Control_NAD832011_NAVD88 and click Label. If the points are not labeled with the GCP control point name, do the following:

- With the feature class selected, click the Labeling tab on the ribbon.

- In the Label Class category, for Field, choose Control Point Name from the drop-down list.

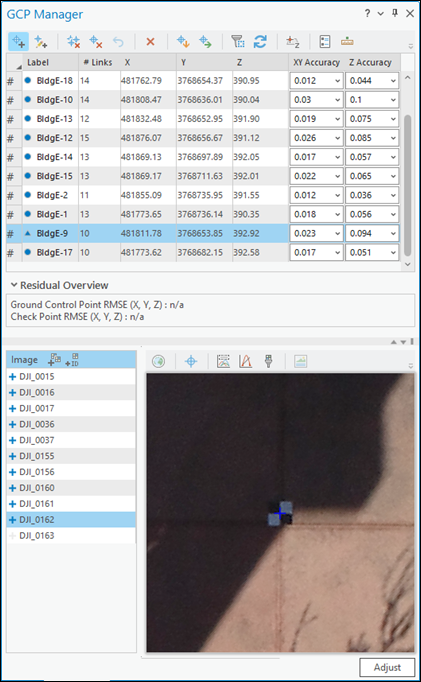

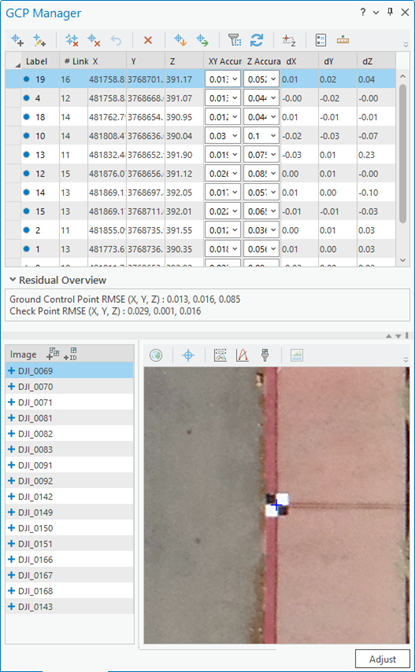

Once the GCPs are imported, the table in the GCP Manager becomes populated.

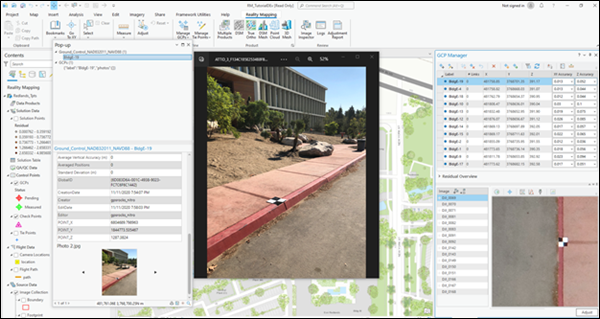

- In the GCP Manager window, click the BldgE-19 GCP, and do the following to see the exact location of the GCP:

- Click the point labeled with the same name in the 2D map view, and scroll to the bottom of the pop-up window.

- If the pop-up window is covering the GCP Manager window, drag the pop-up to a different location in the map window.

- To view a larger-scale GCP image, click the image.

- If the enlarged GCP image covers the entire window, click the Restore Down button.

- Click the Add GCP or Tie Point button in the GCP Manager window to accurately measure GCPs in the image viewer for each image.

The tie points for other images are automatically calculated by the image matching algorithm when possible, as indicated by a blue cross next to the image ID. Review each tie point for accuracy. If a tie point is not automatically identified, add it manually by selecting the appropriate location in the image.

- Click a single GCP listed in the upper panel of the GCP manager, do the measurement steps described below, and repeat the process for all GCPs.

A list of images containing the GCP will be displayed in the lower panel of the GCP Manager window.

- Click on each image listed, and reposition the GCP displayed in the image viewer on the exact location of the surveyed image panel target visible in the image.

To zoom and pan in the image window, right-click in the image window while scrolling the mouse wheel.

- After each GCP has been added and measured with tie points, select BldgE-19, right-click, and change it to a check point.

This provides a measure of the absolute accuracy of the adjustment, as check points are not used in the adjustment process.

- After adding GCPs and check points, click Adjust to run the adjustment again to incorporate these points.

Review adjustment results

Adjustment quality results can be viewed in the GCP Manager window by analyzing the residuals for each GCP. Residuals represent the difference between the measured position and the computed position of a point, and they are expressed in the units of the project's spatial reference. After the adjustment with GCPs is complete, three new fields (dX, dY, and dZ) are added to the GCP Manager table to display the residuals for each GCP. Use these values to evaluate the quality of the fit between the adjusted block and the map coordinate system. The root mean square error (RMSE) of the residuals can be viewed by expanding the Residual Overview section of the GCP Manager window.
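The RMSE of residuals is conventionally the square root of the mean of the squared residuals. The sketch below computes it per axis, separately for GCPs and check points. Check points are excluded from the adjustment, so their RMSE gives the independent measure of absolute accuracy. The residual values are invented for illustration, and the Residual Overview section may present its statistics differently.

```python
import math

# Hypothetical residuals in map units (meters) after adjustment with GCPs.
points = [
    {"id": "GCP-01", "type": "gcp", "dX": 0.012, "dY": -0.020, "dZ": 0.031},
    {"id": "GCP-02", "type": "gcp", "dX": -0.015, "dY": 0.010, "dZ": -0.024},
    {"id": "CHK-01", "type": "check", "dX": 0.030, "dY": 0.040, "dZ": -0.050},
]


def rmse(values):
    """Root mean square of a list of residuals."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def rmse_by_axis(points, point_type):
    """Per-axis RMSE for either GCPs ("gcp") or check points ("check")."""
    subset = [p for p in points if p["type"] == point_type]
    return {axis: rmse([p[axis] for p in subset]) for axis in ("dX", "dY", "dZ")}


for kind in ("gcp", "check"):
    stats = rmse_by_axis(points, kind)
    print(kind, {axis: round(value, 3) for axis, value in stats.items()})
```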

Additional adjustment statistics are provided in the adjustment report. To generate the report, on the Reality Mapping tab, in the Review group, click Adjustment Report.

Generate Reality mapping products

Once the block adjustment is complete, 2D and 3D imagery products can be generated using the tools in the Product group on the Reality Mapping tab. Multiple products can be generated simultaneously using the Reality Mapping Products wizard, or individually by selecting the applicable product tool from the Product group. The types of products that can be generated depend on several factors, including the sensor, flight configuration, and scenario type. The flight configuration of the sample dataset is nadir, which is ideal for 2D products such as a DSM, true ortho, and DSM mesh.

Note:

In this tutorial, two approaches to generating derived products are outlined below. One approach uses the Multiple Products wizard, and the other uses the individual product wizards listed in the Product group. Follow one approach or the other; completing both is not required for this tutorial.

Generate multiple products using the Reality Mapping Products wizard

The Reality Mapping Products wizard guides you through the workflow to create one or multiple Reality mapping products in a single process. The products that can be generated using the Reality Mapping Products wizard are DSM, true ortho, DSM mesh, point cloud, and 3D mesh. All generated products are stored in product folders of the same name under the Reality Mapping category in the Catalog pane.

To generate products using the Reality Mapping Products wizard, complete the following steps:

- On the Reality Mapping tab, click the Multiple Products button in the Product group.

The Reality Mapping Products Wizard window appears.

- In the Product Generation Settings pane, uncheck the 3D check box.

In this tutorial, only 2D products are generated.

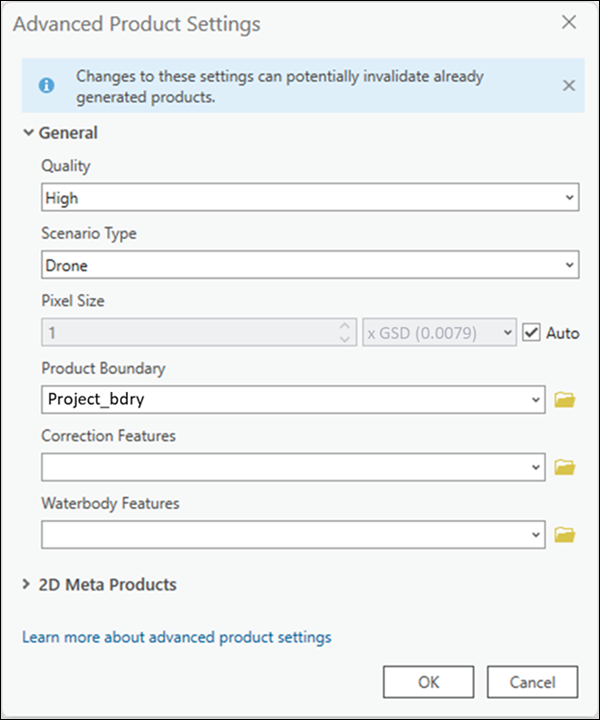

- Click the Shared Advanced Settings button.

The Advanced Products Settings dialog box appears, where you can define parameters that will impact the Reality mapping products to be generated. For details of the advanced product settings, see Shared Advanced Settings in the wizard.

The Quality and Scenario values are automatically set and should not be changed to ensure optimum performance and product quality.

- Accept the Pixel Size default settings to generate products at source image resolution.

- For Product Boundary, click the Browse button, browse to the file geodatabase in the tutorial data location, select Project_bdry, and click OK.

It is recommended that you provide a product boundary for the following reasons:

- Define the proper output extent—When you do not define a product boundary, the application automatically defines an extent based on various dataset parameters that may not match the project extent.

- Reduce processing time—If the required product extent is smaller than the image collection extent, defining a product boundary reduces the processing duration and automatically clips the output to the boundary extent.

- Accept all the other default values and click OK.

The Advanced Products Settings dialog box closes, and you return to the Product Generation Settings pane in the Reality Mapping Products wizard.

- Click Next to go to the DSM Settings pane, and ensure that the parameter values match the following:

- Output Type—Mosaic

- Format—Cloud Raster Format

- Compression—None

- Resampling—Bilinear

- Click Next to go to the True Ortho Settings pane, and ensure that the parameter values match the following:

- Output Type—Mosaic

- Format—Cloud Raster Format

- Compression—None

- Resampling—Bilinear

- Click Next to go to the DSM Mesh Settings pane, and ensure that the parameter values match the following:

- Format—SLPK

- Texture—JPG & DDS

- Click Finish to start the product generation process.

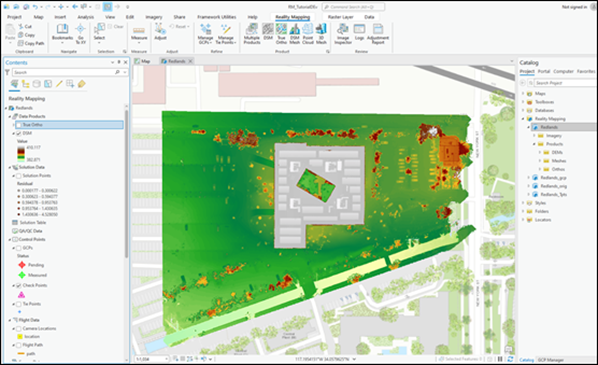

Once product generation is complete, the DSM and true ortho products are automatically added to the 2D map view. In the Catalog pane, in the Reality Mapping container, DSM is added to the DEM folder, the DSM mesh products are added to the Meshes folder, and the true ortho products are added to the Ortho folder.

You have completed the generation of 2D products, including a DSM, true ortho, and DSM mesh, using the Multiple Products wizard. You can also generate these 2D products using the wizard for each specific product, as described below.
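The clipping effect of a product boundary can be approximated with rectangular extents. This sketch intersects a hypothetical image collection extent with a hypothetical boundary extent. The actual Product Boundary is a polygon feature class, and processing time is not guaranteed to scale exactly with area.

```python
from typing import NamedTuple, Optional


class Extent(NamedTuple):
    xmin: float
    ymin: float
    xmax: float
    ymax: float


def clip_extent(collection: Extent, boundary: Extent) -> Optional[Extent]:
    """Intersect the image collection extent with the boundary extent."""
    xmin = max(collection.xmin, boundary.xmin)
    ymin = max(collection.ymin, boundary.ymin)
    xmax = min(collection.xmax, boundary.xmax)
    ymax = min(collection.ymax, boundary.ymax)
    if xmin >= xmax or ymin >= ymax:
        return None  # no overlap, so there is nothing to generate
    return Extent(xmin, ymin, xmax, ymax)


def area(e: Extent) -> float:
    return (e.xmax - e.xmin) * (e.ymax - e.ymin)


collection = Extent(0, 0, 600, 400)  # hypothetical, in map units
boundary = Extent(100, 50, 400, 300)
clipped = clip_extent(collection, boundary)
print(clipped, f"{area(clipped) / area(collection):.0%} of collection area")
```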

Generate a DSM

To generate a DSM using the Reality Mapping Products wizard, complete the steps below.

Note:

Elevation values can be derived when the image collection has sufficient overlap to form stereo pairs. Typical image overlap necessary to produce point clouds is 80 percent forward overlap along a flight line and 60 percent overlap between flight lines.

- On the Reality Mapping tab, click the DSM button in the Product group.

The Reality Mapping Products Wizard window appears.

- Click Shared Advanced Settings.

The Advanced Product Settings dialog box appears, where you can define parameters that will impact the Reality mapping products to be generated. For details of the advanced product settings, see Shared Advanced Settings in the wizard.

The Quality and Scenario values are automatically set and should not be changed to ensure optimum performance and product quality. However, if you want to generate a reduced resolution product, the Quality value can be lowered. See Shared Advanced Settings for more information about the impact of various quality settings on product generation.

- Accept the Pixel Size default values to generate products at source image resolution.

- For Product Boundary, select a feature class identifying the output product extent from the drop-down list, or click the Browse button, browse to the file geodatabase in the tutorial data location, select Project_bdry, and click OK.

It is recommended that you provide a product boundary for the following reasons:

- Define the proper output extent—When you do not define a product boundary, the application automatically defines an extent based on various dataset parameters that may not match the project extent.

- Reduce processing time—If the required product extent is smaller than the image collection extent, defining a product boundary reduces the processing duration and automatically clips the output to the boundary extent.

- Accept all the other default values and click OK.

The Advanced Products Settings dialog box closes, and you return to the Product Generation Settings pane in the Reality Mapping Products wizard.

- Click Next to go to the DSM Settings pane, and ensure that the parameter values match the following:

- Output Type—Mosaic

- Format—Cloud Raster Format

- Compression—None

- Resampling—Bilinear

- Click Finish to start the product generation process.

Once processing is complete, the DSM product is added to the Contents pane, the Data Products category, and the 2D map view. It is also added to the Catalog pane, the Reality Mapping container, and the DEMs folder.
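When planning a flight, the 80 percent forward and 60 percent side overlap guideline can be turned into a maximum exposure spacing and a maximum flight line spacing. This sketch assumes flat terrain and a constant image footprint, and the footprint dimensions are hypothetical.

```python
def max_spacing(footprint_m, target_overlap):
    """Largest spacing between exposures (or flight lines) that still gives
    the target fractional overlap for a footprint of the given length."""
    return footprint_m * (1.0 - target_overlap)


# Hypothetical footprint: 80 m along track, 100 m across track.
photo_spacing = max_spacing(80.0, 0.80)  # 80% forward overlap along a line
line_spacing = max_spacing(100.0, 0.60)  # 60% side overlap between lines
print(f"{photo_spacing:.1f} m between photos, {line_spacing:.1f} m between lines")
```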

Generate a true ortho

One of the options in the Reality mapping Product group is True Ortho. A true ortho is an orthorectified image with no perspective distortion so that above-ground features do not lean and obscure other features. To create a true ortho, a DSM derived from the adjusted block of overlapping images is required. As a result, a DSM will be generated as a part of the true ortho process regardless of whether a DSM was previously selected as a product. The generated true ortho image will be stored in the Orthos folder under the Reality Mapping category in the Catalog pane.
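The leaning of above-ground features comes from relief displacement. For a vertical (nadir) image, the standard photogrammetric relation is d = r × h / H. Here r is the radial distance of the object's top from the image center, h is the object height, and H is the flying height above the object's base. The values below are hypothetical. The sketch only shows why correcting this displacement requires surface heights, which the DSM provides.

```python
def relief_displacement(radial_distance, object_height_m, flying_height_m):
    """Relief displacement on a vertical image: d = r * h / H.

    d is returned in the same units as radial_distance (e.g., pixels);
    object height and flying height must share units (here, meters)."""
    return radial_distance * object_height_m / flying_height_m


# Hypothetical: top of a 10 m building imaged 1,500 pixels from the image
# center, photographed from 100 m above the building's base.
d = relief_displacement(1500, 10.0, 100.0)
print(f"{d:.0f} pixels")  # 150 pixels
```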

To generate a true ortho using the Reality Mapping Products wizard, complete the following steps:

- On the Reality Mapping tab, click the True Ortho button in the Product group.

The Reality Mapping Products Wizard window appears.

- Click Shared Advanced Settings.

The Advanced Product Settings dialog box appears, where you can define parameters that will impact the Reality mapping products that you generate. For a detailed description of the advanced product settings, see Shared Advanced Settings in the wizard.

- Complete steps 4 through 6 in the Generate multiple products using the Reality Mapping Products wizard section above.

- Click Next to go to the True Ortho Settings pane, and ensure that the parameter values match the following:

- Output Type—Mosaic

- Format—Cloud Raster Format

- Compression—None

- Resampling—Bilinear

- Click Finish to start the product generation process.

Once processing is complete, the True Ortho product is added to the Contents pane, the Data Products category, and the 2D map view. It is also added to the Catalog pane, the Reality Mapping container, and the Ortho folder.

Generate a DSM mesh

A DSM mesh is a 2.5D textured model of the project area in which the adjusted images are draped on a triangulated irregular network (TIN) version of the DSM extracted from the overlapping images in the adjusted block. The Reality Mapping Products wizard simplifies the creation of the DSM mesh product by providing a streamlined workflow with preconfigured parameters. To create a DSM mesh product, a DSM derived from the adjusted block of overlapping images is required. As a result, a DSM is generated as part of the DSM mesh generation process regardless of whether a DSM was selected as a separate product.
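The triangulated surface behind a DSM mesh can be illustrated by splitting each DSM cell into two triangles. The result has one z value per x,y location, which is the 2.5D property. This is a regular-grid triangulation under simplifying assumptions, not the algorithm ArcGIS Reality uses, and texture draping is omitted. The elevation values are made up.

```python
def grid_to_triangles(rows, cols):
    """Split each cell between four neighboring DSM posts into two triangles.
    Vertices are indexed row-major: index = r * cols + c."""
    triangles = []
    for r in range(rows - 1):
        for c in range(cols - 1):
            v00 = r * cols + c
            v01 = v00 + 1
            v10 = v00 + cols
            v11 = v10 + 1
            triangles.append((v00, v10, v01))
            triangles.append((v01, v10, v11))
    return triangles


def dsm_vertices(dsm, cell_size, x0=0.0, y0=0.0):
    """(x, y, z) for each DSM post; y decreases with row, as in a north-up raster."""
    return [(x0 + c * cell_size, y0 - r * cell_size, z)
            for r, row in enumerate(dsm) for c, z in enumerate(row)]


# Hypothetical 3 x 3 DSM (meters) with a raised feature in the center.
dsm = [[414.0, 414.5, 415.0],
       [414.2, 420.0, 415.1],
       [414.1, 414.3, 414.9]]
verts = dsm_vertices(dsm, cell_size=0.5)
tris = grid_to_triangles(len(dsm), len(dsm[0]))
print(len(verts), len(tris))  # 9 8
```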

To generate a DSM mesh using the Reality Mapping Products wizard, complete the following steps:

- On the Reality Mapping tab, click the DSM Mesh button in the Product group.

The Reality Mapping Products Wizard window appears.

- Click Shared Advanced Settings.

The Advanced Product Settings dialog box appears, where you can define parameters that will impact the Reality mapping products that you generate. For a detailed description of the advanced product settings, see Shared Advanced Settings in the wizard.

- Complete steps 4 through 7 in the Generate multiple products using the Reality Mapping Products wizard section above.

- Click Next to go to the DSM Mesh Settings pane, and ensure that the parameter values match the following:

- Format—SLPK

- Texture—JPG & DDS

- Click Finish to start the product generation process.

Once processing is complete, the DSM mesh product is added to the Catalog pane, the Reality Mapping container, and the Meshes folder.

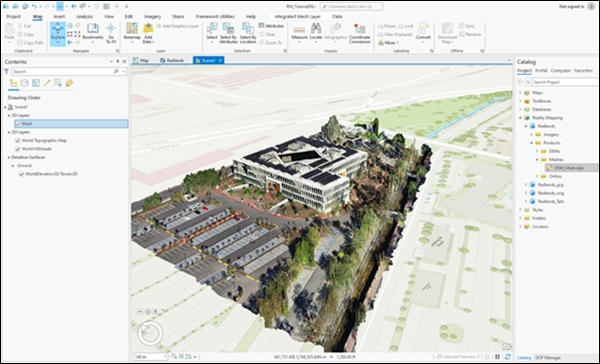

- To visualize the generated DSM mesh product, right-click the 3D_Mesh.slpk file in the Meshes folder, and from the context menu, click Add to New > Local Scene.

Summary

In this tutorial, you created a Reality mapping workspace for drone imagery and used tools on the Reality Mapping tab to apply a photogrammetric adjustment with ground control points. You then used tools in the Product group to generate DSM, true ortho, and DSM mesh products. For more information about Reality mapping, see the following topics:

- Reality mapping in ArcGIS Pro

- Create a Reality mapping workspace for drone imagery

- Adjustment options for Reality mapping drone imagery

- Add ground control points to a Reality mapping workspace

- Generate a DSM using ArcGIS Reality for ArcGIS Pro

- Generate a True Ortho using ArcGIS Reality for ArcGIS Pro

- Generate a DSM mesh using ArcGIS Reality for ArcGIS Pro

The imagery used in this tutorial was acquired and provided by Esri, Inc.