Available with Standard or Advanced license.

Available for an ArcGIS organization with the ArcGIS Reality license.

In ArcGIS Pro, you can use photogrammetry to correct digital aerial imagery collected by a professional mapping camera. This removes geometric distortions caused by the sensor and corrects for terrain displacement. After correcting these effects, you can generate Reality mapping products.

In this tutorial, you will set up a Reality mapping workspace to manage an aerial imagery collection. You will perform a block adjustment and review the results. Then you'll generate point cloud and 3D mesh products.

The photogrammetric solution for aerial imagery is determined by its exterior orientation (EO), which represents a transformation from the ground to the camera, and its interior orientation (IO), which represents a transformation from the camera to the image. The required EO parameters (the perspective center x, y, z coordinates and the omega, phi, and kappa angles) are provided in a frames table. The IO parameters include focal length, pixel size, principal point, and lens distortion. This information is available in the camera calibration report associated with the imagery and must be provided in a camera table.
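To show how the EO and IO parameters work together, the following minimal sketch projects a ground point into image coordinates with the classic photogrammetric collinearity equations. It assumes the common omega-phi-kappa convention in which the ground-to-camera rotation is M = M_kappa x M_phi x M_omega, angles in degrees, image coordinates in millimeters relative to the principal point, and no lens distortion. The function names are hypothetical, and ArcGIS Reality may use different axis and sign conventions internally.

```python
import math


def _matmul3(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def rotation_matrix(omega, phi, kappa):
    """Ground-to-camera rotation M = M_kappa @ M_phi @ M_omega (angles in radians).

    Assumed convention: sequential rotations about x (omega), y (phi), z (kappa).
    """
    co, so = math.cos(omega), math.sin(omega)
    cp, sp = math.cos(phi), math.sin(phi)
    ck, sk = math.cos(kappa), math.sin(kappa)
    m_omega = [[1.0, 0.0, 0.0], [0.0, co, so], [0.0, -so, co]]
    m_phi = [[cp, 0.0, -sp], [0.0, 1.0, 0.0], [sp, 0.0, cp]]
    m_kappa = [[ck, sk, 0.0], [-sk, ck, 0.0], [0.0, 0.0, 1.0]]
    return _matmul3(m_kappa, _matmul3(m_phi, m_omega))


def ground_to_image(ground, center, angles_deg, focal_mm, principal_point_mm=(0.0, 0.0)):
    """Collinearity equations: project ground (X, Y, Z) to image (x, y) in mm.

    EO: perspective center `center` and (omega, phi, kappa) in degrees.
    IO: focal length and principal point; lens distortion is ignored here.
    """
    m = rotation_matrix(*(math.radians(a) for a in angles_deg))
    d = [g - c for g, c in zip(ground, center)]
    # Rotate the (ground - perspective center) vector into the camera frame.
    u, v, w = (sum(m[i][k] * d[k] for k in range(3)) for i in range(3))
    x0, y0 = principal_point_mm
    return x0 - focal_mm * u / w, y0 - focal_mm * v / w


def image_mm_to_pixel(x_mm, y_mm, pixel_size_mm, width_px, height_px):
    """Convert image-plane mm to (column, row), assuming the image center is
    the origin of the mm coordinates and rows increase downward."""
    col = width_px / 2 + x_mm / pixel_size_mm
    row = height_px / 2 - y_mm / pixel_size_mm
    return col, row


if __name__ == "__main__":
    # Hypothetical vertical photo: camera 1000 m above a point 100 m east of nadir.
    x, y = ground_to_image((100.0, 0.0, 0.0), (0.0, 0.0, 1000.0), (0.0, 0.0, 0.0), 100.0)
    print(x, y)
    print(image_mm_to_pixel(x, y, 0.005, 10000, 8000))
```

With a 100 mm focal length and no rotation, the point 100 m from nadir at a 1000 m flying height should land 10 mm from the principal point (100 x 100 / 1000). Lens distortion, which the camera table also carries, would be applied as a correction to these image coordinates and is omitted to keep the sketch short.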

License:

The following licenses are required to complete this tutorial:

- ArcGIS Reality Studio

- ArcGIS Pro 3.1 or later

- ArcGIS Reality for ArcGIS Pro extension setup

Create a Reality mapping workspace

A Reality mapping workspace is an ArcGIS Pro subproject that is dedicated to Reality mapping workflows. It is a container in an ArcGIS Pro project folder that stores the resources and derived files that belong to a single image collection.

A collection of digital aerial images is provided for this tutorial. The tutorial data also contains frame and camera tables.

To create a Reality mapping workspace, complete the following steps:

- Download the tutorial dataset and save it to C:\SampleData\Aerial Imagery.

- Unzip the package in the C:\SampleData\Aerial Imagery directory.

If you save the data to a different location on your computer, update the path in each entry of the Nadir_Oblique_FramesCam.csv file.

- In ArcGIS Pro, create a project using the Map template and sign in to your ArcGIS Online account.

- On the Imagery tab, in the Reality Mapping group, click the New Workspace drop-down menu and choose New Workspace.

- In the Workspace Configuration window, provide a name for the workspace.

- Set Workspace Type to Reality Mapping.

- In the Sensor Data Type drop-down menu, choose Aerial - Digital.

- Set Scenario Type to Oblique.

This setting is recommended when working with a combination of oblique and nadir imagery.

- In the Basemap drop-down menu, choose Topographic.

- Set the Parallel Processing Factor value of the workspace to 90%.

Setting the Parallel Processing Factor value to 90% means that 90% of the total CPU cores will be used to support Reality mapping processing. You can also change the Parallel Processing Factor value after the workspace is created, using Properties in the Workspace group on the Imagery tab.

Setting the Parallel Processing Factor value higher than your system can accommodate will cause parallel processing failures. A common guideline is 2 GB of RAM for each logical processor. For example, on a system with 6 cores, 12 logical processors, and 16 GB of RAM, setting the Parallel Processing Factor value to 100 percent would require a minimum of 24 GB of RAM for the process to run successfully. A more appropriate value for this example would be 50 percent, which would require approximately 12 GB of RAM.

- Accept all the other default values and click Next.

- In the Image Collection window, under Exterior Orientation File / Esri Frames Table, browse to the tutorial data folder on your computer and select the Nadir_Oblique_FramesCam.csv frames table file.

This table, which contains the frames and cameras information, specifies parameters that are used to compute both the IO and EO for the camera and the imagery. In the block adjustment process, these approximate values are refined for greater accuracy.

Ensure that the data paths listed in the raster column of the frames table file match the location of the image files on your computer.

- Under Cameras, click the Import button, browse to the tutorial data folder on your computer, and select the Nadir_Oblique_FramesCam.csv file.

- Ensure that the Spatial Reference and camera model values are correct.

The default projection for the workspace is defined based on the imagery. This projection must match the coordinates used in the frames table, and it determines the spatial reference for the Reality products you create. For this dataset, you’ll use the projection defined in the frames and cameras table: XY – NAD83 2011 StatePlane California V FIPS 0405, VCS NAVD88 (meters).

- Accept the other default values and click Next.

- In the Data Loader Options window, under DEM, click the browse button, browse to the tutorial data folder on your computer, and select the DEM_USGS_1m.tif file.

- Accept the other default values and click Finish.
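If you stored the tutorial data somewhere other than C:\SampleData\Aerial Imagery, the paths in the raster column of the frames table (Nadir_Oblique_FramesCam.csv) must be updated before you create the workspace. The following sketch is one way to do that with Python's standard csv module; it assumes the column header is literally Raster (check the header row of your copy), and the helper names are hypothetical. It writes a corrected copy rather than editing the original, and a second helper lists any image paths that still do not resolve on disk.

```python
import csv
import os


def _has_root(path, root):
    """True if path starts with root (case-insensitive, as on Windows)
    and root ends at a path separator or at the end of the string."""
    if not path.lower().startswith(root.lower()):
        return False
    rest = path[len(root):]
    return rest == "" or rest[0] in "\\/"


def rewrite_raster_paths(in_csv, out_csv, old_root, new_root, column="Raster"):
    """Copy a frames table, swapping old_root for new_root at the start of
    each raster path. Returns the number of rows that were changed."""
    old_root = old_root.rstrip("\\/")
    new_root = new_root.rstrip("\\/")
    with open(in_csv, newline="", encoding="utf-8-sig") as src:
        reader = csv.DictReader(src)
        fieldnames = reader.fieldnames
        rows = list(reader)
    if not fieldnames or column not in fieldnames:
        raise ValueError(f"column {column!r} not found in {in_csv}")

    changed = 0
    for row in rows:
        path = row[column]
        if _has_root(path, old_root):
            row[column] = new_root + path[len(old_root):]
            changed += 1

    with open(out_csv, "w", newline="", encoding="utf-8") as dst:
        writer = csv.DictWriter(dst, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
    return changed


def missing_rasters(csv_path, column="Raster"):
    """Return the raster paths listed in the table that do not exist on disk."""
    with open(csv_path, newline="", encoding="utf-8-sig") as src:
        return [row[column] for row in csv.DictReader(src)
                if not os.path.isfile(row[column])]
```

For example, call rewrite_raster_paths with old_root set to C:\SampleData\Aerial Imagery and new_root set to your data folder, then pass the corrected file to missing_rasters; an empty list means every image listed in the table was found, and you can browse to the corrected file in the Image Collection window.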

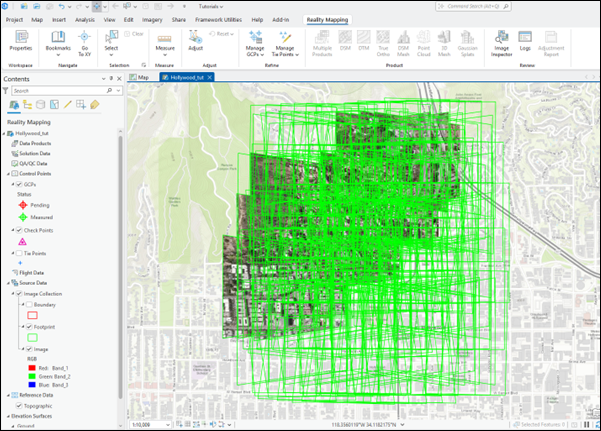

Once you create the workspace, the images and image footprints display. The Reality Mapping category has also been added to the Contents pane. The source imagery data and derived Reality mapping products will be referenced here.

The initial display of imagery in the workspace confirms that all images and necessary metadata were provided to initiate the workspace. The images have not been adjusted, so the alignment is only approximate at this time, and the mosaic may not look correct.
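The Parallel Processing Factor guidance from the workspace configuration steps reduces to simple arithmetic. The sketch below applies the 2 GB per logical processor rule of thumb quoted above; the helper names are hypothetical, and the rule itself is a guideline rather than a hard limit. In Python, os.cpu_count() reports the number of logical processors if you need that input.

```python
import math

# Rule of thumb quoted in the tutorial: about 2 GB of RAM per logical processor.
GB_PER_LOGICAL_PROCESSOR = 2


def required_ram_gb(logical_processors, factor_percent, gb_per_lp=GB_PER_LOGICAL_PROCESSOR):
    """Approximate RAM needed when factor_percent of the logical processors are used."""
    return logical_processors * factor_percent / 100 * gb_per_lp


def max_factor_percent(logical_processors, ram_gb, gb_per_lp=GB_PER_LOGICAL_PROCESSOR):
    """Largest whole-percent factor whose estimated RAM need fits in ram_gb (max 100)."""
    return min(100, math.floor(100 * ram_gb / (logical_processors * gb_per_lp)))


if __name__ == "__main__":
    # The tutorial's example machine: 6 cores, 12 logical processors, 16 GB of RAM.
    for factor in (100, 90, 50):
        print(f"{factor}% -> {required_ram_gb(12, factor):.1f} GB")
    print("ceiling:", max_factor_percent(12, 16), "%")
```

For the example machine, this gives 24 GB at 100 percent and 12 GB at 50 percent, and the largest factor that fits in 16 GB is 66 percent. The tutorial's 50 percent recommendation sits below that ceiling and leaves about 4 GB unallocated.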

Perform a block adjustment

After you create a Reality mapping workspace, the next step is to perform a block adjustment using the tools in the Adjust and Refine groups. The block adjustment will calculate tie points, which are common points in areas of image overlap. The tie points will then be used to calculate the orientation of each image, known as exterior orientation in photogrammetry.
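Because tie points are found in areas where images overlap, the number of image pairs that must be searched drives matching cost. The sketch below contrasts two candidate-pair strategies on hypothetical rectangular footprints: keeping only pairs whose bounding boxes overlap, or comparing every image with every other image. Treating the Full Pairwise Matching option used in the following steps as the second strategy is an assumption based on its name, not a description of how ArcGIS Reality selects pairs.

```python
from itertools import combinations


def boxes_overlap(a, b):
    """True if two (xmin, ymin, xmax, ymax) footprint boxes share any area."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def candidate_pairs(footprints, full_pairwise=False):
    """List image pairs to search for tie points.

    footprints: dict mapping image name -> bounding box.
    full_pairwise=True returns every pair, n * (n - 1) / 2 of them;
    otherwise only pairs whose boxes overlap are kept.
    """
    pairs = combinations(sorted(footprints), 2)
    if full_pairwise:
        return list(pairs)
    return [(a, b) for a, b in pairs if boxes_overlap(footprints[a], footprints[b])]


if __name__ == "__main__":
    # Hypothetical footprints in map units.
    fp = {
        "img_001": (0, 0, 100, 80),
        "img_002": (60, 0, 160, 80),
        "img_003": (300, 0, 400, 80),
    }
    print(candidate_pairs(fp))                      # overlap-based pairs only
    print(candidate_pairs(fp, full_pairwise=True))  # every pair
```

The full pairwise count grows quadratically: 100 images already produce 4,950 pairs, which is one reason exhaustive matching is slower than overlap-based matching.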

To perform a block adjustment, complete the following steps:

- On the Reality Mapping tab, in the Adjust group, click Adjust.

- Ensure that all the Perform Camera Calibration options are checked.

This indicates that the input focal length and lens distortion parameters are approximate and will be calculated during adjustment.

- Expand the Advanced Options section.

- Expand the Tie Point Matching section.

- Check the Full Pairwise Matching box.

This option uses the Scale-Invariant Feature Transform (SIFT) algorithm to improve correlation accuracy when processing imagery with high variability in scale or overlap, or with low-quality initial orientation parameters.

- Set the Tie Point Similarity to High.

- Accept the default values for all the other settings, and click Run.

- After the adjustment is complete, turn on the Tie Points layer in the Contents pane to view the distribution of generated tie points on the map.

You can view the tie point residuals and accuracy reporting in the logs file.

- On the Reality Mapping tab, in the Review group, click Logs View to access this file.

Tie point RMSE is reported in pixels.
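The tutorial does not state exactly how the reported tie point RMSE is computed. The sketch below uses one common definition, the root mean square of the 2D image residuals in pixels, alongside a per-axis variant. The helper names and residual values are hypothetical and are not read from the ArcGIS log file.

```python
import math


def tie_point_rmse(residuals):
    """Combined 2D root mean square error, in pixels.

    residuals: iterable of (dx, dy) image residuals in pixels.
    Computes sqrt(mean(dx**2 + dy**2)).
    """
    residuals = list(residuals)
    if not residuals:
        raise ValueError("no residuals supplied")
    return math.sqrt(sum(dx * dx + dy * dy for dx, dy in residuals) / len(residuals))


def per_axis_rmse(residuals):
    """RMSE computed separately for x and y, in pixels."""
    residuals = list(residuals)
    if not residuals:
        raise ValueError("no residuals supplied")
    n = len(residuals)
    rx = math.sqrt(sum(dx * dx for dx, _ in residuals) / n)
    ry = math.sqrt(sum(dy * dy for _, dy in residuals) / n)
    return rx, ry


if __name__ == "__main__":
    sample = [(0.3, -0.4), (0.6, 0.8), (-0.1, 0.2)]  # hypothetical residuals
    print(tie_point_rmse(sample), per_axis_rmse(sample))
```

The two forms are related: the square of the combined value equals the sum of the squares of the per-axis values. Check which convention a report uses before comparing numbers across tools.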

Review the adjustment results

After performing a block adjustment, review the adjustment results and assess the quality of the adjustment. On the Reality Mapping tab, in the Review group, click Adjustment Report to generate adjustment statistics. The adjustment report provides a record of the adjustment and overall quality measures of the process.

Tip:

Using ground control points (GCPs) to improve the absolute accuracy is a best practice. However, GCPs were not available and are not included in this adjustment.

Generate Reality mapping products

Once the block adjustment is complete, 2D and 3D imagery products can be generated using the tools in the Product group on the Reality Mapping tab. Multiple products can be generated simultaneously using the Reality Mapping Products wizard, or individually by selecting the applicable product tool from the Product group. The types of products that can be generated depend on various factors, including the sensor, flight configuration, and scenario type. The flight configuration of the sample dataset is a combination of oblique and nadir imagery, which is ideal for 3D products such as point clouds and 3D meshes.

Note:

This tutorial describes two approaches to generating derived products. One approach uses the Multiple Products wizard, and the other uses the individual product wizards listed in the Product group. Follow one workflow or the other; completing both is not required for this tutorial.

Generate products using the Reality Mapping Multiple Products wizard

The Reality Mapping Products wizard guides you through the workflow to create one or multiple Reality mapping products in a single process. Based on the flight configuration of the sample dataset, the products that can be generated using the Reality Mapping Multiple Products wizard are a point cloud and 3D mesh. All generated products are stored in product folders of the same name under the Reality Mapping category in the Catalog pane.

To generate products using the Reality Mapping Multiple Products wizard, complete the following steps:

- On the Reality Mapping tab, click the Multiple Products button in the Product group.

The Reality Mapping Products Wizard window appears. In the Product Generation Settings pane, uncheck the 2D check box. In this tutorial, only 3D products are generated.


- Click the Shared Advanced Settings button.

The Advanced Products Settings dialog box appears, where you can define parameters that will impact the Reality mapping products to be generated. For details of the advanced product settings, see Shared Advanced Settings option.

- Ensure that Quality is set to Ultra.

- Ensure that Scenario is set to Oblique to match the image flight configuration.

During initial product generation, the Reality mapping process creates files based on initial product settings. Changing the Quality value after initial product generation adversely impacts processing time and initiates regeneration of previously created files and products.

- Accept the Pixel Size default settings to generate products at source image resolution.

- For Product Boundary, click the Browse button, browse to the tutorial data location, select the Mesh_bdry feature data, and click OK.

It is recommended that you provide a product boundary for the following reasons:

- Define the proper output extent—When you do not define a product boundary, the application automatically defines an extent based on various dataset parameters that may not match the project extent.

- Reduce processing time—If the required product extent is smaller than the image collection extent, defining a product boundary reduces the processing duration and automatically clips the output to the boundary extent.

- For Processing Folder, click the Browse button and navigate to a location on disk with available storage of at least 10 times the total size of the images being processed. For this tutorial, 50 GB or more of available storage is recommended.

- Accept all the other default values and click OK.

The Advanced Products Settings dialog box closes, and you return to the Product Generation Settings pane in the Reality Mapping Products wizard.

- Click Next to go to the 3D Mesh Settings pane, and ensure that the parameter values match the following:

- Format—SLPK

- Click Finish to start the product generation process.

Once product generation is complete, the point cloud and 3D mesh products are added to the Reality Mapping container in the Point Cloud and Meshes folders, respectively. A LAS dataset is added to the Contents pane to manage the point cloud.

- To visualize the generated 3D mesh, right-click the 3D_Mesh.slpk file in the Meshes folder, and click Add to New > Local Scene in the drop-down menu.

- To visualize and activate the generated point cloud, click the LAS dataset in the Contents pane to view it in the 2D map.

- To visualize the generated point cloud in a 3D perspective, right-click the LAS dataset in the Contents pane and add it to a local scene or a global scene.
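The processing folder guideline from the Shared Advanced Settings steps (free space of at least 10 times the total image size) can be checked before you start product generation. The following sketch uses shutil.disk_usage from the Python standard library; the helper names are hypothetical, and the list of image extensions is a guess to adjust to your data.

```python
import os
import shutil


def list_images(folder, extensions=(".tif", ".tiff", ".jpg")):
    """Collect image files under folder (the extension list is an assumption)."""
    found = []
    for root, _dirs, files in os.walk(folder):
        for name in files:
            if name.lower().endswith(extensions):
                found.append(os.path.join(root, name))
    return found


def storage_check(image_paths, processing_folder, factor=10):
    """Compare free space in processing_folder with factor x total image size.

    Returns (required_bytes, free_bytes, has_enough_space).
    """
    total = sum(os.path.getsize(p) for p in image_paths)
    required = total * factor
    free = shutil.disk_usage(processing_folder).free
    return required, free, free >= required
```

For example, pass list_images applied to your tutorial data folder, together with the processing folder you intend to use, to storage_check. If the third value returned is False, choose a processing folder on a drive with more free space.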

You can end the tutorial now or continue to generate derived products using the individual product options in the Product group. If you continue, previously generated products will be overwritten. To maintain previously created products, follow the instructions in the Create a Reality mapping workspace section above to create a new Reality mapping workspace before proceeding.

Generate a point cloud

A point cloud is a model of the project area defined by high-density, RGB colorized 3D LAS points that are extracted from overlapping images in the block or project.
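Providing a product boundary such as Mesh_bdry clips the output to the boundary extent. For a point cloud, clipping conceptually means keeping only the points whose XY location falls inside the boundary polygon. The sketch below illustrates that idea with the generic even-odd ray-casting test on plain coordinate tuples. It is not how ArcGIS Reality implements clipping, and the boundary vertices and points are hypothetical rather than read from the tutorial data.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd ray casting: True if (x, y) lies inside polygon.

    polygon: list of (x, y) vertices; the closing edge back to the
    first vertex is implied.
    """
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed;
        # this also skips horizontal edges, so the division below is safe.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def clip_points(points, polygon):
    """Keep (x, y, z) points whose XY location falls inside polygon."""
    return [p for p in points if point_in_polygon(p[0], p[1], polygon)]


if __name__ == "__main__":
    boundary = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]  # hypothetical
    pts = [(5.0, 5.0, 102.3), (15.0, 5.0, 98.1), (-1.0, 5.0, 99.0)]
    print(clip_points(pts, boundary))
```

In this example, only the first point is kept. The same test handles concave boundaries, which is why it is a common choice for this kind of illustration.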

To generate a point cloud using the Reality Mapping Products wizard, complete the following steps:

- On the Reality Mapping tab, click the 3D Point Cloud button in the Product group.

The Reality Mapping Products Wizard window appears.

- Click Shared Advanced Settings.

The Advanced Product Settings dialog box appears, where you can define parameters that will impact the Reality mapping products to be generated. For details of the advanced product settings, see Shared Advanced Settings option.

- Complete steps 3 through 8 (from setting Quality to Ultra through clicking OK to close the dialog box) in the Generate products using the Reality Mapping Multiple Products wizard section above.

- Click Finish to start the point cloud generation process.

Once product generation is complete, the point cloud product is added to the Catalog pane and the Reality Mapping container in the Point Cloud folder, and a LAS dataset is added to the Contents pane to manage the point cloud.

- To visualize and activate the generated point cloud, click the LAS dataset in the Contents pane to view it in the 2D map.

- To visualize the generated point cloud in a 3D perspective, right-click the LAS dataset in the Contents pane and add it to a local scene or a global scene.

Generate a 3D mesh

A 3D mesh is a 3D textured model of the project area in which the ground and above-ground feature facades are densely and accurately reconstructed. The 3D mesh can be viewed from any angle to get a realistic and accurate depiction of the project area.

To generate a 3D mesh using the Reality Mapping Products wizard, complete the following steps:

- On the Reality Mapping tab, click the 3D Mesh button in the Product group.

The Reality Mapping Products Wizard window appears.

- Click Shared Advanced Settings.

The Advanced Product Settings dialog box appears, where you can define parameters that will impact the Reality mapping products that you generate. For a detailed description of the advanced product settings, see Shared Advanced Settings option.

- Complete steps 3 through 8 (from setting Quality to Ultra through clicking OK to close the dialog box) in the Generate products using the Reality Mapping Multiple Products wizard section above.

- Click Next to go to the 3D Mesh Settings pane, and ensure that the parameter values match the following:

- Format—SLPK

- Click Finish to start the 3D mesh generation process.

Once processing is complete, the 3D mesh product is added to the Meshes folder in the Reality Mapping container in the Catalog pane.

- To visualize the generated 3D mesh product, right-click the 3D_Mesh.slpk file in the Meshes folder, and from the drop-down menu, click Add to New > Local Scene.

Summary

In this tutorial, you created a Reality mapping workspace for oblique digital aerial imagery and used tools on the Reality Mapping tab to apply a photogrammetric adjustment. You used tools in the Product group to generate point cloud and 3D mesh products. For more information about Reality mapping, see the following topics:

- Reality mapping in ArcGIS Pro

- Create a Reality mapping workspace for aerial imagery

- Adjustment options for Reality mapping digital aerial imagery

- Add ground control points to a Reality mapping workspace

- Generate multiple products using ArcGIS Reality for ArcGIS Pro

- Generate a point cloud using ArcGIS Reality for ArcGIS Pro

- Generate a 3D mesh using ArcGIS Reality for ArcGIS Pro

The imagery used in this tutorial was acquired and provided by Esri, Inc.

Related topics

- Reality mapping in ArcGIS Pro

- Perform a Reality mapping block adjustment

- Adjustment options for Reality mapping satellite imagery

- Manage tie points in a Reality mapping workspace

- ArcGIS Reality mapping product generation

- Generate a DSM using ArcGIS Reality for ArcGIS Pro

- Create elevation data using the Ortho mapping DEMs wizard

- Introduction to the ArcGIS Reality for ArcGIS Pro extension