When a training process is completed using the Train Point Cloud Classification Model tool, you'll want to evaluate the results. The goal is a model that accurately classifies the kind of data it was designed for. That means performing well not only on the data used for training but also on data the model was not exposed to during training.

The Train Point Cloud Classification Model tool outputs several things to help you perform this evaluation.

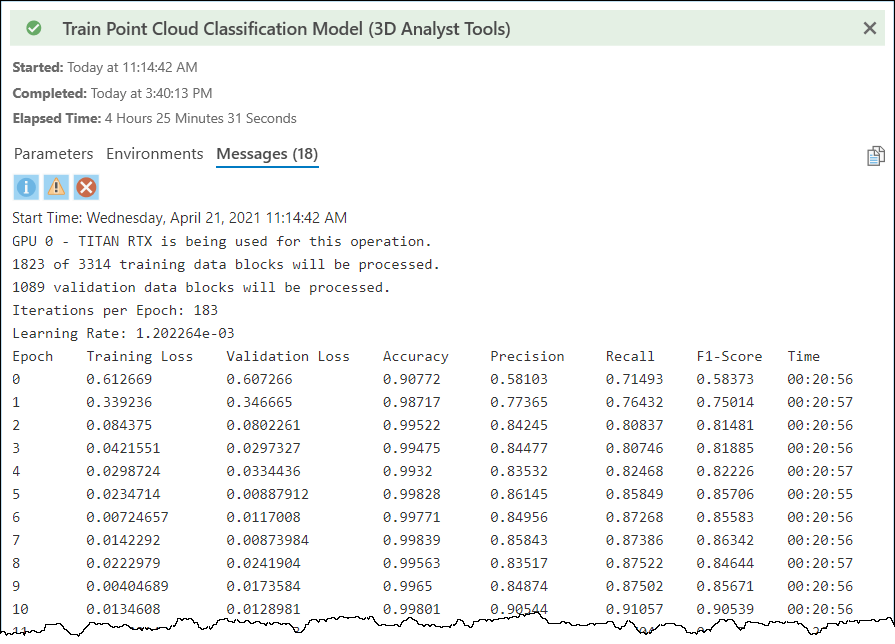

While it runs, the tool also reports summary information for each epoch, and you can see the metrics in the tool's messages. The time taken per epoch is reported as well, which helps you estimate how long the process will take if all epochs are executed. The Precision, Recall, and F1-Score values are macro-averages: they are averaged across all classes using an equal weight per class.

To interpret these metrics, you'll need some understanding of their meaning. Introductory information is provided below, but it is recommended that you learn more about these statistics, and how to use and interpret them, from the many resources available on the topic.

Accuracy—The percentage of correctly predicted points relative to the total. Note that this is not a useful metric when points for important classes exist in small numbers relative to other classes, because you can get a high percentage of correct predictions overall while misclassifying a small number of important points.

Precision—The fraction of correct predictions for a class with respect to all the points predicted to be in that class, both correct and incorrect.

Recall—The fraction of correct predictions for a class with respect to all the points that truly belong in the class.

F1-Score—The harmonic mean of precision and recall.
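The definitions above can be sketched in a few lines of Python. This is a minimal illustration, not the tool's implementation: the function name `classification_metrics` and the sample labels are hypothetical, and the class codes 2 (ground) and 14 (wire conductor) are used only as an example of a common class next to a rare one.

```python
from collections import Counter

def classification_metrics(y_true, y_pred):
    """Return overall accuracy, per-class metrics, and macro-averages."""
    classes = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1      # correct prediction for this class
        else:
            fp[pred] += 1       # predicted class gains a false positive
            fn[truth] += 1      # true class gains a false negative

    per_class = {}
    for c in classes:
        predicted = tp[c] + fp[c]   # all points predicted as class c
        actual = tp[c] + fn[c]      # all points that truly belong to class c
        precision = tp[c] / predicted if predicted else 0.0
        recall = tp[c] / actual if actual else 0.0
        total = precision + recall
        f1 = 2 * precision * recall / total if total else 0.0
        per_class[c] = {"precision": precision, "recall": recall, "f1": f1}

    accuracy = sum(tp.values()) / len(y_true)
    # Macro-average: every class counts equally, however few points it has.
    macro = {
        key: sum(m[key] for m in per_class.values()) / len(classes)
        for key in ("precision", "recall", "f1")
    }
    return accuracy, per_class, macro

# Hypothetical data: 98 ground points, 2 wire points, everything predicted as ground.
y_true = [2] * 98 + [14] * 2
y_pred = [2] * 100
accuracy, per_class, macro = classification_metrics(y_true, y_pred)
print(accuracy, per_class[14]["recall"], macro["recall"])
```

In this made-up case, accuracy is high even though every wire point is missed, while the recall for class 14 and the macro-averaged recall expose the problem.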

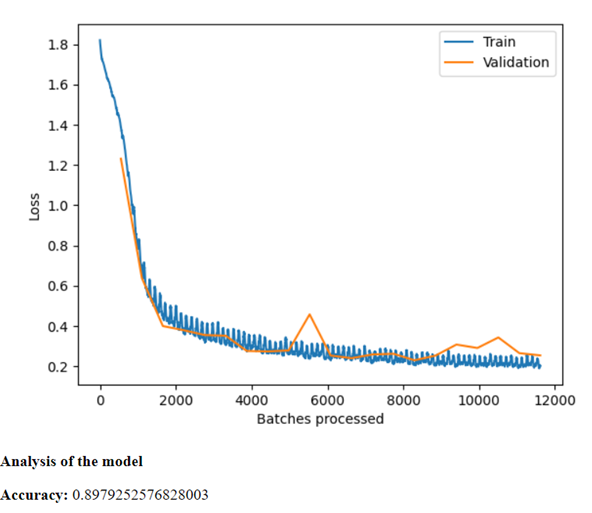

A graph of learning curves is also output by the training tool. It can be viewed in the tool messages and is also written to a file named model_metrics.html in the output model folder. The graph includes loss curves for both training and validation. Lower loss is better, so you want to see curves that decrease over time and level off. Training loss shows how well the model learned, and validation loss shows how well that learning generalizes.

You can check for under- and overfitting of the model by reviewing the loss curves. An underfit model is either unable to learn the training dataset or needs more training. Underfit models can be identified by a training loss that is flat or noisy from start to finish, or that is still decreasing at the end without leveling off. Overfit models have learned the training data too well and won't generalize to do a good job with other data. You can identify an overfit model by a validation loss that decreases to a point but then starts to rise, or levels off while the training loss continues to fall. A good fit is generally identified by training and validation loss that both decrease to a point of stability with a minimal gap between the two final loss values.
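The visual checks described above can be roughly approximated in code if you have the per-epoch loss values as two lists. The sketch below is a heuristic under stated assumptions, not something the tool provides: the function name `diagnose_fit`, the `window` size, and the `tol` threshold are all hypothetical choices, and the loss values are assumed to be positive.

```python
def diagnose_fit(train_loss, val_loss, window=3, tol=0.1):
    """Rough label for a pair of per-epoch loss curves.

    window and tol are illustrative assumptions; tune them for your own curves.
    """
    n = len(train_loss)
    if n != len(val_loss) or n < 2 * window:
        raise ValueError("need two equal-length curves with at least 2 * window epochs")

    def mean(values):
        return sum(values) / len(values)

    start_train = mean(train_loss[:window])             # early training loss
    prev_train = mean(train_loss[-2 * window:-window])  # just before the final window
    end_train = mean(train_loss[-window:])              # final training loss
    end_val = mean(val_loss[-window:])                  # final validation loss
    best_val = min(val_loss)
    best_epoch = val_loss.index(best_val)

    # Training loss barely moved from start to finish.
    if start_train - end_train < tol * start_train:
        return "underfit: training loss is flat"
    # Validation loss bottomed out before the final window and ended clearly higher.
    if best_epoch < n - window and end_val > best_val * (1 + tol):
        return "overfit: validation loss rose after its minimum"
    # Training loss dropped noticeably in the final window, so it has not leveled off.
    if prev_train - end_train > tol * prev_train:
        return "underfit: training loss is still decreasing"
    # Both curves are stable; compare the final gap to the initial loss scale.
    if end_val - end_train <= tol * start_train:
        return "good fit: both curves are stable with a small gap"
    return "possible overfit: large gap between validation and training loss"

# Made-up curves for illustration only.
print(diagnose_fit([1.0, 0.8, 0.6, 0.45, 0.35, 0.25, 0.2, 0.15],
                   [1.1, 0.9, 0.75, 0.7, 0.75, 0.85, 0.95, 1.05]))
```

A heuristic like this is only a first pass; noisy curves can trip the thresholds, so the graph itself remains the better guide.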

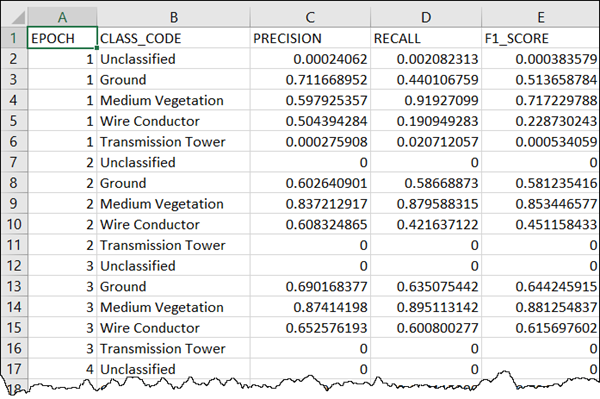

Another output is a *.csv text file called model_name_Statistics.csv that is placed in the output model folder. It contains Precision, Recall, and F1-Score values for each class per epoch.
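Because the statistics file is plain CSV, it can be summarized with a short script, for example to find the epoch with the highest macro-averaged F1-Score. The sketch below assumes a layout with one row per class per epoch and columns named `Epoch`, `Class`, `Precision`, `Recall`, and `F1_Score`. That layout, the column names, and the sample values are all assumptions made for illustration, so check the header of your own file and adjust accordingly.

```python
import csv
import io
from collections import defaultdict

# Made-up sample rows in an ASSUMED layout; the real file may use
# different column names or a different arrangement.
SAMPLE = """Epoch,Class,Precision,Recall,F1_Score
0,2,0.90,0.95,0.92
0,14,0.40,0.20,0.27
1,2,0.93,0.96,0.94
1,14,0.70,0.55,0.62
"""

def macro_f1_by_epoch(csv_file, epoch_col="Epoch", f1_col="F1_Score"):
    """Average the per-class F1 values of each epoch with equal class weight."""
    scores = defaultdict(list)
    for row in csv.DictReader(csv_file):
        scores[int(row[epoch_col])].append(float(row[f1_col]))
    return {epoch: sum(values) / len(values) for epoch, values in scores.items()}

macro_f1 = macro_f1_by_epoch(io.StringIO(SAMPLE))
best_epoch = max(macro_f1, key=macro_f1.get)
print(best_epoch, macro_f1[best_epoch])
```

To run this against a real file, replace `io.StringIO(SAMPLE)` with an open file handle, for example `open(path, newline="")`, where `path` points to the statistics file in your output model folder.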

In addition to reviewing metrics and learning curves, you can try using the resulting model on data that's different from both your training and validation data. Review the results by examining the point cloud in a 3D scene with points symbolized by class code.