| Label | Explanation | Data Type |
| --- | --- | --- |
| Analysis Result Features | The feature class containing the training output results from the Forest-based and Boosted Classification and Regression, Generalized Linear Regression, or Presence-only Prediction tool. The prediction training results will be evaluated with cross validation. | Feature Layer |
| Output Features | The output features that will contain the original independent variables, dependent variable, and additional fields summarizing the cross-validation results. | Feature Class |
| Output Validation Table | The output table that will contain evaluation metrics for each cross-validation run. | Table |
| Analysis Input Features | The input features that will be used in the predictive analysis that produced the analysis result features. | Feature Layer |
| Evaluation Type (Optional) | Specifies the method that will be used to split the Analysis Result Features parameter value into k groups. | String |
| Number of Groups (Optional) | The number of groups that the Analysis Result Features parameter value will be split into. The number of groups must be larger than 1. The default is 10. | Long |
| Balancing Type (Optional) | Specifies the method that will be used to balance the number of samples of each dependent variable category in the training group. This parameter is active if the original model predicted a categorical variable. | String |

Summary

Evaluates predictive model performance using cross-validation. This tool generates validation metrics for models created using the Forest-based and Boosted Classification and Regression, Generalized Linear Regression, and Presence-only Prediction tools. It allows you to specify the evaluation type (for example, K-fold or spatial K-fold), number of groups, and rare event balancing to ensure robust and unbiased model assessment.

Learn more about how Evaluate Predictions with Cross-validation works

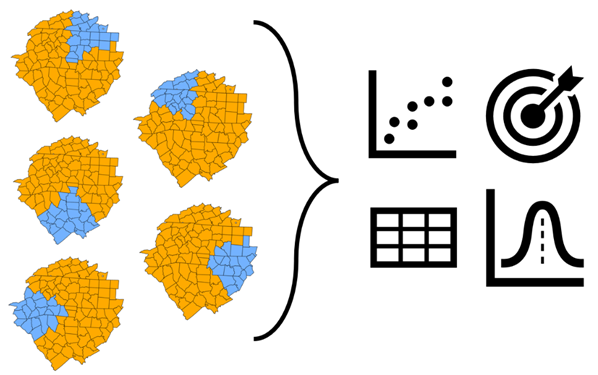

Illustration

Usage

The Evaluation Type parameter has the following options for splitting features into groups:

- Spatial k-fold—Use spatial cross validation to evaluate how a model will predict to features that are geographically outside of the training data’s study area.

- Random k-fold—Use random cross validation to evaluate how a model will predict to features that are geographically within the training data’s study area.
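The difference between the two options can be pictured with a small sketch. It is an illustration only and does not reproduce the tool's grouping algorithm: the function names, the made-up point coordinates, and the use of west-to-east strips as a stand-in for spatial grouping are all assumptions.

```python
import random


def random_kfold(n_features, k, seed=0):
    """Assign each feature index to one of k groups at random."""
    indices = list(range(n_features))
    random.Random(seed).shuffle(indices)
    groups = [0] * n_features
    for position, index in enumerate(indices):
        groups[index] = position % k
    return groups


def spatial_kfold_by_x(points, k):
    """Assign features to k contiguous west-to-east strips of near-equal size.

    A crude stand-in for spatial grouping, NOT the tool's actual method.
    """
    order = sorted(range(len(points)), key=lambda i: points[i][0])
    groups = [0] * len(points)
    for position, index in enumerate(order):
        groups[index] = position * k // len(points)
    return groups


# 20 made-up points on a 10 x 2 grid (hypothetical data).
points = [(x, y) for x in range(10) for y in range(2)]
random_groups = random_kfold(len(points), k=5)
spatial_groups = spatial_kfold_by_x(points, k=5)
```

In the random assignment, every group is scattered across the whole study area, so each held-out group is surrounded by training features. In the strip assignment, each held-out group occupies its own region, so the model is scored on an area it did not see during training.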

When using classification to predict rare events or unbalanced categories, use the Balancing Type parameter to balance the number of samples within each categorical level. Test the different balancing methods in this tool, then apply the best-performing method to the full training dataset using the Prepare Data for Prediction tool before predicting.

Cross-validation is not used to generate a single model or model file. It generates accuracy metrics that can be used to assess how well a model and its parameters predict data that was excluded when the model was trained.
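As a rough sketch of this idea, the loop below holds out each group once, fits a placeholder model on the remaining groups, and keeps only the per-run metric. The mean predictor, the RMSE metric, the function name, and the sample values are assumptions chosen for brevity; they are not the models or metrics the tool reports.

```python
import math
import random


def cross_validate(values, k, seed=0):
    """Return one RMSE per group; each group is held out exactly once."""
    indices = list(range(len(values)))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    metrics = []
    for held_out in folds:
        held = set(held_out)
        training = [values[i] for i in indices if i not in held]
        # Placeholder "model": predict the mean of the training values.
        prediction = sum(training) / len(training)
        squared_errors = [(values[i] - prediction) ** 2 for i in held_out]
        metrics.append(math.sqrt(sum(squared_errors) / len(squared_errors)))
    return metrics


values = [float(v) for v in range(1, 21)]  # made-up dependent variable
rmse_per_run = cross_validate(values, k=5)
```

The function returns a list of metrics, one per run, and discards every fitted model, which mirrors the point that cross-validation assesses a model configuration rather than producing a model to predict with.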

This tool will not accept analysis features that were first oversampled in the Prepare Data for Prediction tool, that is, balanced using random oversampling or SMOTE. Oversampled data cannot be used in a validation set because doing so causes data leakage.
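The leakage can be shown with a toy example. The sketch below assumes a tiny made-up dataset with one rare feature, a simple random oversampler, and an interleaved split used as a deterministic stand-in for group assignment; none of these reflect the tool's internals.

```python
import random


def oversample_minority(rows, rng):
    """Randomly duplicate rows until every label has as many rows as the largest one."""
    by_label = {}
    for row in rows:
        by_label.setdefault(row["label"], []).append(row)
    target = max(len(group) for group in by_label.values())
    balanced = []
    for group in by_label.values():
        extra = [rng.choice(group) for _ in range(target - len(group))]
        balanced.extend(group + extra)
    return balanced


def interleaved_split(rows):
    """Deterministic stand-in for a split: even positions train, odd positions validate."""
    return rows[0::2], rows[1::2]


def shared_ids(training, validation):
    """Feature ids that appear on both sides of the split."""
    return {r["id"] for r in training} & {r["id"] for r in validation}


rng = random.Random(0)
# Hypothetical data: feature 0 is the single rare event, features 1-5 are common.
rows = [{"id": 0, "label": 1}] + [{"id": i, "label": 0} for i in range(1, 6)]

# Oversample first, then split: copies of feature 0 land on both sides.
leaky_train, leaky_validation = interleaved_split(oversample_minority(rows, rng))
leaked = shared_ids(leaky_train, leaky_validation)

# Split first, then oversample only the training side: no overlap.
train, validation = interleaved_split(rows)
clean = shared_ids(oversample_minority(train, rng), validation)
```

When oversampling happens before the split, the model is validated on copies of features it was trained on, which inflates the metrics. Balancing only the training group after the split keeps the validation group untouched.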

Parameters from the original prediction tool are honored. However, for analysis results from the Forest-based and Boosted Classification and Regression tool, the amount of data held out for validation by that tool is set to 0. If the Optimize Parameters parameter was used in the Forest-based and Boosted Classification and Regression tool, the optimal parameters found in the original tool run are used when running cross validation.

The tool creates the following outputs:

- Output Features—Records the training dataset and the training and predicting results of each feature in the training dataset.

- Output Validation Table—Records the evaluation metrics for each validation run.

Parameters

arcpy.stats.CrossValidate(analysis_result_features, out_features, out_table, analysis_input_features, {evaluation_type}, {num_groups}, {balancing_type})

| Name | Explanation | Data Type |
| --- | --- | --- |
| analysis_result_features | The feature class containing the training output results from the Forest-based and Boosted Classification and Regression, Generalized Linear Regression, or Presence-only Prediction tool. The prediction training results will be evaluated with cross validation. | Feature Layer |
| out_features | The output features that will contain the original independent variables, dependent variable, and additional fields summarizing the cross-validation results. | Feature Class |
| out_table | The output table that will contain evaluation metrics for each cross-validation run. | Table |
| analysis_input_features | The input features that will be used in the predictive analysis that produced the analysis result features. | Feature Layer |
| evaluation_type (Optional) | Specifies the method that will be used to split the analysis_result_features parameter value into k groups. | String |
| num_groups (Optional) | The number of groups that the analysis_result_features parameter value will be split into. The number of groups must be larger than 1. The default is 10. | Long |
| balancing_type (Optional) | Specifies the method that will be used to balance the number of samples of each dependent variable category in the training group. This parameter is active if the original model predicted a categorical variable. | String |
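Because num_groups must be larger than 1, it can be convenient to check the value before starting a long run. The sketch below is one possible way to do that. Both helper functions are hypothetical and not part of arcpy, and the dataset names are the placeholders used in the code samples on this page.

```python
def build_cross_validate_args(analysis_result_features, out_features, out_table,
                              analysis_input_features, num_groups=10, **optional):
    """Collect keyword arguments for arcpy.stats.CrossValidate (hypothetical helper)."""
    if not isinstance(num_groups, int) or num_groups <= 1:
        raise ValueError("num_groups must be an integer larger than 1")
    args = {
        "analysis_result_features": analysis_result_features,
        "out_features": out_features,
        "out_table": out_table,
        "analysis_input_features": analysis_input_features,
        "num_groups": num_groups,
    }
    # Optional parameters such as evaluation_type or balancing_type pass through unchanged.
    args.update(optional)
    return args


def run_cross_validation(**kwargs):
    """Validate the arguments, then call the tool (requires an ArcGIS Pro Python environment)."""
    import arcpy  # imported lazily so the check above also works without arcpy
    return arcpy.stats.CrossValidate(**build_cross_validate_args(**kwargs))


args = build_cross_validate_args(
    "in_analysis_result_features",
    "out_feature",
    "out_table",
    "analyis_in_feature",
    evaluation_type="RANDOM_KFOLD",
)
```

The helper only enforces the one constraint stated in the parameter table; it does not check evaluation_type or balancing_type values, which are left to the tool itself.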

Code sample

The following Python window script demonstrates how to use the CrossValidate function.

    # Evaluate a predictive model with cross validation
    import arcpy

    arcpy.env.workspace = r"c:\data\project_data.gdb"
    arcpy.stats.CrossValidate(
        analysis_result_features=r"in_analysis_result_features",
        out_features=r"out_feature",
        out_table=r"out_table",
        analysis_input_features=r"analyis_in_feature",
        evaluation_type="RANDOM_KFOLD",
        num_groups=10,
        balancing_type="NONE"
    )

The following stand-alone script demonstrates how to use the CrossValidate function.

    # Evaluate a predictive model with cross validation
    import arcpy

    # Set the current workspace.
    arcpy.env.workspace = r"c:\data\project_data.gdb"

    # Run tool
    arcpy.stats.CrossValidate(
        analysis_result_features=r"in_analysis_result_features",
        out_features=r"out_feature",
        out_table=r"out_table",
        analysis_input_features=r"analyis_in_feature",
        evaluation_type="RANDOM_KFOLD",
        num_groups=10,
        balancing_type="NONE"
    )

Licensing information

- Basic: Limited

- Standard: Limited

- Advanced: Limited