The Prepare Data for Prediction tool prepares input features for building predictive models by splitting them into training and test subsets. The tool extracts information from explanatory variables, explanatory distance features, and explanatory rasters, and then performs the train-test split. It also allows you to resample the original data to account for imbalances. Balancing the data can improve model performance when predicting rare events.

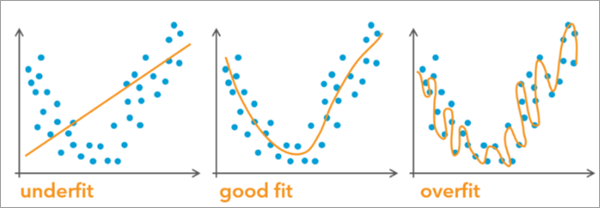

The objective in predictive modeling is to capture as many of the underlying patterns as possible while ensuring that the model generalizes effectively to new data. Predictive models learn from input data, which is called training data. When building a model and training it on this data, the goal is a general fit that captures the underlying patterns in the training data while maintaining good prediction performance on new, unseen data. Replicating the training data too closely leads to overfitting, while a model that is excessively general underfits and misses key patterns in the data.

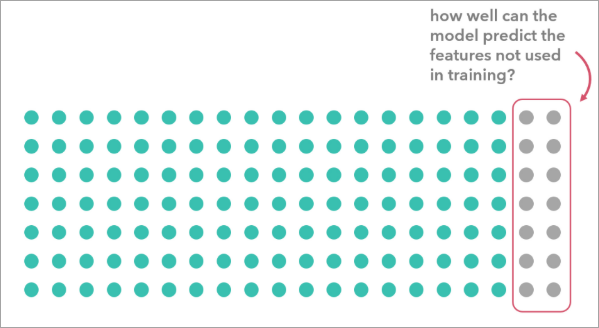

When developing a predictive model, we want to ensure that it performs well on unseen data (data that was not used to train the model). Achieving a good fit includes evaluating the model against reserved data in which the true values of the predicted variable are known but were not used to train the model. This allows us to assess the model's performance on unknown data using various metrics. The reserved data containing these true values is commonly referred to as test data or validation data. Typically, the test data is separated from the training data and reserved specifically for model evaluation. The Prepare Data for Prediction tool facilitates splitting input features into training and test subsets for model training and evaluation.

Splitting the data

Splitting data into training and testing data subsets is recommended when training and evaluating predictive models.

The Splitting Type parameter has two options to split the data:

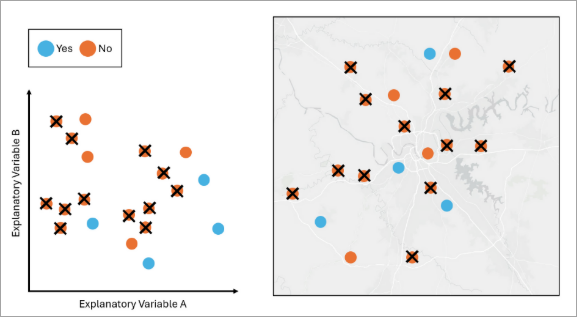

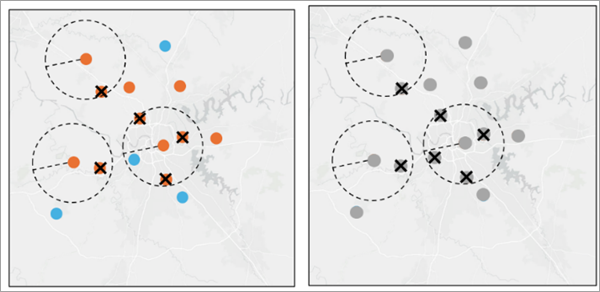

- Random Split—A testing subset is selected randomly and therefore is dispersed spatially throughout the study area.

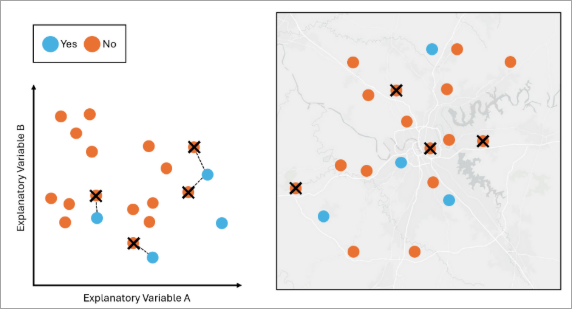

- Spatial Split—A spatial testing subset is spatially contiguous and is separate from the training subset. The spatial split is generated by randomly selecting a feature and identifying its K nearest neighbors. The benefit of using a spatial testing subset is that the testing data will emulate a future prediction dataset that is not in the same study area as the training data.
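The difference between the two splitting types can be illustrated with a short Python sketch. The helpers `random_split` and `spatial_split` are hypothetical and are not part of the tool or arcpy. The sketch assumes that the test subset size comes from a test fraction, and that the spatial split takes the randomly selected feature plus its nearest neighbors by straight-line distance until that size is reached. The tool's exact procedure may differ.

```python
import math
import random

# Hypothetical helpers for illustration only; they are not part of the tool or arcpy.
def random_split(points, test_fraction, seed=None):
    """Random split: test features are drawn at random, so they are scattered."""
    rng = random.Random(seed)
    n_test = round(len(points) * test_fraction)
    test = set(rng.sample(range(len(points)), n_test))
    train = [i for i in range(len(points)) if i not in test]
    return train, sorted(test)

def spatial_split(points, test_fraction, seed=None):
    """Spatial split: pick a random feature, then take it and its nearest
    neighbors (by map distance) as one contiguous test subset."""
    rng = random.Random(seed)
    n_test = round(len(points) * test_fraction)
    center = points[rng.randrange(len(points))]
    # The chosen feature is at distance 0, so it sorts at the front.
    by_distance = sorted(range(len(points)), key=lambda i: math.dist(points[i], center))
    test = set(by_distance[:n_test])
    train = [i for i in range(len(points)) if i not in test]
    return train, sorted(test)

# 100 hypothetical features on a 10 x 10 grid, with 10 percent held out for testing
grid = [(x, y) for x in range(10) for y in range(10)]
train, test = spatial_split(grid, 0.10, seed=1)
print(len(train), len(test))  # 90 10
```

The spatial test subset forms one compact patch of the grid. A random split of the same size is scattered across it.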

Data leakage

It is important to choose your training data carefully to avoid data leakage. Data leakage occurs when the training data contains information that the model won’t have access to when making future predictions. This can lead to a significant overestimation of the model's predictive capabilities. For example, suppose you train a model that uses afternoon airline delays to predict morning airline delays on the same day. To predict for a new day, you would have to wait until the afternoon to predict the morning delays, and by then those delays would already have happened.

However, data leakage can also be more subtle. For example, neighboring census tracts are likely to be similar due to spatial autocorrelation. When a model learns from one census tract and is tested on its neighbor, it will likely perform reasonably well. However, when predicting to census tracts in a different state, the model's performance may decline significantly. This is because the test data shared information with nearby training data, while census tracts in the other state share no such information with the training data. To mitigate data leakage due to spatial proximity, set the Splitting Type parameter to Spatial Split. You can create a spatial train-test split before training using the Prepare Data for Prediction tool or evaluate various spatial splits with the Evaluate Predictions with Cross-validation tool.

Working with imbalanced data

Imbalanced data refers to a dataset in which the distribution of values is skewed or disproportionate. In classification tasks, imbalanced data occurs when one class (the minority class) has significantly fewer features than the other classes (the nonminority classes). This imbalance can make it difficult to train machine learning models effectively. For example, in a binary classification problem that predicts whether a wildfire will occur, if 99 percent of the features indicate no wildfire (majority class) and only 1 percent indicate a wildfire (minority class), the data is imbalanced. In the model's results, this shows up as low sensitivity for the rarer categories, meaning that the model fails to correctly identify many of the features that belong to them. Accurately recognizing rare categories is often crucial. For instance, if you're predicting which counties will have a rare disease or identifying individuals committing fraud, the rare cases are usually the most important ones for addressing the issue at hand. If the model cannot effectively learn the patterns in all classes, it may generalize poorly to new data and be less effective.
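A quick calculation shows why overall accuracy can hide this problem. It uses hypothetical wildfire labels that match the 99 percent to 1 percent example. A naive model that always predicts the majority class scores 99 percent accuracy but has a sensitivity of 0 for the wildfire class. The data and the helper functions are illustrative only.

```python
# Hypothetical labels: 990 "no fire" features and 10 "fire" features (1 percent)
y_true = ["no fire"] * 990 + ["fire"] * 10
# A naive model that always predicts the majority class
y_pred = ["no fire"] * len(y_true)

def accuracy(y_true, y_pred):
    """Share of all features that were predicted correctly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def sensitivity(y_true, y_pred, positive):
    """Share of features truly in `positive` that the model also labels `positive`."""
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == positive]
    return sum(p == positive for p in preds_for_positives) / len(preds_for_positives)

print(f"accuracy = {accuracy(y_true, y_pred):.2f}")               # accuracy = 0.99
print(f"sensitivity = {sensitivity(y_true, y_pred, 'fire'):.2f}")  # sensitivity = 0.00
```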

In a spatial context, imbalanced data may result from sampling bias. Sampling bias can produce training samples with clear spatial clusters that do not accurately represent the entire population. For example, data collection surveys often focus on areas near roads, paths, and other easily accessible locations. This introduces inaccuracies into the model and can lead to biased conclusions. This tool offers several balancing methods that resample the data to mitigate these issues.

Balancing methods

The Balancing Type parameter balances the imbalanced Variable to Predict parameter value or reduces the spatial bias of the Input Features parameter value.

Note:

If the Splitting Type parameter is set to Random Split or Spatial Split, the balancing method is applied only to the training subset in the output features. This ensures that the test features remain in their original, unaltered form for validation, which helps prevent data leakage.
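The order of operations in this note can be sketched in Python. This is a minimal illustration that assumes a random split followed by random oversampling of the training subset. The helper `split_then_oversample` is hypothetical and is not the tool's implementation. Because the test subset is drawn before any duplication, it keeps whatever class mix the random draw produced and never contains a duplicated feature.

```python
import random
from collections import Counter

def split_then_oversample(labels, test_fraction, seed=None):
    """Hypothetical helper: split first, then balance only the training subset."""
    rng = random.Random(seed)
    order = list(range(len(labels)))
    rng.shuffle(order)
    n_test = round(len(labels) * test_fraction)
    test, train = sorted(order[:n_test]), order[n_test:]
    # Group training features by class, then duplicate random members of the
    # smaller classes until every class matches the largest one.
    by_class = {}
    for i in train:
        by_class.setdefault(labels[i], []).append(i)
    target = max(len(members) for members in by_class.values())
    balanced = []
    for members in by_class.values():
        balanced += members + [rng.choice(members) for _ in range(target - len(members))]
    return balanced, test

labels = ["no fire"] * 90 + ["fire"] * 10
train, test = split_then_oversample(labels, 0.2, seed=42)
print(Counter(labels[i] for i in train))  # equal counts for both classes
print(Counter(labels[i] for i in test))   # imbalanced mix, untouched by balancing
```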

The Balancing Type parameter supports the following options to help you prepare the training data:

- Random Undersampling—Random undersampling is a technique used to balance imbalanced data by randomly removing features from the nonminority classes until all classes have an equal number of features.

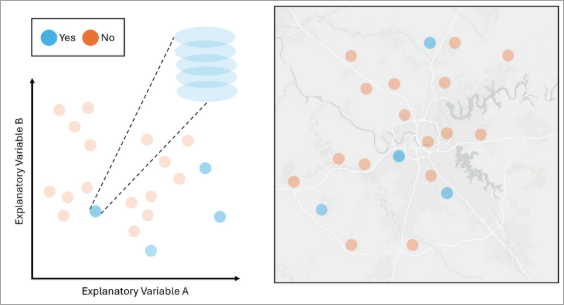

The features in blue are in the minority class, and the features in orange are in the nonminority class. If we apply Random Undersampling to the data, the tool will randomly remove orange features until the number of orange features matches the number of blue features.

- Tomek Undersampling—Tomek’s link undersampling is a technique used to balance imbalanced data by removing features from the nonminority classes that are close to the minority class in the attribute space. The purpose of this option is to improve the separation between classes and create a clear decision boundary for a tree-based model such as Forest-based and Boosted Classification and Regression. This option does not guarantee that all classes have an equal number of features.

The features in blue are in the minority class, and the features in orange are in the nonminority class. In the variable space, any pair of features from different classes that are each other’s nearest neighbors forms a Tomek’s link. If we apply Tomek’s Undersampling to the data, the tool will remove an orange feature if it has a Tomek’s link with a blue feature.

- Spatial Thinning—Spatial thinning is a technique to reduce the effect of sampling bias on the model by enforcing a minimum specified spatial separation between features.

When a categorical variable is selected as the variable to predict, the spatial thinning is applied to each group independently to ensure a balanced representation within each category; otherwise, it will be implemented across the entire training dataset regardless of attribute values.

Any features that fall within the designated buffer distance will be removed.

- K-medoids Undersampling—K-medoids undersampling is a technique used to balance imbalanced data by keeping only a number of representative features in the nonminority class so that all classes have an equal number of features. If we apply K-medoids Undersampling to the data, the tool will keep only the K features from the nonminority class that are medoids in the variable space. K-medoids is used instead of another clustering algorithm because it ensures that each cluster is represented by a central, preexisting feature.

K is equal to the number of features in the minority class, which is 4 in this example. The clusters are created within each class of the dependent variable, based on the values of the explanatory variables. The remaining features in the nonminority class are the medoids of these clusters.

- Random Oversampling—Random oversampling is a technique used to balance imbalanced data by duplicating randomly selected features in the minority classes until all classes have an equal number of features.

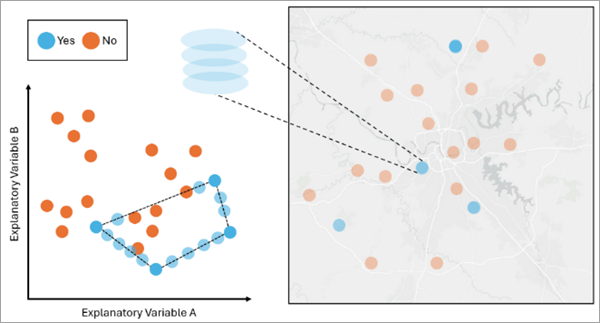

The features in blue are in the minority class, and the features in orange are in the nonminority class. If we apply Random Oversampling to the data, the tool will randomly select and duplicate blue features until the number of blue features matches the number of orange features. The variables and the geometry of a duplicated feature are the same as those of the original feature.

- SMOTE Oversampling—SMOTE (Synthetic Minority Over-sampling Technique) oversampling is a technique used to balance imbalanced data by generating synthetic features in the minority class until all classes have an equal number of features. A feature in a minority class is chosen, a nearby feature of the same class in the attribute space is selected, and new attribute values are generated by interpolating between those two features. The geometry of the new synthetic feature is that of the originally selected feature.

The features in blue are in the minority class, and the features in orange are in the nonminority class. If we apply SMOTE Oversampling to the data, the tool will generate synthetic features by interpolating the values between a randomly selected minority-class feature and a nearby feature of the same class in the attribute space. The geometry of a synthetic feature is the same as that of the originally selected feature, while its variables are interpolated between the two selected features.
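Random undersampling and random oversampling can be sketched in a few lines of Python. The functions below are hypothetical helpers that return feature indices instead of writing a feature class. They use hypothetical counts of 12 orange and 4 blue features. The tool's own sampling may differ in detail.

```python
import random
from collections import Counter, defaultdict

def indices_by_class(labels):
    """Map each class label to the indices of its features."""
    groups = defaultdict(list)
    for i, label in enumerate(labels):
        groups[label].append(i)
    return groups

def random_undersample(labels, seed=None):
    """Randomly keep as many features from each class as the smallest class has."""
    rng = random.Random(seed)
    groups = indices_by_class(labels)
    n_min = min(len(members) for members in groups.values())
    kept = []
    for members in groups.values():
        kept += rng.sample(members, n_min)
    return sorted(kept)

def random_oversample(labels, seed=None):
    """Keep every feature and duplicate random members of the smaller classes
    until every class has as many features as the largest class."""
    rng = random.Random(seed)
    groups = indices_by_class(labels)
    n_max = max(len(members) for members in groups.values())
    result = []
    for members in groups.values():
        result += members + [rng.choice(members) for _ in range(n_max - len(members))]
    return sorted(result)

# Hypothetical labels: 12 orange (nonminority) and 4 blue (minority) features
labels = ["orange"] * 12 + ["blue"] * 4
under = random_undersample(labels, seed=0)
over = random_oversample(labels, seed=0)
print(Counter(labels[i] for i in under))  # Counter({'orange': 4, 'blue': 4})
print(Counter(labels[i] for i in over))   # Counter({'orange': 12, 'blue': 12})
```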
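Tomek’s link undersampling can be sketched as follows. The sketch assumes two classes, Euclidean distance in attribute space, and a brute-force nearest-neighbor search. The helper `tomek_undersample` and the one-variable example data are hypothetical. The example shows that removing the orange member of each link cleans up the class boundary without necessarily equalizing the class counts.

```python
import math
from collections import Counter

def tomek_undersample(X, labels):
    """Return the indices kept after removing the nonminority member of every
    Tomek's link. X holds attribute vectors; two classes are assumed."""
    n = len(X)
    minority = min(Counter(labels).items(), key=lambda item: item[1])[0]
    # Nearest neighbor of each feature in attribute space (brute force, O(n^2)).
    nearest = [min((j for j in range(n) if j != i), key=lambda j: math.dist(X[i], X[j]))
               for i in range(n)]
    drop = set()
    for i, j in enumerate(nearest):
        # A Tomek's link: mutual nearest neighbors that belong to different classes.
        if nearest[j] == i and labels[i] != labels[j]:
            drop.add(j if labels[i] == minority else i)
    return [i for i in range(n) if i not in drop]

# Hypothetical one-variable data: five orange (nonminority) and two blue (minority)
X = [[0.0], [1.0], [2.0], [10.0], [20.0], [2.3], [9.0]]
labels = ["orange"] * 5 + ["blue"] * 2
print(tomek_undersample(X, labels))  # [0, 1, 4, 5, 6]
```

Two orange features (at 2.0 and 10.0) form links with blue features and are removed. Three orange features remain, so the classes are still unequal.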
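Spatial thinning can be sketched as a single greedy pass. The sketch assumes that features are visited in random order and that a feature is kept only if it is at least the minimum distance from every feature already kept in the same group, or from every kept feature when no categorical variable is used. The visiting order and the `spatial_thin` helper are assumptions for illustration. The tool may choose which features to keep differently.

```python
import math
import random

def spatial_thin(points, min_distance, groups=None, seed=None):
    """Greedy spatial thinning. Features are visited in random order; a feature is
    kept only if it is at least min_distance from every kept feature in its group.
    With groups=None, all features form one group."""
    rng = random.Random(seed)
    if groups is None:
        groups = [None] * len(points)
    order = list(range(len(points)))
    rng.shuffle(order)
    kept = []
    for i in order:
        if all(groups[k] != groups[i] or math.dist(points[i], points[k]) >= min_distance
               for k in kept):
            kept.append(i)
    return sorted(kept)

# Hypothetical survey points: three clustered near a road, two far away
pts = [(0.0, 0.0), (0.2, 0.1), (0.1, 0.3), (5.0, 5.0), (9.0, 1.0)]
print(len(spatial_thin(pts, 1.0, seed=0)))  # 3: the roadside cluster collapses to one point

# With a categorical variable to predict, each class is thinned independently
classes = ["a", "b", "a", "a", "b"]
print(len(spatial_thin(pts, 1.0, groups=classes, seed=0)))  # 4
```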
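K-medoids undersampling can be sketched with a simple alternating k-medoids algorithm. Each feature is assigned to its nearest medoid, and then each medoid moves to the cluster member with the smallest total distance to the rest of its cluster. This is a minimal sketch. The alternating variant, the random initialization, Euclidean distance, and the helper names are all assumptions, and like any k-medoids variant it can settle in a local optimum. It also assumes that features have distinct attribute values.

```python
import math
import random
from collections import Counter, defaultdict

def k_medoids(X, idx, k, seed=None, max_iter=100):
    """Alternating k-medoids over the features in idx; returns k existing features.
    Assumes the features have distinct attribute values."""
    rng = random.Random(seed)
    medoids = rng.sample(idx, k)
    for _ in range(max_iter):
        # Assign every feature to its nearest medoid in attribute space.
        clusters = defaultdict(list)
        for i in idx:
            clusters[min(medoids, key=lambda m: math.dist(X[i], X[m]))].append(i)
        # Move each medoid to the member with the smallest total distance to its cluster.
        new = [min(members, key=lambda c: sum(math.dist(X[c], X[o]) for o in members))
               for members in clusters.values()]
        if sorted(new) == sorted(medoids):
            break
        medoids = new
    return medoids

def k_medoids_undersample(X, labels, seed=None):
    """Keep every minority feature and k medoids from each larger class,
    where k is the size of the minority class."""
    groups = defaultdict(list)
    for i, label in enumerate(labels):
        groups[label].append(i)
    k = min(len(members) for members in groups.values())
    kept = []
    for members in groups.values():
        kept += members if len(members) == k else k_medoids(X, members, k, seed)
    return sorted(kept)

# Hypothetical attribute values: 12 orange features in four groups, 4 blue features
orange = [(0, 0), (0, 1), (1, 0), (10, 0), (10, 1), (11, 0),
          (0, 10), (0, 11), (1, 10), (10, 10), (10, 11), (11, 10)]
blue = [(5, 5), (5, 6), (6, 5), (6, 6)]
X = orange + blue
labels = ["orange"] * len(orange) + ["blue"] * len(blue)
kept = k_medoids_undersample(X, labels, seed=0)
print(Counter(labels[i] for i in kept))  # Counter({'orange': 4, 'blue': 4})
```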
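SMOTE can be sketched following Chawla et al. (2002). The procedure picks a minority feature, picks one of its k nearest minority neighbors in attribute space, and interpolates at a random point between the two. The synthetic feature reuses the geometry of the first feature. The neighbor count `k=5` (the value used in the SMOTE paper), the `smote` helper, and the data are assumptions. The tool's neighbor count is not stated here.

```python
import math
import random

def smote(X, geometry, n_new, k=5, seed=None):
    """Create n_new synthetic minority features.
    X: attribute vectors of the minority class; geometry: matching geometries."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(X))
        # The k nearest minority-class neighbors of feature i in attribute space.
        neighbors = sorted((j for j in range(len(X)) if j != i),
                           key=lambda j: math.dist(X[i], X[j]))[:k]
        j = rng.choice(neighbors)
        gap = rng.random()  # uniform in [0, 1)
        attributes = [a + gap * (b - a) for a, b in zip(X[i], X[j])]
        # The synthetic feature reuses the geometry of the originally selected feature.
        synthetic.append((attributes, geometry[i]))
    return synthetic

# Hypothetical minority-class data: two explanatory variables per feature
X_blue = [[1.0, 10.0], [2.0, 12.0], [1.5, 11.0], [3.0, 9.0]]
geometry_blue = [(100, 200), (110, 205), (120, 190), (130, 210)]
new_features = smote(X_blue, geometry_blue, n_new=8, seed=0)
```

With 12 nonminority features, generating 8 synthetic features brings the 4 blue features up to 12. Every synthetic attribute value lies between the values of two real minority features.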

Outputs

The tool will generate geoprocessing messages and two outputs: an output feature class and, optionally, an output test subset features feature class.

Geoprocessing messages

You can access the messages by hovering over the progress bar, clicking the pop-out button, or expanding the messages section in the Geoprocessing pane. You can also access the messages for a previous run of this tool in the geoprocessing history. The messages include a Dependent Variable Range Diagnostics table and an Explanatory Variable Range Diagnostics table.

The Dependent Variable Range Diagnostics table lists the variable that will be predicted, while the Explanatory Variable Range Diagnostics table lists all the specified explanatory variables. If a variable is continuous, the table summarizes the minimum and maximum values in the field. If a variable is categorical, the table lists each category and the percentage of features in that category. If the Splitting Type parameter is set to Random Split or Spatial Split, the tables will also include the same diagnostics for the test subset features.
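The summaries in these tables can be illustrated with a small Python function. The function `range_diagnostics` is hypothetical. As a simplifying assumption, it treats a field as continuous when every value is a number and as categorical otherwise. Applying the same function to the test subset fields produces the matching test diagnostics.

```python
from collections import Counter

def range_diagnostics(values):
    """Min/max for a continuous field, or the percentage of features in each
    category for a categorical field."""
    if all(isinstance(v, (int, float)) for v in values):
        return {"min": min(values), "max": max(values)}
    counts = Counter(values)
    return {category: 100.0 * n / len(values) for category, n in counts.items()}

# Hypothetical training-subset fields
train_elevation = [312.5, 87.0, 450.2, 199.9]
train_land_use = ["forest", "urban", "forest", "water"]
print(range_diagnostics(train_elevation))  # {'min': 87.0, 'max': 450.2}
print(range_diagnostics(train_land_use))   # {'forest': 50.0, 'urban': 25.0, 'water': 25.0}
```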

Additional outputs

This tool also produces an output feature class and an optional output test subset features feature class.

Output features

The output features can be used as training features in the Forest-based and Boosted Classification and Regression, Generalized Linear Regression, and Presence-only Prediction tools, as well as in other models. The fields in this feature class include all the explanatory variables, all the explanatory distance features, and the variable to predict. If the Append All Fields from the Input Features parameter is checked, the output features will include all the fields from the input features. If the Encode Categorical Explanatory Variables parameter is checked, a field will be created for each category in the categorical explanatory variable. Each feature will have a value of 0 or 1 in these fields: 1 indicates that the feature is in that category, and 0 indicates that it is in a different category. If the Splitting Type parameter is set to No Split, the output features will include all the features from the input features.
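Encoding a categorical explanatory variable can be sketched as follows. The field naming scheme (`LANDUSE_forest` and so on) and the `encode_categories` helper are hypothetical, and the tool's output field names may differ. The last lines show that the encoded fields always sum to 1 for each feature. This is the perfect multicollinearity with a model intercept that linear models avoid by omitting one of the fields.

```python
def encode_categories(values, prefix):
    """One 0/1 field per category: 1 if the feature is in that category, else 0."""
    return {f"{prefix}_{category}": [1 if v == category else 0 for v in values]
            for category in sorted(set(values))}

land_use = ["forest", "urban", "forest", "water"]
encoded = encode_categories(land_use, "LANDUSE")
for name, field in encoded.items():
    print(name, field)
# LANDUSE_forest [1, 0, 1, 0]
# LANDUSE_urban [0, 1, 0, 0]
# LANDUSE_water [0, 0, 0, 1]

# Every feature has exactly one 1, so the encoded fields always sum to 1,
# the same constant an intercept term contributes to a linear model.
row_sums = [sum(field[i] for field in encoded.values()) for i in range(len(land_use))]
print(row_sums)  # [1, 1, 1, 1]
```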

Output test subset features

The output test subset features are a subset of the input features that can be used as test features. For example, you can use the output test subset features to evaluate model accuracy in the Predict Using Spatial Statistics Model File tool.

A percentage of the input features is reserved for the output test subset features. Specify the percentage with the Percent of Data as Test Subset parameter. The fields in this feature class include all the explanatory variables, all the explanatory distance features, and the variable to predict. If the Encode Categorical Explanatory Variables parameter is checked, a field will be created for each category. Each feature will have a value of 0 or 1 in these fields: 1 indicates that the feature is in that category, and 0 indicates that it is in a different category.

This feature class is only created if the Splitting Type parameter is set to Random Split or Spatial Split.

Best practices

The following are best practices when using this tool:

- When using categorical variables as the Variable to Predict or Explanatory Variables parameter values, ensure that every categorical level appears in the training data. The models need to see and learn from every possible category before predicting with new data. If the testing or validation data contains a category of an explanatory variable that is not in the training data, the model will fail. The tool will fail if it cannot get all categorical levels into the training data after 30 attempts.

- Once data is balanced, it should not be used as validation data or test data, because it no longer represents the distribution of data that will be measured in the real world. Oversampled data should never be used as validation data to evaluate model performance. Undersampled data can be used, but this is not advised. For this reason, the training and test datasets are split before balancing, and only the training set is balanced.

- When encoding categorical variables, binary variables (0s and 1s) will be created for each category and added to the attribute tables of the training and testing output features. For each category, 1 indicates the feature is in that category and 0 indicates it is in a different category. When using a linear model such as generalized linear regression, you must omit at least one of these binary variables from the explanatory variables to avoid perfect multicollinearity.

- Once a final model has been selected (for example, the model type, parameters, and variables are finalized), you may want to retrain it using the full dataset. If you originally split your data into training and test subsets, you can recombine these datasets, or run the Prepare Data for Prediction tool again with the Splitting Type parameter set to No Split and then rerun the selected final model. The final model file from this run, and any predictions made with it, would use the full extent of the available data for training. This step is not required, but many analysts choose to do it.

- When extracting data from rasters, the value extracted to a point may not exactly match the value of the underlying raster cell. This is because bilinear interpolation is applied when extracting numerical values from rasters to points.
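The retry behavior for categorical levels can be sketched as repeatedly drawing random splits until every level is present in the training subset, giving up after 30 attempts. Only the attempt limit comes from the text. The redrawing strategy, the check on a single field, and the `split_keeping_all_levels` helper are assumptions.

```python
import random

def split_keeping_all_levels(categories, test_fraction, max_attempts=30, seed=None):
    """Redraw a random split until every category level is in the training subset."""
    rng = random.Random(seed)
    n = len(categories)
    n_test = round(n * test_fraction)
    levels = set(categories)
    for attempt in range(1, max_attempts + 1):
        test = set(rng.sample(range(n), n_test))
        train = [i for i in range(n) if i not in test]
        if {categories[i] for i in train} == levels:
            return train, sorted(test), attempt
    raise RuntimeError(f"Could not place every category in the training data "
                       f"after {max_attempts} attempts")

# "wetland" appears only twice, so some random splits leave it out of training.
land_use = ["forest"] * 6 + ["urban"] * 2 + ["wetland"] * 2
train, test, attempts = split_keeping_all_levels(land_use, 0.3, seed=7)
print(sorted({land_use[i] for i in train}), "after", attempts, "attempt(s)")
```

A dataset in which a level appears only once and the training subset is too small to hold every level would exhaust all 30 attempts and raise the error.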
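Bilinear interpolation can be sketched in Python for a point given in cell coordinates, where whole-number coordinates fall exactly on cell centers. Converting map coordinates to these cell coordinates (using the raster origin and cell size) is omitted, and the `bilinear` helper is hypothetical. The example shows an extracted value of 12.5 for a point that falls inside a cell whose value is 10.

```python
def bilinear(raster, x, y):
    """Bilinearly interpolate raster at (x, y) in cell coordinates, where
    raster[row][col] is the value at the center of the cell at that column and row.
    Assumes 0 <= x <= last column and 0 <= y <= last row."""
    col0, row0 = int(x), int(y)
    # Clamp the second neighbor so points on the last row or column stay in bounds.
    col1 = min(col0 + 1, len(raster[0]) - 1)
    row1 = min(row0 + 1, len(raster) - 1)
    dx, dy = x - col0, y - row0
    # Interpolate along x on the two bracketing rows, then along y between them.
    upper = raster[row0][col0] * (1 - dx) + raster[row0][col1] * dx
    lower = raster[row1][col0] * (1 - dx) + raster[row1][col1] * dx
    return upper * (1 - dy) + lower * dy

cells = [[10, 20],
         [30, 40]]
print(bilinear(cells, 0.25, 0.0))  # 12.5, although the point lies in the cell whose value is 10
print(bilinear(cells, 0.5, 0.5))   # 25.0, the mean of the four surrounding cell centers
```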

References

The following resources were used to implement the tool:

- Chawla, N., K. Bowyer, L. Hall, and W. P. Kegelmeyer. 2002. “SMOTE: Synthetic Minority Over-sampling Technique.” Journal of Artificial Intelligence Research 16: 321-357. https://doi.org/10.1613/jair.953.

- Tomek, I. 1976. “Two Modifications of CNN.” IEEE Transactions on Systems, Man, and Cybernetics SMC-6 (11): 769-772. https://doi.org/10.1109/TSMC.1976.4309452.

- Lin, W.-C., C.-F. Tsai, Y.-H. Hu, and J.-S. Jhang. 2017. “Clustering-based undersampling in class-imbalanced data.” Information Sciences 409: 17-26. https://doi.org/10.1016/j.ins.2017.05.008.