The Spatial Statistics toolbox provides effective tools for quantifying spatial patterns. Using the Hot Spot Analysis tool, for example, you can ask questions like these:

- Are there places in the United States where people are persistently dying young?

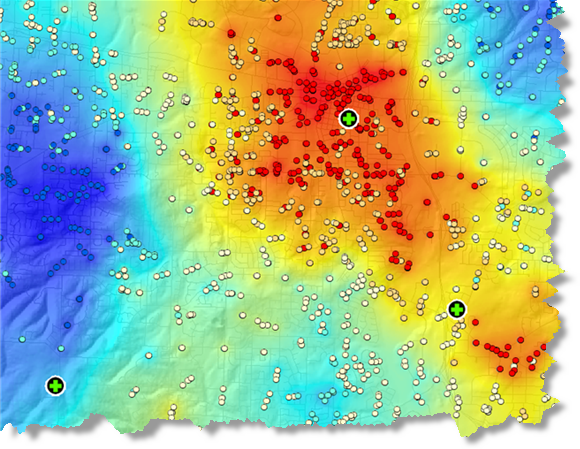

- Where are the hot spots for crime, 911 emergency calls (see graphic below), or fires?

- Where do we find a higher than expected proportion of traffic accidents in a city?

Each of the questions above asks "where?" The next logical question for the types of analyses above involves "why?"

- Why are there places in the United States where people persistently die young? What might be causing this?

- Can we model the characteristics of places that experience a lot of crime, 911 calls, or fire events to help reduce these incidents?

- What are the factors contributing to higher than expected traffic accidents? Are there policy implications or mitigating actions that might reduce traffic accidents across the city and/or in particular high accident areas?

Tools in the Modeling Spatial Relationships toolset help you answer this second set of "why" questions. These tools include Ordinary Least Squares (OLS) regression and Geographically Weighted Regression (GWR).

Spatial relationships

Regression analysis allows you to model, examine, and explore spatial relationships and can help explain the factors behind observed spatial patterns. You may want to understand why people are persistently dying young in certain regions of the country or what factors contribute to higher than expected rates of diabetes. By modeling spatial relationships, however, regression analysis can also be used for prediction. Modeling the factors that contribute to college graduation rates, for example, enables you to make predictions about upcoming workforce skills and resources. You might also use regression to predict rainfall or air quality in cases where interpolation is insufficient due to a scarcity of monitoring stations (for example, rain gauges are often lacking along mountain ridges and in valleys).

OLS is the best known of all regression techniques. It is also the proper starting point for all spatial regression analyses. It provides a global model of the variable or process you are trying to understand or predict (early death/rainfall); it creates a single regression equation to represent that process. Geographically weighted regression (GWR) is one of several spatial regression techniques, increasingly used in geography and other disciplines. GWR provides a local model of the variable or process you are trying to understand/predict by fitting a regression equation to every feature in the dataset. When used properly, these methods provide powerful and reliable statistics for examining and estimating linear relationships.

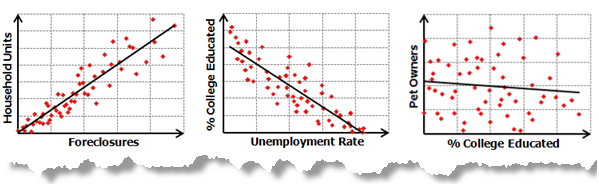

Linear relationships are either positive or negative. If you find that the number of search and rescue events increases when daytime temperatures rise, the relationship is said to be positive; there is a positive correlation. Another way to express this positive relationship is to say that search and rescue events decrease as daytime temperatures decrease. Conversely, if you find that the number of crimes goes down as the number of police officers patrolling an area goes up, the relationship is said to be negative. You can also express this negative relationship by stating that the number of crimes increases as the number of patrolling officers decreases. The graphic below depicts both positive and negative relationships, as well as the case where there is no relationship between two variables:

Correlation analyses, and their associated graphics depicted above, test the strength of the relationship between two variables. Regression analyses, on the other hand, make a stronger claim: they attempt to demonstrate the degree to which one or more variables potentially promote positive or negative change in another variable.
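The positive and negative relationships described above can be quantified with a correlation coefficient. The following is a minimal sketch in plain Python, not part of any ArcGIS tool; the function name `pearson_r` and all data values are invented for illustration.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical observations, invented for illustration only.
daytime_temp = [18, 21, 24, 27, 30, 33]
rescue_events = [2, 3, 5, 6, 8, 9]       # rises as temperature rises
patrol_officers = [2, 4, 6, 8, 10, 12]
crimes = [40, 35, 31, 24, 20, 15]        # falls as patrols increase

r_positive = pearson_r(daytime_temp, rescue_events)
r_negative = pearson_r(patrol_officers, crimes)
print(f"temperature vs. rescue events: r = {r_positive:+.2f}")
print(f"officers vs. crimes:           r = {r_negative:+.2f}")
```

A value near +1 indicates a strong positive relationship, a value near -1 a strong negative one, and a value near 0 little or no linear relationship.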

Regression analysis applications

Regression analysis can be used for a large variety of applications:

- Modeling high school retention rates to better understand the factors that help keep kids in school.

- Modeling traffic accidents as a function of speed, road conditions, weather, and so forth, to inform policy aimed at decreasing accidents.

- Modeling property loss from fire as a function of variables such as degree of fire department involvement, response time, or property values. If you find that response time is the key factor, you might need to build more fire stations. If you find that involvement is the key factor, you may need to increase equipment and the number of officers dispatched.

There are three primary reasons you might want to use regression analysis:

- To model some phenomenon to better understand it and possibly use that understanding to effect policy or make decisions about appropriate actions to take. The basic objective is to measure the extent to which changes in one or more variables jointly affect changes in another. Example: Understand the key characteristics of the habitat for some particular endangered species of bird (perhaps precipitation, food sources, vegetation, predators) to assist in designing legislation aimed at protecting that species.

- To model some phenomenon to predict values at other places or other times. The basic objective is to build a prediction model that is both consistent and accurate. Example: Given population growth projections and typical weather conditions, what will the demand for electricity be next year?

- To explore hypotheses. Suppose you are modeling residential crime to better understand it and, ideally, to implement policy that might prevent it. As you begin your analysis, you probably have questions or hypotheses that you want to examine:

    - "Broken window theory" indicates that defacement of public property (graffiti, damaged structures, and so on) invites other crimes. Will there be a positive relationship between vandalism incidents and residential burglary?

    - Is there a relationship between illegal drug use and burglary (might drug addicts steal to support their habits)?

    - Are burglars predatory? Might there be more incidents in residential neighborhoods with higher proportions of elderly or female-headed households?

    - Are persons at greater risk for burglary if they live in a rich or a poor neighborhood?

Regression analysis terms and concepts

It is impossible to discuss regression analysis without first becoming familiar with a few terms and basic concepts specific to regression statistics:

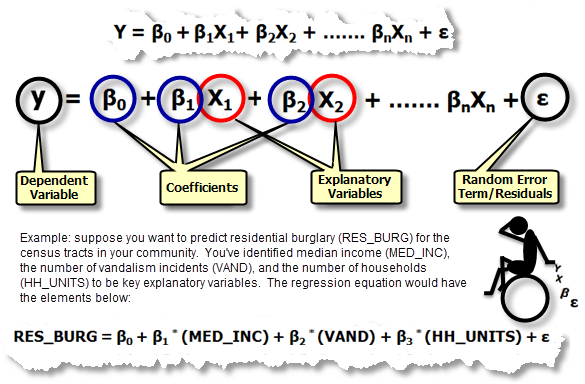

Regression equation: This is the mathematical formula applied to the explanatory variables to best predict the dependent variable you are trying to model. Unfortunately for those in the geosciences who think of x and y as coordinates, the notation in regression equations for the dependent variable is always y and for the independent or explanatory variables is always X. Each independent variable is associated with a regression coefficient describing the strength and the sign of that variable's relationship to the dependent variable. A regression equation might look like this (y is the dependent variable, the Xs are the explanatory variables, and the βs are regression coefficients; each of these components of the regression equation are explained further below):

- Dependent variable (y): This is the variable representing the process you are trying to predict or understand (residential burglary, foreclosure, rainfall). In the regression equation, it appears on the left side of the equal sign. While you can use regression to predict the dependent variable, you always start with a set of known y-values and use these to build (or to calibrate) the regression model. The known y-values are often referred to as observed values.

- Independent/Explanatory variables (X): These are the variables used to model or to predict the dependent variable values. In the regression equation, they appear on the right side of the equal sign and are often referred to as explanatory variables. The dependent variable is a function of the explanatory variables. If you are interested in predicting annual purchases for a proposed store, you might include in your model explanatory variables representing the number of potential customers, distance to competition, store visibility, and local spending patterns, for example.

- Regression coefficients (β): Coefficients are computed by the regression tool. They are values, one for each explanatory variable, that represent the strength and the type of relationship the explanatory variable has to the dependent variable. Suppose you are modeling fire frequency as a function of solar radiation, vegetation, precipitation, and aspect. You might expect a positive relationship between fire frequency and solar radiation (in other words, the more sun, the more frequent the fire incidents). When the relationship is positive, the sign for the associated coefficient is also positive. You might expect a negative relationship between fire frequency and precipitation (in other words, places with more rain have fewer fires). Coefficients for negative relationships have negative signs. When the relationship is a strong one, the coefficient is relatively large (relative to the units of the explanatory variable it is associated with). Weak relationships are associated with coefficients near zero. The coefficient β0 is the regression intercept; it represents the expected value for the dependent variable if all the independent (explanatory) variables are zero.
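Putting the components above together, a regression equation with n explanatory variables is commonly written in the following form. This is the standard linear-model notation, offered as a sketch rather than as a formula recovered from this page; ε is the random error term (the residual).

```latex
y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n + \varepsilon
```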

P-values: Most regression methods perform a statistical test to compute a probability, called a p-value, for the coefficients associated with each independent variable. The null hypothesis for this statistical test states that a coefficient is not significantly different from zero (in other words, for all intents and purposes, the coefficient is zero and the associated explanatory variable is not helping your model). Small p-values reflect small probabilities and suggest that the coefficient is, indeed, important to your model with a value that is significantly different from zero (in other words, a small p-value indicates the coefficient is not zero). You would say that a coefficient with a p-value of 0.01, for example, is statistically significant at the 99 percent confidence level; the associated variable is an effective predictor. Variables with coefficients near zero do not help predict or model the dependent variable; they are almost always removed from the regression equation, unless there are strong theoretical reasons to keep them.

R2/R-squared: Multiple R-squared and adjusted R-squared are both statistics derived from the regression equation to quantify model performance. The value of R-squared ranges from 0 to 1 (0 to 100 percent). If your model fits the observed dependent variable values perfectly, R-squared is 1.0 (and you, no doubt, have made an error; perhaps you've used a form of y to predict y). More likely, you will see R-squared values like 0.49, for example, which you can interpret by saying, "This model explains 49 percent of the variation in the dependent variable". To understand what the R-squared value is getting at, create a bar graph showing both the estimated and observed y-values sorted by the estimated values. Notice how much overlap there is. This graphic provides a visual representation of how well the model's predicted values explain the variation in the observed dependent variable values. The adjusted R-squared value is always a bit lower than the multiple R-squared value because it reflects model complexity (the number of variables) as it relates to the data. Consequently, the adjusted R-squared value is a more accurate measure of model performance.
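The two statistics can be sketched with their usual textbook formulas. The example below is illustrative only: the function names and the observed and predicted values are invented, and the ArcGIS tools may report these values with different rounding or conventions.

```python
def r_squared(observed, predicted):
    """Share of the variation in the observed values explained by the predictions."""
    mean_y = sum(observed) / len(observed)
    ss_residual = sum((y - p) ** 2 for y, p in zip(observed, predicted))
    ss_total = sum((y - mean_y) ** 2 for y in observed)
    return 1.0 - ss_residual / ss_total

def adjusted_r_squared(r2, n_obs, n_explanatory):
    """Penalize R-squared for the number of explanatory variables in the model."""
    return 1.0 - (1.0 - r2) * (n_obs - 1) / (n_obs - n_explanatory - 1)

# Hypothetical observed values and model predictions, invented for illustration.
observed = [10.0, 12.0, 15.0, 19.0, 24.0, 30.0]
predicted = [9.0, 13.0, 16.0, 18.0, 25.0, 29.0]

r2 = r_squared(observed, predicted)
# Assume the hypothetical model used two explanatory variables.
adj_r2 = adjusted_r_squared(r2, n_obs=len(observed), n_explanatory=2)
print(f"R-squared:          {r2:.3f}")
print(f"Adjusted R-squared: {adj_r2:.3f}")
```

Because the adjustment depends on the number of explanatory variables relative to the number of observations, adding a variable that contributes little can lower the adjusted value even though the multiple R-squared value rises slightly.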

Residuals: These are the unexplained portion of the dependent variable, represented in the regression equation as the random error term ε. Known values for the dependent variable are used to build and to calibrate the regression model. Using known values for the dependent variable (y) and known values for all of the explanatory variables (the Xs), the regression tool constructs an equation that will predict those known y-values as well as possible. The predicted values will rarely match the observed values exactly, however. The differences between the observed y-values and the predicted y-values are called the residuals. The magnitude of the residuals from a regression equation is one measure of model fit. Large residuals indicate poor model fit.
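To make the idea of residuals concrete, the sketch below fits an ordinary least squares line with a single explanatory variable and subtracts the predicted values from the observed ones. It is a simplified, plain-Python illustration (real models usually have several explanatory variables); the function name `fit_simple_ols` and the data are hypothetical.

```python
def fit_simple_ols(xs, ys):
    """Least-squares intercept and slope for one explanatory variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Hypothetical data: more precipitation, fewer fires (a negative relationship).
precipitation = [10, 20, 30, 40, 50]
fire_frequency = [9, 8, 6, 5, 2]

intercept, slope = fit_simple_ols(precipitation, fire_frequency)
predicted = [intercept + slope * x for x in precipitation]
# Residual = observed value minus predicted value.
residuals = [y - p for y, p in zip(fire_frequency, predicted)]

print(f"intercept = {intercept:.2f}, slope = {slope:.2f}")
print("residuals:", [round(r, 2) for r in residuals])
```

The negative slope mirrors the negative coefficient expected for precipitation, and the residuals of a least-squares fit that includes an intercept sum to zero.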

Building a regression model is an iterative process that involves finding effective independent variables to explain the dependent variable you are trying to model or understand, running the regression tool to determine which variables are effective predictors, then repeatedly removing and/or adding variables until you find the best regression model possible. While the model building process is often exploratory, it should never be a "fishing expedition". You should identify candidate explanatory variables by consulting theory, experts in the field, and common sense. You should be able to state and justify the expected relationship between each candidate explanatory variable and the dependent variable prior to analysis, and should question models where these relationships do not match.

Note:

If you've not used regression analysis before, this would be a very good time to download the Regression Analysis Tutorial and work through steps 1–5.

Regression analysis issues

OLS regression is a straightforward method, has well-developed theory behind it, and has a number of effective diagnostics to assist with interpretation and troubleshooting. OLS is only effective and reliable, however, if your data and regression model satisfy all the assumptions inherently required by this method (see the table below). Spatial data often violates the assumptions and requirements of OLS regression, so it is important to use regression tools in conjunction with appropriate diagnostic tools that can assess whether regression is an appropriate method for your analysis, given the structure of the data and the model being implemented.

How regression models go bad

A serious violation for many regression models is misspecification. A misspecified model is one that is not complete—it is missing important explanatory variables, so it does not adequately represent what you are trying to model or trying to predict (the dependent variable, y). In other words, the regression model is not telling the whole story. Misspecification is evident whenever you see statistically significant spatial autocorrelation in your regression residuals or, said another way, whenever you notice that the over- and underpredictions (residuals) from your model tend to cluster spatially so that the overpredictions cluster in some portions of the study area and the underpredictions cluster in others. Mapping regression residuals or the coefficients associated with Geographically Weighted Regression analysis will often provide clues about what you've missed. Running Hot Spot Analysis on regression residuals might also help reveal different spatial regimes that can be modeled in OLS with regional variables or can be remedied using the geographically weighted regression method. Suppose when you map your regression residuals you see that the model is always overpredicting in the mountain areas and underpredicting in the valleys—you will likely conclude that your model is missing an elevation variable. There will be times, however, when the missing variables are too complex to model or impossible to quantify or too difficult to measure. In these cases, you may be able to move to GWR or to another spatial regression method to get a well-specified model.

The following table lists common problems with regression models and the tools available in ArcGIS to help address them:

Common regression problems, consequences, and solutions

| Problem | Consequence | Solution |
| --- | --- | --- |
| Omitted explanatory variables (misspecification). | When key explanatory variables are missing from a regression model, coefficients and their associated p-values cannot be trusted. | Map and examine OLS residuals and GWR coefficients or run Hot Spot Analysis on OLS regression residuals to see if this provides clues about possible missing variables. |
| Nonlinear relationships. | OLS and GWR are both linear methods. If the relationship between any of the explanatory variables and the dependent variable is nonlinear, the resultant model will perform poorly. | Create a scatter plot matrix graph to elucidate the relationships among all variables in the model. Pay careful attention to relationships involving the dependent variable. Curvilinearity can often be remedied by transforming the variables. Alternatively, use a nonlinear regression method. |
| Data outliers. | Influential outliers can pull modeled regression relationships away from their true best fit, biasing regression coefficients. | Create a scatter plot matrix and other graphs (histograms) to examine extreme data values. Correct or remove outliers if they represent errors. When outliers are valid values, they should not be removed. Run the regression with and without the outliers to see how much they are affecting your results. |
| Nonstationarity. You might find that an income variable, for example, has strong explanatory power in region A but is insignificant or even switches signs in region B. | If relationships between your dependent and explanatory variables are inconsistent across your study area, computed standard errors will be artificially inflated. | The OLS tool in ArcGIS automatically tests for problems associated with nonstationarity (regional variation) and computes robust standard error values. When the probability associated with the Koenker test is small (< 0.05, for example), you have statistically significant regional variation and should consult the robust probabilities to determine if an explanatory variable is statistically significant or not. Often you will improve model results by using the Geographically Weighted Regression tool. |
| Multicollinearity. One or a combination of explanatory variables is redundant. | Multicollinearity leads to an overcounting type of bias and an unstable, unreliable model. | The OLS tool in ArcGIS automatically checks for redundancy. Each explanatory variable is given a computed VIF value. When this value is large (> 7.5, for example), redundancy is a problem and the offending variables should be removed from the model or modified by creating an interaction variable or increasing the sample size. |
| Inconsistent variance in residuals. It may be that the model predicts well for small values of the dependent variable but becomes unreliable for large values. | When the model predicts poorly for some range of values, results will be biased. | The OLS tool in ArcGIS automatically tests for inconsistent residual variance (called heteroscedasticity) and computes standard errors that are robust to this problem. When the probability associated with the Koenker test is small (< 0.05, for example), you should consult the robust probabilities to determine if an explanatory variable is statistically significant or not. |
| Spatially autocorrelated residuals. | When there is spatial clustering of the under- and overpredictions coming out of the model, it introduces an overcounting type of bias and renders the model unreliable. | Run the Spatial Autocorrelation tool on the residuals to ensure they do not exhibit statistically significant spatial clustering. Statistically significant spatial autocorrelation is almost always a symptom of misspecification (a key variable is missing from the model). |
| Normal distribution bias. | When the regression model residuals are not normally distributed with a mean of zero, the p-values associated with the coefficients are unreliable. | The OLS tool in ArcGIS automatically tests whether the residuals are normally distributed. When the p-value for the Jarque-Bera statistic is small (< 0.05, for example), your model is likely misspecified (a key variable is missing from the model) or some of the relationships you are modeling are nonlinear. Examine the output residual map and perhaps GWR coefficient maps to see if this exercise reveals the key variables missing from the analysis. View scatter plot matrix graphs and look for nonlinear relationships. |

It is important to test for each of the problems listed above. Results can be 100 percent wrong (180 degrees different) if problems above are ignored.
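As one example of such a test, the Jarque-Bera check for normally distributed residuals can be sketched from its textbook definition, which combines the skewness and kurtosis of the residuals. This is a minimal illustration, not the ArcGIS implementation: the function name and residual values are invented, and the p-value uses the large-sample chi-square approximation with two degrees of freedom, which is only rough for small samples.

```python
from math import exp

def jarque_bera(residuals):
    """Return the Jarque-Bera statistic and its asymptotic p-value."""
    n = len(residuals)
    mean = sum(residuals) / n
    m2 = sum((r - mean) ** 2 for r in residuals) / n
    m3 = sum((r - mean) ** 3 for r in residuals) / n
    m4 = sum((r - mean) ** 4 for r in residuals) / n
    skewness = m3 / m2 ** 1.5
    kurtosis = m4 / m2 ** 2
    jb = n / 6.0 * (skewness ** 2 + (kurtosis - 3.0) ** 2 / 4.0)
    # For a chi-square distribution with 2 degrees of freedom,
    # the upper-tail probability is exactly exp(-x / 2).
    p_value = exp(-jb / 2.0)
    return jb, p_value

# Hypothetical residuals, invented for illustration only.
symmetric = [-2, -1, -1, 0, 0, 0, 1, 1, 2]
skewed = [-1] * 9 + [0] * 6 + [1, 2, 4, 9, 15]

jb_sym, p_sym = jarque_bera(symmetric)
jb_skew, p_skew = jarque_bera(skewed)
print(f"symmetric residuals: JB = {jb_sym:.2f}, p = {p_sym:.3f}")
print(f"skewed residuals:    JB = {jb_skew:.2f}, p = {p_skew:.5f}")
```

A small p-value (< 0.05, for example) for the skewed set signals residuals that depart from a normal distribution, which is the situation the table associates with misspecification or nonlinear relationships.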

Note:

If you've not used regression analysis before, this would be a very good time to download and work through the Regression Analysis Tutorial.

Spatial regression

Spatial data exhibits two properties that make it difficult (but not impossible) to meet the assumptions and requirements of traditional (nonspatial) statistical methods, like OLS regression:

- Geographic features are more often than not spatially autocorrelated; this means that features near each other tend to be more similar than features that are farther away. This creates an overcount type of bias for traditional (nonspatial) regression methods.

- Geography is important, and often the processes most important to what you are modeling are nonstationary; these processes behave differently in different parts of the study area. This characteristic of spatial data can be referred to as regional variation or nonstationarity.

True spatial regression methods were developed to robustly manage these two characteristics of spatial data and even to incorporate these special qualities of spatial data to improve their ability to model data relationships. Some spatial regression methods deal effectively with the first characteristic (spatial autocorrelation), others deal effectively with the second (nonstationarity). At present, no spatial regression methods are effective for both characteristics. For a properly specified GWR model, however, spatial autocorrelation is typically not a problem.

Spatial autocorrelation

There seems to be a big difference between how a traditional statistician views spatial autocorrelation and how a spatial statistician views spatial autocorrelation. The traditional statistician sees it as a bad thing that needs to be removed from the data (through resampling, for example) because spatial autocorrelation violates underlying assumptions of many traditional (nonspatial) statistical methods. For the geographer or GIS analyst, however, spatial autocorrelation is evidence of important underlying spatial processes at work; it is an integral component of the data. Removing space removes data from its spatial context; it is like getting only half the story. The spatial processes and spatial relationships evident in the data are a primary interest and one of the reasons GIS users get so excited about spatial data analysis. To avoid an overcounting type of bias in your model, however, you must identify the full set of explanatory variables that will effectively capture the inherent spatial structure in your dependent variable. If you cannot identify all of these variables, you will very likely see statistically significant spatial autocorrelation in the model residuals. Unfortunately, you cannot trust your regression results until this is remedied. Use the Spatial Autocorrelation tool to test for statistically significant spatial autocorrelation in your regression residuals.
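The statistic most commonly used to measure spatial autocorrelation is Global Moran's I. The sketch below computes only the index itself, in plain Python, for residuals arranged along a line where each feature neighbors the features immediately next to it. The helper names, the neighbor definition, and the residual values are assumptions made for illustration; the Spatial Autocorrelation tool also reports a z-score and p-value, which are not reproduced here.

```python
def morans_i(values, weights):
    """Global Moran's I for values and a square spatial weights matrix."""
    n = len(values)
    mean = sum(values) / n
    deviations = [v - mean for v in values]
    total_weight = sum(sum(row) for row in weights)
    cross_products = sum(
        weights[i][j] * deviations[i] * deviations[j]
        for i in range(n)
        for j in range(n)
    )
    sum_squares = sum(d * d for d in deviations)
    return (n / total_weight) * cross_products / sum_squares

def chain_weights(n):
    """Binary weights: features i and j are neighbors when adjacent in a line."""
    return [[1 if abs(i - j) == 1 else 0 for j in range(n)] for i in range(n)]

# Hypothetical residuals, invented for illustration only.
clustered = [1, 1, 1, -1, -1, -1]      # overpredictions grouped, then underpredictions
alternating = [1, -1, 1, -1, 1, -1]    # neighbors always differ

weights = chain_weights(6)
i_clustered = morans_i(clustered, weights)
i_alternating = morans_i(alternating, weights)
print(f"clustered residuals:   I = {i_clustered:.2f}")
print(f"alternating residuals: I = {i_alternating:.2f}")
```

Positive values indicate that similar residuals sit near each other (clustering), negative values indicate that neighbors tend to differ, and values near zero suggest no spatial pattern.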

There are at least three strategies for dealing with spatial autocorrelation in regression model residuals:

- Resample until the input variables no longer exhibit statistically significant spatial autocorrelation. While this does not ensure the analysis is free of spatial autocorrelation problems, they are far less likely when spatial autocorrelation is removed from the dependent and explanatory variables. This is the traditional statistician's approach to dealing with spatial autocorrelation and is only appropriate if spatial autocorrelation is the result of data redundancy (the sampling scheme is too fine).

- Isolate the spatial and nonspatial components of each input variable using a spatial filtering regression method. Space is removed from each variable, but then it is put back into the regression model as a new variable to account for spatial effects/spatial structure. ArcGIS currently does not provide spatial filtering regression methods.

- Incorporate spatial autocorrelation into the regression model using spatial econometric regression methods. Spatial econometric regression methods will be added to ArcGIS in a future release.

Regional variation

Global models, like OLS regression, create equations that best describe the overall data relationships in a study area. When those relationships are consistent across the study area, the OLS regression equation models those relationships well. When those relationships behave differently in different parts of the study area, however, the regression equation is more of an average of the mix of relationships present, and in the case where those relationships represent two extremes, the global average will not model either extreme well. When your explanatory variables exhibit nonstationary relationships (regional variation), global models tend to fall apart unless robust methods are used to compute regression results. Ideally, you will be able to identify a full set of explanatory variables to capture the regional variation inherent in your dependent variable. If you cannot identify all of these spatial variables, however, you will again notice statistically significant spatial autocorrelation in your model residuals and/or lower than expected R-squared values. Unfortunately, you cannot trust your regression results until this is remedied.
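The averaging effect described above can be illustrated with a small, hypothetical dataset in which the same explanatory variable has a positive relationship with the dependent variable in one region and a negative relationship in another. The sketch uses a single explanatory variable and plain Python; the function name, region labels, and values are invented for illustration.

```python
def fit_simple_ols(xs, ys):
    """Least-squares intercept and slope for one explanatory variable."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

# Hypothetical regions where the relationship switches sign.
region_a_x, region_a_y = [1, 2, 3, 4], [2, 4, 6, 8]   # y rises with x
region_b_x, region_b_y = [1, 2, 3, 4], [8, 6, 4, 2]   # y falls with x

_, slope_a = fit_simple_ols(region_a_x, region_a_y)
_, slope_b = fit_simple_ols(region_b_x, region_b_y)
# A single global model fitted to both regions at once.
_, slope_global = fit_simple_ols(region_a_x + region_b_x, region_a_y + region_b_y)

print(f"region A slope: {slope_a:+.2f}")
print(f"region B slope: {slope_b:+.2f}")
print(f"global slope:   {slope_global:+.2f}")
```

The global slope comes out at zero, suggesting no relationship at all, even though the variable is a strong predictor within each region. This is the kind of situation where a local method such as GWR, or a regional variable, is worth considering.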

There are at least four ways to deal with regional variation in OLS regression models:

- Include a variable in the model that explains the regional variation. If you see that your model is always overpredicting in the north and underpredicting in the south, for example, add a regional variable set to 1 for northern features and set to 0 for southern features.

- Use methods that incorporate regional variation into the regression model such as Geographically Weighted Regression.

- Consult robust regression standard errors and probabilities to determine if variable coefficients are statistically significant. Geographically weighted regression is still recommended.

- Redefine or reduce the size of the study area so that the processes within it are all stationary (so they no longer exhibit regional variation).

Additional resources

For more information about using the regression tools, see the following: