The Geographically Weighted Regression tool uses geographically weighted regression (GWR), which is one of several spatial regression techniques used in geography and other disciplines. GWR evaluates a local model of the variable or process you are trying to understand or predict by fitting a regression equation to every feature in the dataset. GWR constructs these separate equations by incorporating the dependent and explanatory variables of the features falling within the neighborhood of each target feature. When using the Geographically Weighted Regression tool, the shape and extent of each neighborhood analyzed is based on the Neighborhood Type and Neighborhood Selection Method parameter values. The tool allows continuous (Gaussian), binary (binomial), or count (Poisson) data as the dependent variable. Use GWR on datasets with at least several hundred features.

Note:

The Multiscale Geographically Weighted Regression tool can be used to perform GWR on data with varying scales of relationships between the dependent and explanatory variables.

Potential applications

The Geographically Weighted Regression tool can be used to answer a variety of questions, including the following:

- Is the relationship between educational attainment and income consistent across the study area?

- Do certain illness or disease occurrences increase with proximity to water features?

- What are the key variables that explain high forest fire frequency?

- Which habitats should be protected to encourage the reintroduction of an endangered species?

- Where are the districts in which children are achieving high test scores? What characteristics seem to be associated? Where is each characteristic most important?

- Are the factors influencing higher cancer rates consistent across the study area?

Inputs

To run the Geographically Weighted Regression tool, provide the Input Features parameter with a field representing the dependent variable and one or more fields representing the explanatory variables. These fields must be numeric and have a range of values. Features that contain missing values in the dependent or explanatory variables will be excluded from the analysis; however, you can use the Fill Missing Values tool to complete the dataset before running the Geographically Weighted Regression tool. Next, you must choose a model type based on the data you are analyzing. It is important to use an appropriate model for the data. Descriptions of the model types and how to determine the appropriate one for the data are below.

Model types

The Geographically Weighted Regression tool provides three types of regression models: continuous, binary, and count. These types of regression are known as ordinary least squares, logistic, and Poisson, respectively. Base the value of the Model Type parameter for the analysis on how the dependent variable was measured or summarized as well as the range of values it contains.

Continuous (Gaussian)

Use the Continuous (Gaussian) option if the dependent variable can take on a wide range of values such as temperature or total sales. Ideally, the dependent variable will be normally distributed. You can create a histogram of the dependent variable to verify that it is normally distributed. If the histogram is a symmetrical bell curve, use a Gaussian model type. Most of the values will be clustered near the mean, with few values departing radically from the mean. There should be as many values on the left side of the mean as on the right (the mean and median values for the distribution are the same). If the dependent variable does not appear to be normally distributed, consider reclassifying it to a binary variable. For example, if the dependent variable is average household income, you can recode it to a binary variable in which 1 indicates above the national median income, and 0 (zero) indicates below the national median income. You can reclassify a continuous field to a binary field using the Reclassify helper function in the Calculate Field tool.
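As a rough illustration of the two checks described above, the following Python sketch compares the mean and median of a field and recodes values to a binary variable. It is not the Reclassify helper function of the Calculate Field tool; the function names, sample incomes, and median threshold are hypothetical.

```python
import statistics


def mean_median_gap(values):
    """Gap between mean and median, in units of standard deviation.

    A value near 0 is consistent with a symmetric distribution. It does
    not prove normality, so still inspect the histogram.
    """
    spread = statistics.pstdev(values)
    if spread == 0:
        return 0.0
    return abs(statistics.mean(values) - statistics.median(values)) / spread


def recode_to_binary(values, threshold):
    """Return 1 where a value is above the threshold and 0 otherwise."""
    return [1 if value > threshold else 0 for value in values]


# Hypothetical average household incomes and national median income.
incomes = [42000, 58000, 75000, 61000, 39000]
national_median = 60000

print(mean_median_gap(incomes))
print(recode_to_binary(incomes, national_median))
```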

Binary (Logistic)

Use the Binary (Logistic) option if the dependent variable can take on one of two possible values such as success and failure or presence and absence. The field containing the dependent variable must be numeric and contain only ones and zeros. Results will be easier to interpret if you code the event of interest, such as success or presence of an animal, as 1, as the regression will model the probability of 1. There must be variation of the ones and zeros in the data both globally and locally. You can use the Neighborhood Summary Statistics tool to calculate standard deviations of local neighborhoods to locate areas that contain all the same value.
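The local variation requirement can be sketched as a nearest-neighbor check. This is only a simplified stand-in for what you might inspect with the Neighborhood Summary Statistics tool; the function name, the coordinates, and the choice of k are assumptions made for illustration.

```python
import math


def neighborhoods_without_variation(points, values, k):
    """Indices of features whose k nearest neighbors (plus the feature
    itself) all share the same binary value."""
    flagged = []
    for i, target in enumerate(points):
        # Sort all features by distance to the target; the target is first.
        order = sorted(range(len(points)),
                       key=lambda j: math.dist(target, points[j]))
        local_values = {values[j] for j in order[:k + 1]}
        if len(local_values) < 2:
            flagged.append(i)
    return flagged


# Hypothetical features along a line: ones in the west, zeros in the east.
points = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0)]
presence = [1, 1, 1, 0, 0, 0]

print(neighborhoods_without_variation(points, presence, k=2))
```

Features flagged by a check like this sit in neighborhoods where a local logistic model has nothing to distinguish, which is why variation is needed locally as well as globally.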

Count (Poisson)

Use the Count (Poisson) option if the dependent variable is discrete and represents the number of occurrences of an event such as a count of crimes. Count models can also be used if the dependent variable represents a rate and the denominator of the rate is a fixed value such as sales per month or number of people with cancer per 10,000 population. The values of the dependent variable cannot be negative or contain decimals.
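A quick way to screen a field before choosing the count model is to confirm that every value is a nonnegative whole number. The sketch below is illustrative only; the function name and sample values are hypothetical.

```python
def is_valid_count_field(values):
    """True when every value is a whole number that is not negative."""
    return all(value >= 0 and float(value).is_integer() for value in values)


# Hypothetical crime counts per district.
print(is_valid_count_field([0, 3, 12, 7]))   # whole, nonnegative values
print(is_valid_count_field([1, 2.5, 4]))     # contains a decimal
print(is_valid_count_field([-1, 2, 3]))      # contains a negative value
```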

Neighborhood types

A neighborhood is the distance band or number of neighbors used for each local regression equation and may be the most important parameter to consider for the Geographically Weighted Regression tool, as it controls how locally the models will be estimated. The shape and extent of the neighborhoods analyzed are based on the values of the Neighborhood Type and Neighborhood Selection Method parameters.

You can choose one of two neighborhood types: a fixed number of neighbors or a distance band. For a fixed number of neighbors, the area of each neighborhood depends on the density of nearby points: neighborhoods are smaller where features are dense and larger where features are sparse. When a distance band is used, the neighborhood size remains constant for each feature in the study area, resulting in more features per neighborhood where features are dense and fewer per neighborhood where they are sparse.
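The difference between the two neighborhood types can be seen in a small Python sketch. It assumes straight-line distances between point coordinates, and the function names and sample points are hypothetical rather than part of the tool.

```python
import math


def knn_radius(points, target, k):
    """Distance to the k-th nearest other point, that is, the extent of a
    neighborhood defined by a fixed number of neighbors."""
    distances = sorted(math.dist(target, p) for p in points if p != target)
    return distances[k - 1]


def count_in_band(points, target, band):
    """Number of other points that fall within a fixed distance band."""
    return sum(1 for p in points
               if p != target and math.dist(target, p) <= band)


# Hypothetical study area: a dense cluster and a sparse cluster.
dense = [(0, 0), (1, 0), (0, 1), (1, 1)]
sparse = [(100, 0), (110, 0), (100, 10), (110, 10)]
points = dense + sparse

# Fixed number of neighbors: the extent grows where features are sparse.
print(knn_radius(points, (0, 0), k=2), knn_radius(points, (100, 0), k=2))

# Distance band: the neighbor count drops where features are sparse.
print(count_in_band(points, (0, 0), band=5),
      count_in_band(points, (100, 0), band=5))
```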

The neighborhood selection method specifies how the size of the neighborhood is determined (the actual distance or number of neighbors used). The neighborhoods selected using the Golden search or Manual intervals options are based on minimizing the value of the corrected Akaike Information Criterion (AICc). Alternatively, you can set a specific neighborhood distance or number of neighbors using the User defined option.

For the Golden search selection method, the tool determines the best values for the distance band or number of neighbors using the golden section search method. This method first finds maximum and minimum distances and tests the AICc at various distances incrementally between them. The maximum distance is the distance at which every feature has half the number of input features as neighbors, and the minimum distance is the distance at which every feature has at least 5 percent of the features in the dataset as neighbors.

The Minimum Search Distance and Maximum Search Distance parameters (for distance band) and Minimum Number of Neighbors and Maximum Number of Neighbors parameters (for number of neighbors) can be used to narrow the search range of the golden search.
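The general idea of a golden section search can be sketched as follows. This is a textbook version of the method, not the tool's implementation; the `fake_aicc` function is a made-up stand-in for fitting GWR at a given distance and returning its AICc, and the search bounds are hypothetical.

```python
import math

INV_PHI = (math.sqrt(5) - 1) / 2  # about 0.618


def golden_section_minimize(score, low, high, tolerance=1.0):
    """Narrow [low, high] around the minimum of a unimodal score function."""
    c = high - INV_PHI * (high - low)
    d = low + INV_PHI * (high - low)
    score_c, score_d = score(c), score(d)
    while high - low > tolerance:
        if score_c < score_d:
            # The minimum lies in [low, d]; reuse c as the new upper probe.
            high, d, score_d = d, c, score_c
            c = high - INV_PHI * (high - low)
            score_c = score(c)
        else:
            # The minimum lies in [c, high]; reuse d as the new lower probe.
            low, c, score_c = c, d, score_d
            d = low + INV_PHI * (high - low)
            score_d = score(d)
    return (low + high) / 2


def fake_aicc(distance):
    """Hypothetical AICc curve with its minimum at a distance of 1200."""
    return (distance - 1200.0) ** 2 / 1000.0 + 500.0


# Hypothetical minimum and maximum search distances.
best_distance = golden_section_minimize(fake_aicc, 100.0, 5000.0)
print(round(best_distance))
```

Each iteration reuses one of the two previous evaluations, so only one new model fit is needed per step. Narrowing the minimum and maximum search values reduces the number of steps needed to reach a given tolerance.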

Note:

If the neighborhood parameters result in more than 1,000 neighbors for a neighborhood, only the closest 1,000 neighbors will be used.

Local weighting scheme

The power of GWR is that it applies a geographical weighting to the features used in each of the local regression equations. Features that are farther away from the regression point are given less weight and have less influence on the regression results for the target feature; features that are closer have more weight in the regression equation. The weights are determined using a kernel, which is a function that determines how quickly weights decrease as distances increase. The Geographically Weighted Regression tool provides two kernel options for the Local Weighting Scheme parameter: Gaussian and Bisquare.

The Gaussian weighting scheme assigns a weight of one to the focal feature, and weights for the neighboring features gradually decrease as the distance from the focal feature increases. For example, if two features are 0.25 bandwidths apart, the resulting weight in the equation will be approximately 0.88. If the features are 0.75 bandwidths apart, the resulting weight will only be approximately 0.32. A Gaussian weighting scheme never reaches zero, but weights for features far away from the regression feature can be quite small and have almost no impact on the regression. When using a Gaussian weighting scheme, every other feature in the input data is a neighboring feature and will be assigned a weight. However, for computational efficiency, when the number of neighboring features exceeds 1,000, only the closest 1,000 are incorporated into each local regression. A Gaussian weighting scheme ensures that each regression feature will have many neighbors and increases the chance that there will be variation in the values of those neighbors. This helps avoid a common problem in geographically weighted regression called local collinearity. Use a Gaussian weighting scheme when the influence of neighboring features becomes smoothly and gradually less important but that influence is always present regardless of how far away the surrounding features are.
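The two example weights above can be reproduced with a Gaussian kernel of the form exp(-2 (d / b)^2), where d is the distance and b is the bandwidth. This form is an assumption inferred from the quoted numbers, not a formula stated for the tool, and the function name is hypothetical.

```python
import math


def gaussian_weight(distance, bandwidth):
    """Gaussian kernel weight (assumed form, inferred from the example
    weights of about 0.88 and 0.32 in the text)."""
    return math.exp(-2.0 * (distance / bandwidth) ** 2)


for fraction in (0.0, 0.25, 0.75, 2.0):
    # Weights shrink with distance but never reach exactly zero.
    print(fraction, round(gaussian_weight(fraction, 1.0), 2))
```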

The bisquare weighting scheme is similar to Gaussian. It assigns a weight of one to the focal feature, and weights for the neighboring features gradually decrease as the distance from the focal feature increases. However, all features outside of the neighborhood specified are assigned zero and do not impact the local regression for the target feature. When comparing a bisquare weighting scheme to a Gaussian weighting scheme with the same neighborhood specifications, weights will decrease more quickly with bisquare. Using a bisquare weighting scheme allows you to specify a distance after which features will have no impact on the regression results. Since bisquare excludes features after a certain distance, there is no guarantee that there will be sufficient features (with influence) in the surrounding neighborhood to produce a good local regression analysis. Use a bisquare weighting scheme when the influence of neighboring features becomes gradually less important, and there is a distance after which that influence is no longer present. For example, regression is often used to model housing prices, and the sales price of surrounding houses is a common explanatory variable. These surrounding houses are called comparable properties. Lending agencies sometimes establish rules that require a comparable house to be within a maximum distance. In this example, a bisquare weighting scheme can be used with a neighborhood equal to the maximum distance specified by the lending institution.
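The cutoff behavior can be illustrated with the bisquare kernel as it is commonly written in the GWR literature, (1 - (d / b)^2)^2 inside the bandwidth and zero outside it. Whether the tool scales the kernel in exactly this way is an assumption; the function name and the lending distance below are hypothetical.

```python
def bisquare_weight(distance, bandwidth):
    """Bisquare kernel weight (standard literature form, assumed here)."""
    if distance >= bandwidth:
        return 0.0  # Features beyond the neighborhood have no influence.
    return (1.0 - (distance / bandwidth) ** 2) ** 2


# Hypothetical lending rule: comparable houses must be within 800 meters.
max_comparable_distance = 800.0
for distance in (0.0, 200.0, 600.0, 800.0, 1500.0):
    print(distance, round(bisquare_weight(distance, max_comparable_distance), 3))
```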

Prediction

You can use the regression model that has been created to make predictions for other features (either points or polygons) in the same study area by providing the features in the Prediction Locations parameter. The prediction locations must have matching fields for each of the explanatory variables in the input features. If the field names from the input features and the prediction locations are not the same, you must match the corresponding fields in the Explanatory Variables to Match parameter. When matching, the fields must be the same type (for example, double type fields cannot be matched to integer type fields).

Coefficient rasters

A primary benefit of GWR compared to most regression models is that it allows you to explore spatially varying relationships. One way to visualize how the relationships between the explanatory variables and the dependent variable vary across space is to create coefficient rasters. When you provide a path name as the Coefficient Raster Workspace parameter value, the Geographically Weighted Regression tool will create coefficient raster surfaces for the model intercept and each explanatory variable. The resolution of the rasters is controlled by the Cell Size environment. A neighborhood is constructed around each raster cell based on the neighborhood type and weighting scheme. Weights are calculated from the center of the raster cell to all the input features within the neighborhood, and these weights are used to calculate a unique regression equation for that raster cell. The coefficients vary from raster cell to raster cell because the neighbors and weights change from cell to cell.
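To show why coefficients differ from cell to cell, the sketch below fits a weighted least squares line with a single explanatory variable at two cell centers. It is a simplified illustration under several assumptions: one explanatory variable, a distance band neighborhood, and the standard bisquare kernel. The function name and the sample features are hypothetical, and the code is not the tool's implementation.

```python
import math


def local_fit(cell_center, features, bandwidth):
    """Weighted least squares fit of y = intercept + slope * x at one cell.

    features: list of (x_coord, y_coord, explanatory, dependent) tuples.
    """
    sw = swx = swy = swxx = swxy = 0.0
    for fx, fy, x, y in features:
        d = math.dist(cell_center, (fx, fy))
        if d >= bandwidth:
            continue  # Outside the neighborhood: zero weight.
        w = (1.0 - (d / bandwidth) ** 2) ** 2  # Assumed bisquare form.
        sw += w
        swx += w * x
        swy += w * y
        swxx += w * x * x
        swxy += w * x * y
    x_mean, y_mean = swx / sw, swy / sw
    slope = (swxy - sw * x_mean * y_mean) / (swxx - sw * x_mean * x_mean)
    return y_mean - slope * x_mean, slope


# Hypothetical features: y = 2x in the west, y = 5x + 1 in the east.
features = [
    (0, 0, 1.0, 2.0), (1, 0, 2.0, 4.0), (2, 0, 3.0, 6.0),
    (100, 0, 1.0, 6.0), (101, 0, 2.0, 11.0), (102, 0, 3.0, 16.0),
]

west_intercept, west_slope = local_fit((1, 0), features, bandwidth=10.0)
east_intercept, east_slope = local_fit((101, 0), features, bandwidth=10.0)
print(west_intercept, west_slope)
print(east_intercept, east_slope)
```

Because each cell draws on a different set of neighbors and weights, the western and eastern cells recover different slopes from the same dataset.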

Note:

There is currently no consensus on how to assess confidence in the coefficients from a GWR model. While t-tests have been used to base an inference on whether the estimated value of coefficients is significantly different than zero, the validity of this approach is still an area of active research. One approach to informally evaluate the coefficients is to divide the coefficient by the standard error provided for each feature as a way of scaling the magnitude of the estimation with the associated standard error and visualize those results, looking for clusters of high standard errors relative to their coefficients.
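The informal scaling described in this note amounts to a per-feature division. The following sketch is illustrative only; the function name and sample values are hypothetical, and the ratios are meant for mapping and visual inspection rather than formal inference.

```python
def scaled_coefficients(coefficients, standard_errors):
    """Divide each local coefficient by its standard error.

    Returns None where the standard error is zero or missing.
    """
    return [coef / se if se else None
            for coef, se in zip(coefficients, standard_errors)]


# Hypothetical local coefficients and standard errors for three features.
print(scaled_coefficients([2.0, -0.5, 1.0], [0.5, 1.0, 0.0]))
```

Ratios close to zero mark features where the standard error is large relative to the coefficient.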

Outputs

The Geographically Weighted Regression tool produces a variety of outputs. A summary of the GWR model and statistical summaries are returned as messages. The tool also generates an output feature class, charts, and, optionally, prediction features and coefficient raster surfaces. The output features and associated charts are automatically added to the Contents pane with a hot and cold rendering scheme applied to model residuals. The diagnostics and charts generated depend on the specified model type.

Continuous (Gaussian)

The Gaussian model type assumes that the values of the dependent variable are continuous.

Output features

In addition to regression residuals, the output features include fields for observed and predicted dependent variable values, condition number, Local R-squared, explanatory variable coefficients, and standard errors. In a map, the output features are added as a layer and symbolized by the standardized residuals. A positive standardized residual means that the dependent variable value is greater than the predicted value (under-prediction), and a negative standardized residual means that the value is less than the predicted value (over-prediction).

The Intercept, Standard Error of the Intercept, Coefficients, Standard Errors for each of the explanatory variables, Predicted, Residual, Standardized Residual, Influence, Cook's D, Local R-Squared, and Condition Number values are also reported. Many of these fields are discussed in How OLS regression works. The Influence and Cook's D values both measure the influence of the feature on the estimation of the regression coefficients. You can use a histogram chart to determine if a few features are more influential than the rest of the dataset. These features are often outliers that distort the estimation of the coefficients, and the model results may improve by removing them and rerunning the tool. The Local R-squared value ranges from 0 to 1 and represents the strength of the correlations of the local model of the feature. The condition number is a measure of the stability of the estimated coefficients. Condition numbers above approximately 1000 indicate instability in the model; this is usually caused by explanatory variables that are highly correlated with each other.

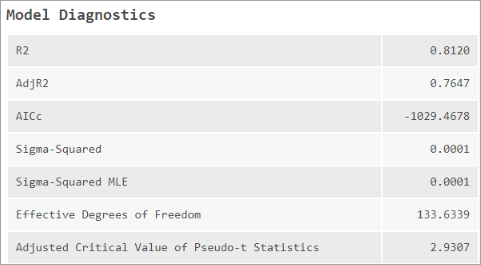

Interpret messages and diagnostics

Analysis details are provided in the messages, including the number of features analyzed, the dependent and explanatory variables, and the number of neighbors specified. In addition, various model diagnostics are reported.

- R2—R-squared is a measure of goodness of fit. Its value varies from 0.0 to 1.0, with higher values being preferable. It may be interpreted as the proportion of dependent variable variance accounted for by the regression model. The denominator for the R2 computation is the sum of squared dependent variable values. Adding an extra explanatory variable to the model does not alter the denominator but does alter the numerator; this gives the impression of improvement in model fit that may not be real. See AdjR2 below.

- AdjR2—Because of the problem described above for the R2 value, calculations for the adjusted R-squared value normalize the numerator and denominator by their degrees of freedom. This has the effect of compensating for the number of variables in a model, and consequently, the adjusted R2 value is almost always less than the R2 value. However, in making this adjustment, you lose the interpretation of the value as a proportion of the variance explained. In GWR, the effective number of degrees of freedom is a function of the neighborhood used, so the adjustment may be marked in comparison to a global model such as that used by the Generalized Linear Regression tool. For this reason, AICc is preferred as a means of comparing models.

- AICc—This is a measure of model performance and can be used to compare regression models. Taking into account model complexity, the model with the lower AICc value provides a better fit to the observed data. AICc is not an absolute measure of goodness of fit but is useful for comparing models with different explanatory variables as long as they apply to the same dependent variable. If the AICc values for two models differ by more than 3, the model with the lower AICc value is considered to be better. Comparing the GWR AICc value to the generalized linear regression (GLR) AICc value is one way to assess the benefits of moving from a global model (GLR) to a local regression model (GWR).

See Gollini et al. in the Additional resources section for the formulas used to compute AICc for all model types.

- Sigma-Squared—This is the least-squares estimate of the variance (standard deviation squared) for the residuals. Smaller values of this statistic are preferable. This value is the normalized residual sum of squares in which the residual sum of squares is divided by the effective degrees of freedom of the residuals. Sigma-Squared is used for AICc computations.

- Sigma-Squared MLE—This is the maximum likelihood estimate (MLE) of the variance (standard deviation squared) of the residuals. Smaller values of this statistic are preferable. This value is calculated by dividing the residual sum of squares by the number of input features.

- Effective Degrees of Freedom—This value reflects a tradeoff between the variance of the fitted values and the bias in the coefficient estimates and is related to the choice of neighborhood size. As the neighborhood approaches infinity, the geographic weights for every feature approach 1, and the coefficient estimates will be very close to those for a global GLR model. For very large neighborhoods, the effective number of coefficients approaches the actual number; local coefficient estimates will have a small variance but will be biased. Conversely, as the neighborhood gets smaller and approaches zero, the geographic weights for every feature approach zero except for the regression point. For extremely small neighborhoods, the effective number of coefficients is the number of observations, and the local coefficient estimates will have a large variance but a low bias. The effective number is used to compute many other diagnostic measures.

- Adjusted Critical Value of Pseudo-t Statistics—This is the adjusted critical value used to test the statistical significance of the coefficients in a two-sided t-test at 95 percent confidence. The value corresponds to a significance level (alpha) of 0.05 divided by the effective degrees of freedom. This adjustment controls the family-wise error rate (FWER) of the significance of the explanatory variables.
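Several of the diagnostics above reduce to simple arithmetic once the residual sum of squares and the degrees of freedom are known. The sketch below follows the descriptions in this list; the function names and sample numbers are hypothetical, and the degrees of freedom are treated as given inputs rather than computed from a fitted model.

```python
def sigma_squared(rss, residual_degrees_of_freedom):
    """Residual sum of squares divided by the effective degrees of
    freedom of the residuals."""
    return rss / residual_degrees_of_freedom


def sigma_squared_mle(rss, feature_count):
    """Residual sum of squares divided by the number of input features."""
    return rss / feature_count


def adjusted_alpha(effective_degrees_of_freedom, alpha=0.05):
    """Significance level divided by the effective degrees of freedom."""
    return alpha / effective_degrees_of_freedom


def preferred_by_aicc(first_aicc, second_aicc, threshold=3.0):
    """Return 'first' or 'second' when the AICc values differ by more
    than the threshold, otherwise None."""
    if abs(first_aicc - second_aicc) <= threshold:
        return None
    return "first" if first_aicc < second_aicc else "second"


# Hypothetical residuals from a small model.
residuals = [1.0, -2.0, 0.5, 1.5]
rss = sum(r * r for r in residuals)

print(sigma_squared(rss, 2.5))
print(sigma_squared_mle(rss, len(residuals)))
print(adjusted_alpha(10.0))
print(preferred_by_aicc(100.0, 104.5))
```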

Output charts

The tool outputs a scatterplot matrix and a histogram to the Contents pane. The scatterplot matrix includes one dependent variable and up to nine explanatory variables. The histogram displays the deviance residual and a normal distribution curve.

Binary (Logistic)

The binary model type assumes that the values of the dependent variable are binary (0 or 1) values.

Feature class and added fields

The output features contain fields for the Intercept (INTERCEPT), Standard Error of the Intercept (SE_INTERCEPT), Coefficients, and Standard Errors for each of the explanatory variables, as well as the Probability of Being 1, Predicted, Deviance Residual, GInfluence, and Local Percent Deviance values.

Interpret messages and diagnostics

Analysis details are provided in the messages including the number of features analyzed, the dependent and explanatory variables, and the number of neighbors specified. In addition, the following diagnostics are reported:

- % deviance explained by the global model (nonspatial)—This is a measure of goodness of fit and quantifies the performance of a global model (GLR). Its value varies from 0.0 to 1.0, with higher values being preferable. It can be interpreted as the proportion of dependent variable variance accounted for by the regression model.

- % deviance explained by the local model—This is a measure of goodness of fit and quantifies the performance of a local model (GWR). Its value varies from 0.0 to 1.0, with higher values being preferable. It can be interpreted as the proportion of dependent variable variance accounted for by the local regression model.

- % deviance explained by the local model vs global model—This proportion is one way to assess the benefits of moving from a global model (GLR) to a local regression model (GWR) by comparing the residual sum of squares of the local model to the residual sum of squares of the global model. Its value varies from 0.0 to 1.0, with higher values signifying the local regression model performed better than a global model.

- AICc—This is a measure of model performance and can be used to compare regression models. Taking into account model complexity, the model with the lower AICc value provides a better fit to the observed data. AICc is not an absolute measure of goodness of fit but is useful for comparing models with different explanatory variables as long as they apply to the same dependent variable. If the AICc values for two models differ by more than 3, the model with the lower AICc value is considered to be better. Comparing the GWR AICc value to the GLR AICc value is one way to assess the benefits of moving from a global model (GLR) to a local regression model (GWR).

- Sigma-Squared—This value is the normalized residual sum of squares in which the residual sum of squares is divided by the effective degrees of freedom of the residual. This is the least-squares estimate of the variance (standard deviation squared) of the residuals. Smaller values of this statistic are preferable. Sigma-Squared is used for AICc computations.

- Sigma-Squared MLE—This is the MLE of the variance (standard deviation squared) of the residuals. Smaller values of this statistic are preferable. This value is calculated by dividing the residual sum of squares by the number of input features.

- Effective Degrees of Freedom—This value reflects a tradeoff between the variance of the fitted values and the bias in the coefficient estimates and is related to the choice of neighborhood size. As the neighborhood approaches infinity, the geographic weights for every feature approach 1, and the coefficient estimates will be very close to those for a global GLR model. For very large neighborhoods, the effective number of coefficients approaches the actual number; local coefficient estimates will have a small variance but will be biased. Conversely, as the neighborhood gets smaller and approaches zero, the geographic weights for every feature approach zero except for the regression point. For extremely small neighborhoods, the effective number of coefficients is the number of observations, and the local coefficient estimates will have a large variance but a low bias. The effective number is used to compute many other diagnostic measures.

- Adjusted Critical Value of Pseudo-t Statistics—This is the adjusted critical value used to test the statistical significance of the coefficients in a two-sided t-test at 95 percent confidence. The value corresponds to a significance level (alpha) of 0.05 divided by the effective degrees of freedom. This adjustment controls the FWER of the significance of the explanatory variables.

Output charts

A scatter plot matrix as well as box plots and a histogram of the deviance residuals are provided.

Count (Poisson)

The Poisson model type assumes that the values of the dependent variable are counts.

Feature class and added fields

The output features contain fields of the Intercept (INTERCEPT), Standard Error of the Intercept (SE_INTERCEPT), Coefficients and Standard Errors for each of the explanatory variables, as well as the predicted value before the logarithmic transformation (RAW_PRED), Predicted, Deviance Residual, GInfluence, Local Percent Deviance, and Condition Number values.

Interpret messages and diagnostics

Analysis details are provided in the messages including the number of features analyzed, the dependent and explanatory variables, and the number of neighbors specified. In addition, the following diagnostics are reported:

- % deviance explained by the global model (nonspatial)—This is a measure of goodness of fit and quantifies the performance of a global model (GLR). Its value varies from 0.0 to 1.0, with higher values being preferable. It can be interpreted as the proportion of dependent variable variance accounted for by the regression model.

- % deviance explained by the local model—This is a measure of goodness of fit and quantifies the performance of the local model (GWR). Its value varies from 0.0 to 1.0, with higher values being preferable. It can be interpreted as the proportion of dependent variable variance accounted for by the local regression model.

- % deviance explained by the local model vs global model—This proportion is one way to assess the benefits of moving from a global model (GLR) to a local regression model (GWR) by comparing the residual sum of squares of the local model to the residual sum of squares of the global model. Its value varies from 0.0 to 1.0, with higher values signifying the local regression model performed better than a global model.

- AICc—This is a measure of model performance and can be used to compare regression models. Taking into account model complexity, the model with the lower AICc value provides a better fit to the observed data. AICc is not an absolute measure of goodness of fit but is useful for comparing models with different explanatory variables as long as they apply to the same dependent variable. If the AICc values for two models differ by more than 3, the model with the lower AICc value is considered to be better. Comparing the GWR AICc value to the GLR AICc value is one way to assess the benefits of moving from a global model (GLR) to a local regression model (GWR).

- Sigma-Squared—This value is the normalized residual sum of squares in which the residual sum of squares is divided by the effective degrees of freedom of the residual. This is the least-squares estimate of the variance (standard deviation squared) of the residuals. Smaller values of this statistic are preferable. Sigma-Squared is used for AICc computations.

- Sigma-Squared MLE—This is the MLE of the variance (standard deviation squared) of the residuals. Smaller values of this statistic are preferable. This value is calculated by dividing the residual sum of squares by the number of input features.

- Effective Degrees of Freedom—This value reflects a tradeoff between the variance of the fitted values and the bias in the coefficient estimates and is related to the choice of neighborhood size. As the neighborhood approaches infinity, the geographic weights for every feature approach 1, and the coefficient estimates will be very close to those for a global GLR model. For very large neighborhoods, the effective number of coefficients approaches the actual number; local coefficient estimates will have a small variance but will be biased. Conversely, as the neighborhood gets smaller and approaches zero, the geographic weights for every feature approach zero except for the regression point. For extremely small neighborhoods, the effective number of coefficients is the number of observations, and the local coefficient estimates will have a large variance but a low bias. The effective number is used to compute many other diagnostic measures.

- Adjusted Critical Value of Pseudo-t Statistics—This is the adjusted critical value used to test the statistical significance of the coefficients in a two-sided t-test at 95 percent confidence. The value corresponds to a significance level (alpha) of 0.05 divided by the effective degrees of freedom. This adjustment controls the FWER of the significance of the explanatory variables.

Output charts

A scatter plot matrix is provided in the Contents pane (including up to 19 variables) as well as a histogram of the deviance residual and normal distribution line.

Other implementation notes and tips

In global regression models, such as GLR, results are unreliable when two or more variables exhibit multicollinearity (when two or more variables are redundant or together tell the same story). The Geographically Weighted Regression tool builds a local regression equation for each feature in the dataset. When the values for a particular explanatory variable cluster spatially, you will likely have problems with local multicollinearity. The condition number in the output features indicates when results are unstable due to local multicollinearity. Be skeptical of results for features with a condition number larger than 30, equal to Null, or, for shapefiles, equal to -1.7976931348623158e+308. The condition number is scale-adjusted to correct for the number of explanatory variables in the model. This allows direct comparison of the condition number between models using different numbers of explanatory variables.
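The screening rule for condition numbers can be written as a small helper. This is a sketch for use on values read from the output features; the function name and sample values are hypothetical, while the threshold of 30 and the shapefile placeholder value come from the text above.

```python
# Placeholder stored in shapefiles, which cannot hold Null values.
SHAPEFILE_NULL = -1.7976931348623158e+308


def is_unreliable(condition_number, threshold=30.0):
    """True when a local result should be treated with skepticism."""
    if condition_number is None or condition_number == SHAPEFILE_NULL:
        return True
    return condition_number > threshold


# Hypothetical condition numbers from four output features.
condition_numbers = [12.0, 45.2, None, SHAPEFILE_NULL]
print([is_unreliable(value) for value in condition_numbers])
```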

Model design errors often indicate a problem with global or local multicollinearity. To determine where the problem is, run the model using the Generalized Linear Regression tool and examine the VIF value for each explanatory variable. If some of the VIF values are large (above 7.5, for example), global multicollinearity is preventing the tool from solving. More likely, however, local multicollinearity is the problem. Try creating a thematic map for each explanatory variable. If the map reveals spatial clustering of identical values, consider removing those variables from the model or combining those variables with other explanatory variables to increase value variation. If, for example, you are modeling home values and have variables for bedrooms and bathrooms, you can combine these to increase value variation or represent them as bathroom/bedroom square footage. Avoid using spatial regime artificial or binary variables for Gaussian or Poisson model types, spatially clustering categorical or nominal variables with the logistic model type, or variables with few possible values when constructing GWR models.

Problems with local multicollinearity can also prevent the tool from resolving an optimal distance band or number of neighbors. Try specifying manual intervals, a user-defined distance band, or specific neighbor counts. Then examine the condition numbers in the output features to see which features are associated with local multicollinearity problems (condition numbers larger than 30). You may want to remove these features temporarily while you find an optimal distance or number of neighbors. Keep in mind that results associated with condition numbers greater than 30 are not reliable.

Additional resources

There are a number of resources to help you learn more about GLR and GWR. Start with Regression analysis basics or work through the Regression Analysis tutorial.

The following are also helpful resources:

Brunsdon, C., Fotheringham, A. S., & Charlton, M. E. (1996). "Geographically weighted regression: a method for exploring spatial nonstationarity". Geographical analysis, 28(4), 281-298.

Fotheringham, Stewart A., Chris Brunsdon, and Martin Charlton. Geographically Weighted Regression: The analysis of spatially varying relationships. John Wiley & Sons, 2002.

Gollini, I., Lu, B., Charlton, M., Brunsdon, C., & Harris, P. (2015). "GWmodel: An R Package For Exploring Spatial Heterogeneity Using Geographically Weighted Models." Journal of Statistical Software, 63(17), 1–50. https://doi.org/10.18637/jss.v063.i17.

Mitchell, Andy. The ESRI Guide to GIS Analysis, Volume 2. ESRI Press, 2005.

Nakaya, T., Fotheringham, A. S., Brunsdon, C., & Charlton, M. (2005). "Geographically weighted Poisson regression for disease association mapping". Statistics in medicine, 24(17), 2695-2717.

Páez, A., Farber, S., & Wheeler, D. (2011). "A simulation-based study of geographically weighted regression as a method for investigating spatially varying relationships". Environment and Planning A, 43(12), 2992-3010.